Spatial Intelligence and the Closed Loop: When AI Understanding of Physical Reality Enables Virtual Captivity

When Fei-Fei Li’s World Labs demonstrated Marble in late 2024, the reaction was immediate: from a handful of photographs, the system reconstructed explorable 3D spaces with startling fidelity. Users could navigate through bedrooms they’d grown up in, virtually walk through architectural plans not yet built, or explore scenes captured from different angles as if physically present.

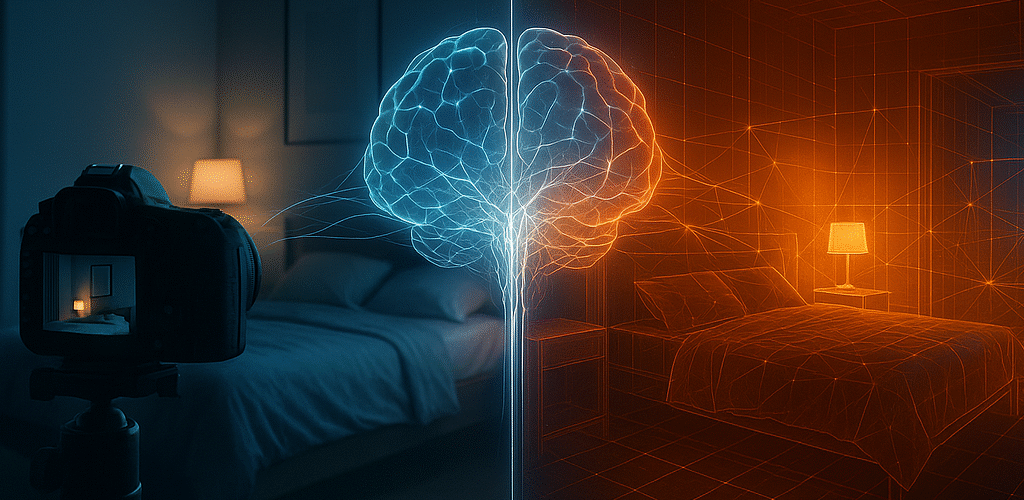

But beneath the surface-level amazement lies a more consequential realization. Spatial intelligence isn’t just another milestone in computer vision — it represents the final missing piece in a technology stack that could fundamentally alter how humans experience reality itself. To understand why, we need to look beyond Li’s stated applications of robotics and AR/VR, and examine what happens when AI systems can generate physically plausible 3D worlds that our brains cannot distinguish from actual perception.

This is the technical foundation for what we might call “brain-closed-loop” systems — where synthetic sensory inputs replace physical reality entirely. And if you’re looking for a preview of where this leads, Black Mirror has been documenting the endgame for years.

The Inflection Point: Why Spatial Intelligence Is Different

Large language models transformed how AI processes text. Diffusion models revolutionized image generation. But both operate in domains our brains recognize as abstractions. We know we’re reading generated text or viewing synthesized images because we maintain awareness of the medium.

Spatial intelligence is fundamentally different because it targets the deepest substrate of human cognition. As Li articulates in her spatial intelligence manifesto, “Spatial Intelligence is the scaffolding upon which our cognition is built. It’s at work when we passively observe or actively seek to create. It drives our reasoning and planning, even on the most abstract topics.”

Before spatial intelligence, AI systems had a critical blind spot: they didn’t understand how the physical world actually works. LLMs can describe gravity but don’t grasp what it means for an object to fall. Multimodal models can identify a coffee cup but have no concept of its weight, how it balances, or how liquid behaves inside it. This gap meant that AI-generated content, no matter how visually impressive, always contained subtle violations of physical law that triggered our brain’s “this isn’t real” detector.

Spatial intelligence closes this gap through several key innovations:

Geometric consistency: Systems like NeRF (Neural Radiance Fields), first introduced by Mildenhall et al. in 2020, can reconstruct 3D scenes from 2D images by learning a continuous volumetric representation. The network maps 5D coordinates — spatial location and viewing direction — to volume density and view-dependent radiance, creating photorealistic novel views that maintain proper perspective and occlusion.
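
The step from that volumetric representation to a pixel color can be illustrated with the front-to-back compositing rule NeRF uses along each camera ray. This is a minimal sketch in plain Python: the function name `composite_ray` and the toy sample values are illustrative assumptions, and a real system would get densities and colors by querying the trained network at each sample point.

```python
import math

def composite_ray(densities, colors, deltas):
    """Alpha-composite samples along one camera ray, front to back.

    densities: volume density (sigma) at each sample
    colors:    (r, g, b) radiance at each sample
    deltas:    spacing between adjacent samples along the ray
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return pixel, transmittance

# Toy ray (made-up values): empty space, a dense red surface, a green one behind it.
pixel, leftover = composite_ray(
    densities=[0.0, 50.0, 50.0],
    colors=[(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    deltas=[0.1, 0.1, 0.1],
)
```

In this toy ray the pixel comes out almost entirely red: the first dense surface absorbs nearly all of the transmittance, so the green surface behind it contributes very little. That is how correct occlusion falls out of the representation rather than being handled as a special case.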

Physical plausibility: World Labs’ approach goes beyond geometry to encode physical relationships. When Marble generates a 3D space, it doesn’t just arrange objects visually — it understands how they relate spatially, how light interacts with surfaces, how shadows fall. This is what Li means by “spatial intelligence” rather than mere 3D reconstruction.

Real-time rendering: 3D Gaussian Splatting, developed by Kerbl et al., achieves real-time rendering of complex 3D scenes by representing them as millions of small Gaussian “splats” rather than neural networks. This breakthrough enables interactive exploration of synthesized spaces at high frame rates — a crucial requirement for immersive experiences.
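
The splat idea can be sketched for a single pixel. This is a deliberately simplified illustration, not the method from the paper: it assumes isotropic Gaussians that have already been projected to screen space, whereas the real technique projects anisotropic 3D Gaussians and rasterizes them in tiles on the GPU. The `Splat` class and `shade_pixel` function are hypothetical names.

```python
import math
from dataclasses import dataclass

@dataclass
class Splat:
    x: float        # screen-space center, in pixels
    y: float
    depth: float    # distance from the camera
    radius: float   # standard deviation of the footprint, in pixels
    opacity: float  # peak opacity in [0, 1]
    color: tuple    # (r, g, b)

def shade_pixel(px, py, splats):
    """Blend every splat that touches one pixel, nearest splat first."""
    out = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for s in sorted(splats, key=lambda s: s.depth):
        d2 = (px - s.x) ** 2 + (py - s.y) ** 2
        # Opacity falls off with a Gaussian profile away from the center.
        alpha = s.opacity * math.exp(-0.5 * d2 / s.radius ** 2)
        for k in range(3):
            out[k] += transmittance * alpha * s.color[k]
        transmittance *= 1.0 - alpha
    return out

# Two overlapping splats (made-up values): the red one is closer to the camera.
splats = [
    Splat(5.0, 5.0, depth=2.0, radius=1.5, opacity=0.9, color=(0.0, 0.0, 1.0)),
    Splat(5.0, 5.0, depth=1.0, radius=1.5, opacity=0.9, color=(1.0, 0.0, 0.0)),
]
center = shade_pixel(5.0, 5.0, splats)
far_away = shade_pixel(50.0, 50.0, splats)
```

Because shading is a sort followed by a simple blend, with no network evaluation per sample, this style of rendering lends itself to the high frame rates the paragraph describes.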

The technical significance is this: AI can now generate virtual environments that pass our brain’s spatial reasoning tests. Motion parallax works correctly. Object occlusion behaves naturally. Physical interactions feel right. When these systems reach sufficient fidelity, our spatial cognition — evolved over millions of years to navigate physical reality — can no longer reliably distinguish synthetic from real.
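
Motion parallax is one of those tests that can be stated precisely. Under a pinhole camera model, a sideways camera move shifts nearby points across the image more than distant ones. The sketch below assumes a hypothetical focal length of 800 pixels and made-up distances; the function names are illustrative.

```python
def project_x(point_x, point_z, camera_x=0.0, focal_px=800.0):
    """Horizontal pixel coordinate of a point seen by a pinhole camera."""
    return focal_px * (point_x - camera_x) / point_z

def parallax_shift(point_x, point_z, camera_move, focal_px=800.0):
    """Image-space shift of a point when the camera slides sideways."""
    before = project_x(point_x, point_z, 0.0, focal_px)
    after = project_x(point_x, point_z, camera_move, focal_px)
    return after - before

# Camera slides 0.1 units to the right; one point is 1 unit away, one is 10.
near = parallax_shift(point_x=0.0, point_z=1.0, camera_move=0.1)
far = parallax_shift(point_x=0.0, point_z=10.0, camera_move=0.1)
```

Here the near point shifts by about 80 pixels and the far point by about 8, both to the left. A generated scene whose rendered views violate this inverse-depth relationship will look wrong as soon as the viewer moves their head.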

The Complete Technology Stack

Understanding spatial intelligence as an inflection point requires seeing it within a larger technical architecture. The pathway toward brain-closed-loop systems consists of three layers, each at different stages of maturity:

Layer One: Content Generation

This is where spatial intelligence slots in, and it’s the layer that just crossed a critical threshold:

- Text: Large language models can generate coherent, contextually appropriate text

- 2D Images: Diffusion models create photorealistic still images

- 3D Spaces: Spatial intelligence systems generate explorable, physically consistent environments

- Dynamic Scenes: Emerging systems can model how these spaces change over time

The key advancement is that AI can now produce the raw material for complete sensory experiences. World Labs demonstrates this with Marble: users input images or descriptions, and the system generates detailed 3D environments they can explore. The spaces aren’t just visually convincing — they follow physical rules about how objects exist in relation to each other.

Layer Two: Sensory Interface

This layer handles the technical challenge of delivering synthetic content to human senses:

- Visual: Current VR headsets already approach retinal resolution

- Auditory: Spatial audio systems create convincing directional sound

- Haptic: Force feedback and tactile systems provide basic touch sensation

- Proprioceptive: Brain-computer interfaces like Neuralink’s can potentially stimulate sensory cortex directly

Neuralink has made substantial progress here. As of early 2025, three individuals have received implants allowing them to control computer interfaces through neural signals. The company’s N1 device uses fine electrode threads inserted directly into the brain to capture neural activity. Critically, while reading brain signals (output) has been demonstrated, writing signals (input) to create sensory experiences remains experimental.

Layer Three: The Closed Loop

The convergence point where synthetic content meets neural interface:

Spatially intelligent content (World Labs) + multi-channel sensory delivery (VR + BCI) = brain cannot distinguish virtual from physical = closed loop established

Here’s the crucial insight: you don’t need perfect sensory coverage to achieve a closed loop. Research on VR immersion shows that visual and auditory channels alone can create strong presence effects. Our brains fill in missing sensory information based on what we do perceive, the same mechanism that makes the memory reconstruction in the Black Mirror episode Eulogy compelling even though it relies primarily on visual cues.

Black Mirror’s Technological Unity

If this technical pathway sounds familiar, it should. Black Mirror has been systematically exploring brain-closed-loop scenarios for over a decade. What appeared to be disparate technologies across different episodes actually represents variations on a single underlying architecture:

USS Callister and Striking Vipers: Complete virtual worlds where users’ consciousness operates in synthetic environments. The technology reads neural intent (how users want to move, what they want to do) and writes sensory experience (what they see, hear, and feel). The spaces themselves must be spatially coherent for immersion to work — exactly what spatial intelligence enables.

San Junipero: A variant where consciousness persists in virtual space after physical death. The technical requirement is identical: generate convincing virtual environments and interface them with neural patterns that constitute subjective experience.

Playtest: A horror game that dynamically generates content based on the user’s fears, accessed through a spinal interface. The implant reads neural patterns to understand what terrifies the user, then writes synthetic experiences tuned to those patterns. The episode’s central terror comes from the interface creating experiences indistinguishable from reality.

White Christmas: Consciousness is copied and placed in isolated virtual environments for various purposes. The “cookies” experience time and space entirely through synthetic sensory input — they’re consciousness in closed loops.

Men Against Fire: Soldiers have neural implants that override visual perception, making them see humans as threatening “roaches.” This represents selective sensory override — the brain-computer interface doesn’t need to generate complete environments, just modify specific perceptual channels.

The technical pattern is consistent across all these scenarios:

- Generate convincing virtual content (what spatial intelligence enables)

- Interface with neural perception (what BCIs enable)

- Result: subjective experience decouples from physical reality

The differences between episodes are implementation details: single-player versus multiplayer, temporary versus permanent, voluntary versus coerced. But the underlying technology stack is unified.

From Spatial Intelligence to Closed Loop: Gap Analysis

So how far are we from these scenarios becoming technically feasible? Let’s examine the remaining gaps:

Gap 1: Content Generation Fidelity (Rapidly Closing)

Status: World Labs’ Marble can generate explorable 3D spaces from limited input. NeRF and 3D Gaussian Splatting achieve photorealistic rendering.

Remaining challenge: Real-time generation of fully dynamic, interactive environments at scale. Current systems excel at reconstructing static scenes; generating novel spaces with complex physics and interaction remains computationally demanding.

Timeline: The technical trajectory suggests this gap will close within several years. The fundamental breakthroughs have happened; what remains is engineering optimization.

Gap 2: Display Technology (Largely Solved)

Status: Modern VR headsets approach retinal resolution, achieve low-latency tracking, and support room-scale movement. Spatial audio is convincing.

Remaining challenge: Field of view limitations, weight, battery life — essentially engineering refinements rather than fundamental research problems.

Timeline: Current generation devices already enable strong immersion effects. Incremental improvements will continue, but the core capability exists.

Gap 3: Haptic Feedback (Partial Solution)

Status: Controllers provide vibration and basic force feedback. Gloves and suits offer limited tactile sensation.

Remaining challenge: Full-body haptic feedback, temperature sensation, pain simulation (if desired). These are harder engineering problems.

Key insight: Incomplete haptic feedback may not matter much. Our brains are remarkably good at filling in missing sensory information when other channels are convincing. This is why people report strong presence in VR despite limited touch feedback.

Gap 4: Brain-Computer Interfaces (Biggest Bottleneck)

Status: Neuralink and competitors can read neural signals to decode intent (moving cursor, typing). This enables control of virtual environments.

Remaining challenge: Writing sensory information directly to cortex at sufficient resolution and bandwidth. This is the most difficult technical barrier.

Critical question: Is direct neural writing necessary? A less invasive path uses synthetic environments (spatial intelligence) delivered through conventional displays (VR/AR). This “peripheral” approach might achieve closed-loop effects without requiring BCI.

Timeline: Reading neural signals for control: functional now. Writing rich sensory information: plausibly a decade or more away for invasive approaches, potentially much longer for non-invasive methods.

The Minimum Viable Closed Loop

Here’s the provocative implication: we may not need to wait for all gaps to close. A minimally viable closed loop might only require:

- High-fidelity 3D environments (spatial intelligence) ✓

- Immersive visual/auditory delivery (current VR) ✓

- Extended immersion time ✓

- Compelling content/social dynamics ✓

The brain’s tendency to fill in gaps and adapt to virtual environments means that with sufficient time and motivation to remain immersed, even current-generation technology can create scenarios where users increasingly prefer synthetic to physical experiences — not because they’re deceived, but because they find the virtual environment more rewarding.

Some heavy VR users already report that physical reality feels less “real” after extended virtual sessions. The loop doesn’t need to be perfect; it just needs to be preferable.

The Design Crossroads

This brings us to the central question: Li’s spatial intelligence technology can follow two radically different paths.

Path A: Enhancement

Vision: AI understands the physical world to help humans navigate and interact with it more effectively.

Applications:

- Robots that can safely operate in human environments

- AR systems that provide useful environmental information

- Architectural and design tools that let creators visualize projects

- Medical systems that help surgeons plan procedures in 3D

Key characteristic: Technology mediates between humans and physical reality, but humans remain embodied and physically situated. The locus of experience stays in the physical world.

Path B: Replacement

Vision: AI generates synthetic worlds that replace rather than enhance physical experience.

Applications:

- Immersive virtual environments for entertainment

- Social platforms in synthetic spaces

- Training simulations that feel indistinguishable from reality

- Eventually, persistent virtual existence

Key characteristic: Technology creates an alternative reality that becomes the primary locus of experience. Physical embodiment becomes optional or purely functional (maintaining biological processes while consciousness operates elsewhere).

The critical observation: Both paths use identical technology. The same spatial intelligence that lets a robot navigate a room can generate a virtual room a human navigates. The difference isn’t technical capability — it’s design intent and system architecture.

Design Choices That Matter

If we want spatial intelligence to enable enhancement rather than drift toward replacement, several design principles become crucial:

1. Physical Anchoring

Enhancement approach: Systems that maintain awareness of physical context. AR rather than VR as default paradigm. Time limits on immersive sessions. Requirements for physical movement and environmental awareness.

Replacement approach: Systems optimized for prolonged immersion. Comfortable seated or reclining positions. Social dynamics that reward extended presence. Economic incentives tied to time spent in virtual space.

The design choice: Do we build systems that pull users back to physical reality, or ones that make staying in synthetic reality increasingly comfortable?
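
As a concrete illustration of the enhancement side of this choice, session time limits can be expressed as a small policy object. This is a hypothetical sketch: the thresholds, the action names, and the `AnchoringPolicy` class are assumptions made for illustration, not settings from any real headset or platform.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AnchoringPolicy:
    warn_after_min: float = 30.0  # hypothetical threshold for a break reminder
    end_after_min: float = 45.0   # hypothetical threshold for ending immersion

def session_action(minutes_immersed, policy=AnchoringPolicy()):
    """Decide what the system should do at this point in a session."""
    if minutes_immersed >= policy.end_after_min:
        return "return_to_passthrough"  # hand the user back to the physical room
    if minutes_immersed >= policy.warn_after_min:
        return "show_break_reminder"
    return "continue"

actions = [session_action(m) for m in (10, 30, 50)]
```

A replacement-oriented system would simply have no such policy, or would tune the same thresholds upward to maximize time spent immersed.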

2. Transparency of Synthesis

Enhancement approach: Clear labeling of AI-generated content. Users always know when they’re interacting with synthetic versus captured environments. Distinct visual/audio markers for virtual elements.

Replacement approach: Seamless blending of real and synthetic. Virtual environments designed to be indistinguishable from physical ones. Social pressures against “breaking immersion” by acknowledging artificiality.

The design choice: Do we design for informed consent about the nature of experience, or for maximum immersion?
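
One way to make transparency an architectural property rather than a user-interface afterthought is to carry provenance on every scene element. The sketch below is hypothetical: `Provenance`, `SceneElement`, and `disclosure_summary` are illustrative names, not part of any existing engine or standard.

```python
from dataclasses import dataclass
from enum import Enum

class Provenance(Enum):
    CAPTURED = "captured"    # reconstructed from real sensor data
    SYNTHETIC = "synthetic"  # generated by a model

@dataclass
class SceneElement:
    name: str
    provenance: Provenance

def disclosure_summary(elements):
    """Summarize how much of a scene is AI-generated, for display to the user."""
    synthetic = [e.name for e in elements if e.provenance is Provenance.SYNTHETIC]
    return {
        "total": len(elements),
        "synthetic_count": len(synthetic),
        "synthetic_names": synthetic,
        "fully_captured": not synthetic,
    }

scene = [
    SceneElement("living_room_walls", Provenance.CAPTURED),
    SceneElement("sofa", Provenance.CAPTURED),
    SceneElement("window_view", Provenance.SYNTHETIC),
]
summary = disclosure_summary(scene)
```

If the label travels with the data, a renderer can draw distinct markers for synthetic elements. A system that discards provenance at generation time cannot offer that option later.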

3. Exit Design

Enhancement approach: Systems designed for easy disengagement. Low switching costs between virtual and physical. No penalties for reduced usage. Social structures that value both modes of experience.

Replacement approach: High switching costs (social connections exist primarily in virtual space). Network effects that make leaving costly. Economic or social penalties for non-participation.

The design choice: Do we design exits, or do we design stickiness?

4. The Embodiment Question

Enhancement approach: Technology extends physical capabilities but maintains primacy of physical embodiment. Robots and AR systems that operate in shared physical space.

Replacement approach: The physical body becomes a life-support system while subjective experience occurs in synthetic spaces. Embodiment becomes optional or configurable (as in Striking Vipers’ avatar bodies).

The design choice: Do we design for enhanced physical presence or synthetic presence?

Not Technological Determinism

The crucial point is this: spatial intelligence doesn’t inevitably lead to Black Mirror scenarios. The technology enables both enhancement and replacement paths. Which one we get depends on deliberate design choices made now, while these systems are still being architected.

However, there’s an uncomfortable economic reality: replacement paths are often more profitable. Systems that maximize engagement time, create strong network effects, and establish high switching costs tend to generate more value for platform operators. The market, left to its own devices, has incentives to build toward closed loops rather than enhancements.

This is why design choices matter at the foundational level. If spatial intelligence systems are architected with enhancement principles (physical anchoring, transparency, easy exits, primacy of embodiment), those constraints become embedded in the infrastructure. If they’re built purely for maximum immersion and engagement, the replacement path becomes the default.

We’re at the inflection point now. World Labs’ Marble, Neuralink’s progress, the rapid advancement of VR technology — these aren’t separate developments but convergent pieces of a larger system. The technical capability for brain-closed-loop scenarios is approaching feasibility.

The question isn’t whether AI will be able to generate virtual worlds indistinguishable from physical reality. Spatial intelligence has fundamentally answered that: yes, it will. The question is what we choose to build with that capability.

The Developer’s Choice

If you’re working on spatial reconstruction algorithms, training world models, or designing immersive interfaces, you’re not just solving technical problems. You’re building infrastructure that could either enhance human agency in physical reality or enable wholesale replacement of physical experience with synthetic alternatives.

This isn’t a philosophical question — it’s a design question. It comes down to specific technical decisions:

- Do you optimize for extended immersion or periodic engagement with physical reality?

- Do you design for transparency about synthetic content or maximum believability?

- Do you build low-friction exits or optimize for retention?

- Do you architect for physical anchoring or pure virtuality?

The technology will enable both paths. Which one we get depends on the choices made in system architecture, interface design, and business model structure. These choices are being made right now, in labs and startups working on spatial intelligence, brain-computer interfaces, and immersive systems.

Li’s spatial intelligence is indeed revolutionary, though not because it advances robotics or enables AR, although it does both. It’s revolutionary because it completes the technical stack necessary for human consciousness to operate primarily in synthetic rather than physical reality. Whether that capability becomes a liberating tool or an inescapable trap depends on intentional design choices, not technological inevitability.

The question for anyone building in this space: Are you architecting for enhancement or replacement? Because the default path — the one that requires no conscious intervention — leads straight to the closed loop.

References

[1] Li, F. F. (2024). From Words to Worlds: Spatial Intelligence is AI’s Next Frontier. Manifesto.

[2] World Labs. (2024). Marble: Foundation for Spatially Intelligent Future. Product.

[3] Mildenhall, B., Srinivasan, P. P., Tancik, M., Barron, J. T., Ramamoorthi, R., & Ng, R. (2020). NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. Paper.

[4] Kerbl, B., Kopanas, G., Leimkühler, T., & Drettakis, G. (2023). 3D Gaussian Splatting for Real-Time Radiance Field Rendering. Paper.

[5] Neuralink. (2025). Progress Updates on Brain-Computer Interface Development. Updates.

[6] MIT Technology Review. (2025). What to Expect from Neuralink in 2025. Analysis.

[7] Brooker, C. (Creator). (2011–2023). Black Mirror [TV Series]. Netflix/Channel 4.

This story was originally published in Generative AI on Medium.