GraphRAG has been a topic of much interest since it was introduced by Microsoft in early 2024. While most of the content online focuses on the technical implementation, from a practitioner’s perspective it is worth exploring when the incremental value of GraphRAG over naïve RAG justifies the additional architectural complexity and investment. So here, I will attempt to answer the following questions, which are crucial for a scalable and robust GraphRAG design:

- When is GraphRAG needed? What factors would help you decide?

- If you decide to implement GraphRAG, what design principles should you keep in mind to balance complexity and value?

- Once you have implemented GraphRAG, will you be able to answer any and all questions about your document store with equal accuracy? Or are there limits you should be aware of, and methods you should implement to overcome them wherever feasible?

GraphRAG vs Naïve RAG Pipeline

In this article, all figures are drawn by me, images are generated using Copilot, and the documents used to build the graph were generated using ChatGPT.

A typical naïve RAG pipeline would look as follows:

In contrast, a GraphRAG embedding pipeline would look like the following. The retrieval and response generation steps are discussed in a later section.

While there can be variations in how the GraphRAG pipeline is built and how context is retrieved for response generation, the key differences from naïve RAG can be summarised as follows:

- During data preparation, documents are parsed to extract entities and relations, which are then stored in a graph.

- Optionally, but preferably, the node values and relations are embedded using an embedding model and stored for semantic matching.

- Finally, the documents are chunked and embedded, and the indexes are stored for similarity retrieval. This step is common with naïve RAG.

When is GraphRAG needed?

Consider the case of a search assistant for Law Enforcement, where the corpus consists of voluminous investigation reports filed over the years. Each report has a Report ID at the top of the first page. The rest of the document describes the persons involved and their roles (accused, victims, witnesses, enforcement personnel etc.), applicable legal provisions, incident description, witness statements, assets seized etc.

Although I focus on design principles here, for the technical implementation I used Neo4j as the graph database, GPT-4o for entity and relation extraction, reasoning and response generation, and text-embedding-3-small for embeddings.

The following factors should be taken into account for deciding if GraphRAG is needed:

Long Documents

A naïve RAG would lose context or relationships between data points due to the chunking process. So a query such as “What is the Report ID where car no. PYT1234 was involved?” is unlikely to return the right answer if the car number is not in the same chunk as the Report ID, which in this case would be in the first chunk. Therefore, if you have long documents with lots of entities (people, places, institutions, asset identifiers etc.) spread across the pages, and you would like to query for relations between them, consider GraphRAG.
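A toy illustration of the problem, assuming a naïve fixed-size chunker that splits on word count with no overlap (production splitters usually count tokens and may overlap chunks, but a modest overlap would not bridge entities that sit pages apart). The report text is invented for illustration.

```python
def chunk_words(text: str, size: int) -> list[str]:
    """Split text into consecutive chunks of at most `size` words (no overlap)."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

# Hypothetical report: the ID is on the first page, the vehicle appears much later.
report = (
    "Report ID: SYN-REP-1234. "
    + "Filler narrative about the incident and persons involved. " * 40
    + "A white sedan, car no. PYT1234, was seen leaving the premises."
)

chunks = chunk_words(report, size=100)
id_chunks = [i for i, c in enumerate(chunks) if "SYN-REP-1234" in c]
car_chunks = [i for i, c in enumerate(chunks) if "PYT1234" in c]
print(len(chunks), id_chunks, car_chunks)  # → 4 [0] [3]
```

No single chunk contains both strings, so a similarity search that returns the chunk about the car cannot, on its own, supply the Report ID.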

Cross-Document Context

A naïve RAG cannot connect information across multiple documents. If your queries require cross-linking of entities across documents, or aggregations over the entire corpus, you will need GraphRAG. For instance, queries such as:

“How many burglary reports are from Mumbai?”

“Are there individuals accused in multiple cases? What are the relevant Report IDs?”

“Tell me details of cases related to Bank ABC”

These kinds of analytics-based queries are expected in a corpus of related documents, and they enable the identification of patterns across otherwise unrelated events. Another example could be a hospital management system where, given a set of symptoms, the application should respond with similar previous patient cases and the lines of treatment adopted.
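Once entities hang off a Report ID, such corpus-wide questions become simple traversals and aggregations. A minimal in-memory sketch, assuming star-graph edges stored as (report_id, relation, entity) triples; the relation names (including a crime-type relation `HAS_TYPE`) and the data are hypothetical. In Neo4j the same questions would typically be answered with a Cypher `MATCH` plus an aggregation such as `count()`.

```python
from collections import defaultdict

# Hypothetical star-graph edges: (report_id, relation, entity)
triples = [
    ("SYN-REP-0001", "HAS_TYPE", "Burglary"),
    ("SYN-REP-0001", "LOCATED_IN", "Mumbai"),
    ("SYN-REP-0001", "ACCUSED", "Ravi Kumar"),
    ("SYN-REP-0003", "HAS_TYPE", "Burglary"),
    ("SYN-REP-0003", "LOCATED_IN", "Mumbai"),
    ("SYN-REP-0007", "HAS_TYPE", "Fraud"),
    ("SYN-REP-0007", "LOCATED_IN", "Pune"),
    ("SYN-REP-0007", "ACCUSED", "Ravi Kumar"),
    ("SYN-REP-0007", "WITNESS", "Anita Rao"),
]

def reports_linked_to(entity: str, relation: str) -> set[str]:
    """Report IDs whose star cluster contains `entity` via `relation`."""
    return {r for r, rel, e in triples if rel == relation and e == entity}

# "How many burglary reports are from Mumbai?": intersect two neighbourhoods.
burglary_in_mumbai = reports_linked_to("Burglary", "HAS_TYPE") & reports_linked_to("Mumbai", "LOCATED_IN")

# "Are there individuals accused in multiple cases?": group reports by accused person.
reports_by_accused = defaultdict(set)
for r, rel, e in triples:
    if rel == "ACCUSED":
        reports_by_accused[e].add(r)
repeat_accused = {p: sorted(rs) for p, rs in reports_by_accused.items() if len(rs) > 1}

print(len(burglary_in_mumbai), repeat_accused)
# → 2 {'Ravi Kumar': ['SYN-REP-0001', 'SYN-REP-0007']}
```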

Given that most real-world applications require this capability, are there applications where GraphRAG would be overkill and naïve RAG is good enough? Possibly, for datasets such as company HR policies, where each document deals with a distinct topic (vacation, payroll, health insurance etc.) and the content is structured such that entities, their relations, and cross-document linkages are usually not the focus of queries.

Search Space Optimization

While the above capabilities of GraphRAG are generally known, what is less evident is that it is an excellent filter for narrowing the search space of a query down to the most relevant documents. This is extremely important for a large corpus of thousands or millions of documents, where a vector cosine similarity search simply loses granularity as the number of chunks increases, degrading the quality of the chunks selected for a query's context.

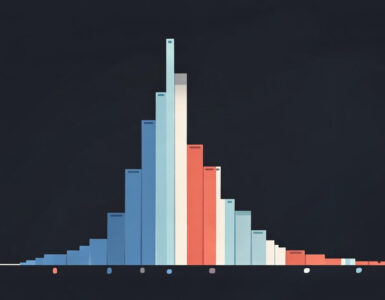

This is not hard to visualize. Geometrically speaking, a normalised unit vector representing a chunk is just a dot on the surface of an N-dimensional sphere (N being the number of dimensions generated by the embedding model). As more and more dots are packed onto that surface, they crowd together and become dense, to the point where it is hard to distinguish any one dot from its neighbors when a cosine match is calculated for a given query.
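A quick way to see this crowding numerically is to scatter random unit vectors on a sphere and track how similar the closest pair becomes as more are added. This is a toy sketch under simplifying assumptions: uniformly random vectors in 8 dimensions stand in for real chunk embeddings. Because each larger set contains the smaller one, the closest-pair similarity can only stay the same or rise.

```python
import math
import random

def random_unit_vector(dim: int, rng: random.Random) -> list[float]:
    """Sample a point uniformly on the unit sphere by normalising a Gaussian vector."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def closest_pair_cosine(vectors: list[list[float]]) -> float:
    """Highest cosine similarity between any two vectors (a plain dot product for unit vectors)."""
    best = -1.0
    for i, vi in enumerate(vectors):
        for vj in vectors[i + 1:]:
            best = max(best, sum(a * b for a, b in zip(vi, vj)))
    return best

rng = random.Random(42)
DIM = 8  # toy dimensionality; real embedding models use hundreds or thousands of dimensions
points = [random_unit_vector(DIM, rng) for _ in range(800)]

# Each prefix contains the previous one, so the closest pair can only get closer.
closest = {n: closest_pair_cosine(points[:n]) for n in (50, 200, 800)}
for n, cos in closest.items():
    print(f"{n:>4} chunks: closest pair cosine = {cos:.3f}")
```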

Explainability

This is a corollary of the dense embedding search space. It is hard to explain why certain chunks are matched to a query and others are not, and the accuracy of semantic matching using cosine similarity plateaus: beyond a certain point, techniques such as enriching the query with prompts before matching stop improving the quality of the chunks retrieved for context.

GraphRAG Design principles

For a practical solution balancing complexity, effort and cost, the following principles should be considered while designing the Graph:

What nodes and relations should you extract?

It is tempting to send the full document to the LLM and ask it to extract all entities and their relations. Indeed, this is what LangChain’s ‘LLMGraphTransformer’ (commonly used to populate Neo4j) will attempt if you invoke it without a custom prompt. However, for a large document (10+ pages), this extraction will take a very long time, and the result will also be sub-optimal due to the complexity of the task. When you have thousands of documents to process, this approach will not work. Instead, focus on the most important entities and relations that will be frequently referred to in queries, and create a star graph connecting all these entities to a central node (the Report ID for the crime database, a patient ID for a hospital application, and so on).

For instance, for the crime reports data, the relation of a person to the Report ID (accused, witness etc.) is important, whereas whether two people belong to the same family is perhaps less so. However, for a genealogy search, familial relations are the core reason for building the application.

Mathematically, too, it is easy to see why a star graph is a better approach. A document with K entities can potentially have K choose 2, i.e. K(K-1)/2, relations, assuming there exists only one type of relation between any two entities. For a document with 20 entities, that would mean 190 relations. On the other hand, a star graph connecting 19 of the nodes to 1 key node would mean 19 relations, a 90% reduction in complexity.
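The arithmetic generalises neatly. Under the same one-relation-per-pair assumption, the ratio of star-graph relations to all pairwise relations is 2/K, so the savings grow with the number of entities per document:

```latex
\binom{K}{2} = \frac{K(K-1)}{2}, \qquad \binom{20}{2} = \frac{20 \cdot 19}{2} = 190

\frac{\text{star relations}}{\text{all pairwise relations}}
  = \frac{K-1}{K(K-1)/2} = \frac{2}{K},
\qquad 1 - \frac{2}{20} = 0.9 \;(\text{a } 90\% \text{ reduction})
```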

With this approach, I extracted only persons, places, vehicle plate numbers, amounts and institution names (but not legal section IDs or assets seized) and connected them to the Report ID. A graph of 10 case reports looks like the following and takes only a couple of minutes to generate.
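One way to turn an extractor's output into such a star graph is to generate parameterised Cypher `MERGE` statements, one per entity, all pointing at the Report node. A minimal sketch, assuming the extractor returns (label, name, relation) tuples; `star_graph_statements` and the label and relationship names are illustrative, not a fixed schema. The sketch interpolates labels and relationship types into the query text (rather than relying on newer dynamic-label syntax), so it validates them against allow-lists first. The source document name is stored on the Report node.

```python
# Hypothetical allow-lists for the entity types and roles extracted in this design.
ALLOWED_LABELS = {"Person", "Place", "Vehicle", "Amount", "Institution"}
ALLOWED_RELATIONS = {"ACCUSED", "VICTIM", "WITNESS", "OFFICER",
                     "LOCATION", "VEHICLE", "AMOUNT", "INSTITUTION"}

def star_graph_statements(report_id: str, source: str,
                          entities: list[tuple[str, str, str]]) -> list[tuple[str, dict]]:
    """Build (cypher, params) pairs that link every extracted entity to a single Report node.

    `entities` holds (label, name, relation) tuples, e.g. parsed from an LLM extraction.
    """
    statements = [(
        "MERGE (r:Report {id: $report_id}) SET r.source = $source",
        {"report_id": report_id, "source": source},
    )]
    for label, name, relation in entities:
        # Labels and relationship types are interpolated into the query text, so whitelist them.
        if label not in ALLOWED_LABELS or relation not in ALLOWED_RELATIONS:
            raise ValueError(f"Unexpected label or relation: {label!r}, {relation!r}")
        cypher = (
            "MATCH (r:Report {id: $report_id}) "
            f"MERGE (e:{label} {{name: $name}}) "
            f"MERGE (e)-[:{relation}]->(r)"
        )
        statements.append((cypher, {"report_id": report_id, "name": name}))
    return statements
```

Each (cypher, params) pair could then be executed with the official `neo4j` Python driver, e.g. `session.run(cypher, params)`, ideally in one transaction per report. Because entity nodes are merged on name, the same person or place appearing in several reports becomes a single node linked to several Report clusters.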

Adopt complexity iteratively

In the first phase (or MVP) of the project, focus on the most high-value and frequent queries, and build the graph for the entities and relations in those. This should cover roughly 70-80% of the search requirements. For the remainder, you can enhance the graph in subsequent iterations, extracting additional nodes and relations and merging them into the existing graph clusters. A caveat is that as new data keeps getting generated (new cases, new patients etc.), these new documents have to be parsed for all the entities and relations in one go. For instance, in a 20-entity graph cluster, the minimal star cluster has 19 relations and 1 key node. Assume that in the next iteration you add assets seized, creating 5 additional nodes and, say, 15 more relations. If this document had arrived as a new document, you would need to create all 25 entities and 34 relations in one extraction job.
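The caveat can be made concrete by treating each iteration's extraction schema as a set of entity types. A minimal sketch with hypothetical schema names: existing documents only need a backfill of the types added in the new iteration, whereas a newly arrived document needs the full, current schema extracted in one job.

```python
# Hypothetical extraction schemas for two iterations of the project.
SCHEMA_V1 = {"Person", "Place", "Vehicle", "Amount", "Institution"}
SCHEMA_V2 = SCHEMA_V1 | {"AssetSeized"}

def entity_types_to_extract(already_extracted: set[str], current_schema: set[str]) -> set[str]:
    """Entity types a document still needs under the current schema."""
    return current_schema - already_extracted

# Backfill of an existing document: only the delta from the new iteration.
backfill = entity_types_to_extract(SCHEMA_V1, SCHEMA_V2)   # {'AssetSeized'}
# A newly arrived document has nothing extracted yet, so it needs the full schema in one job.
new_document = entity_types_to_extract(set(), SCHEMA_V2)   # all six entity types
```

Recording which schema version each document was processed with makes it straightforward to decide between a cheap backfill and a full extraction.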

Use the graph for classification and context, not for user responses directly

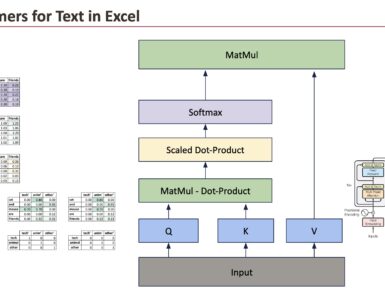

There could be a few variations of the Retrieval and Augmentation pipeline, depending on whether and how you use semantic matching of graph nodes and relations. After some experimentation, I developed the following:

The steps are as below:

- The user query is used to retrieve the relevant nodes and relations from the graph. This happens in two steps. First, the LLM composes a Neo4j Cypher query from the user query. If the query succeeds, we have an exact match of the criteria given in the user query. For example, in the graph I created, a query like “How many reports are there from Mumbai?” will get an exact hit, since in my data Mumbai is connected to multiple Report clusters.

- If the Cypher query does not yield any records, the pipeline falls back to semantically matching the query against the graph node values and relations to find the most similar matches. This is useful when the query is like “How many reports are there from Bombay?”, which will retrieve the Report IDs related to Mumbai, the correct result. However, the semantic matching needs to be carefully controlled, as it can result in false positives, which I shall explain further in the next section.

- Note that in both of the above methods, we try to extract the full cluster around the Report ID connected to the query node, so that we can give as accurate a context as possible to the chunk retrieval step. The logic is as follows:

- If the user query asks about a report by its ID (e.g. “Tell me details about report SYN-REP-1234”), we get the entities connected to the ID (persons, places, institutions etc.). While this query on its own rarely retrieves the right chunks (since embeddings attach little meaning to alphanumeric strings like the report ID), with the additional context of the persons and places attached to it, along with the report ID, we can get the exact document chunks where these appear.

- If the user query is like “Tell me about the incident where car no. PYT1234 was involved”, we first get the Report ID(s) to which this car number is attached in the graph, then for each Report ID, we get all the entities in that cluster, again providing the full context for chunk retrieval.

- The graph result from the first or second step is then provided to the LLM as context, along with the user query, to formulate an answer in natural language instead of the JSON generated by the Cypher query or the node -> relation -> node format of the semantic match. In cases where the user query asks only for aggregated metrics or connected entities (like the Report IDs connected to a car), the LLM output at this stage is usually a good enough response. However, we retain it as an intermediate result called the Graph context.

- Next, the Graph context along with the user query is used to query the chunk embeddings, and the closest chunks are extracted.

- We combine the Graph context with the chunks retrieved for a full Combined Context, which we provide to the LLM to synthesize the final response to the user query.
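The retrieval flow above can be sketched as a small orchestration function. This is a minimal sketch, assuming each stage is supplied as a callable; `graph_rag_answer` and its parameter names are hypothetical placeholders rather than part of Neo4j or any LLM SDK. An empty Cypher result is treated as a miss, which is what triggers the semantic fallback.

```python
from typing import Callable

def graph_rag_answer(
    query: str,
    run_cypher: Callable[[str], list],            # LLM writes Cypher and runs it; [] if no records
    semantic_graph_match: Callable[[str], list],  # fallback: nearest graph nodes/relations
    summarize: Callable[[str, list], str],        # LLM turns graph records into the Graph context
    retrieve_chunks: Callable[[str], list],       # vector search over chunk embeddings
    synthesize: Callable[[str, str], str],        # LLM writes the final answer
) -> str:
    # Exact Cypher match first; fall back to semantic matching only when it returns nothing.
    records = run_cypher(query) or semantic_graph_match(query)
    # Natural-language Graph context, kept as an intermediate result.
    graph_context = summarize(query, records)
    # Enrich the chunk search with the Graph context (IDs, names, places from the cluster).
    chunks = retrieve_chunks(query + "\n" + graph_context)
    # Combined Context = Graph context + retrieved chunks, sent to the LLM for the final response.
    combined_context = graph_context + "\n\n" + "\n".join(chunks)
    return synthesize(query, combined_context)
```

Passing the stages in as functions keeps the control flow testable with stubs, and makes it easy to swap the Cypher generator or the vector store without touching the orchestration.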

Note that in the above approach, we use the Graph as a classifier, to narrow the search space for the user query and find the relevant document clusters quickly, then use that as the context for chunk retrievals. This enables efficient and accurate retrievals from a large corpus, while at the same time providing the cross-entity and cross-document linkage capabilities that are native to a Graph database.
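The classifier idea, together with the cluster expansion described in the retrieval steps, can be sketched in memory. A minimal sketch, assuming star-graph edges as (report_id, relation, entity) triples and chunks that carry their source `report_id` as metadata; the toy 3-dimensional vectors stand in for real chunk embeddings, and in a real pipeline the query vector would come from embedding the user query enriched with the cluster's entity names. Many vector stores offer an equivalent metadata filter, though the exact API differs.

```python
import math

def expand_cluster(triples, entity):
    """Report IDs linked to `entity`, plus every entity in those star clusters.

    `triples` are (report_id, relation, entity) edges of a star graph.
    """
    report_ids = {r for r, _, e in triples if e == entity}
    cluster = sorted({e for r, _, e in triples if r in report_ids})
    return report_ids, cluster

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def search_within_reports(query_vec, chunks, report_ids, k=2):
    """Rank only chunks from the reports the graph selected, instead of the whole corpus."""
    candidates = [c for c in chunks if c["report_id"] in report_ids]
    candidates.sort(key=lambda c: cosine(query_vec, c["vec"]), reverse=True)
    return [c["id"] for c in candidates[:k]]

triples = [
    ("SYN-REP-0001", "VEHICLE", "PYT1234"),
    ("SYN-REP-0001", "ACCUSED", "Ravi Kumar"),
    ("SYN-REP-0002", "ACCUSED", "Anita Rao"),
]
# Toy "embeddings": chunk b is the closest match globally but belongs to the wrong report.
chunks = [
    {"id": "a", "report_id": "SYN-REP-0001", "vec": [0.9, 0.1, 0.0]},
    {"id": "b", "report_id": "SYN-REP-0002", "vec": [1.0, 0.0, 0.0]},
    {"id": "c", "report_id": "SYN-REP-0001", "vec": [0.1, 0.9, 0.0]},
]
report_ids, cluster = expand_cluster(triples, "PYT1234")
top = search_within_reports([1.0, 0.0, 0.0], chunks, report_ids)
print(report_ids, cluster, top)
```

An unfiltered search would rank chunk b first; restricting the candidates to the report selected by the graph keeps only chunks from the incident the query is about.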

Challenges and Limitations

As with any architecture, there are constraints which become evident when put into practice. Some have been discussed above, like designing the graph to balance complexity and cost. A few others to be aware of are as follows:

- As mentioned in the previous section, semantic retrieval of graph nodes and relations can sometimes cause unpredictable results. Consider the case where you query for an entity that has not been extracted into the graph clusters. First, the exact Cypher match fails, which is expected; however, the fallback semantic match will still retrieve what it considers similar matches, even though they are irrelevant to your query. This has the unexpected effect of creating an incorrect Graph context, thereby retrieving incorrect document chunks and producing a response that is factually wrong. This behavior is worse than the RAG replying ‘I don’t know’, and needs to be firmly controlled by detailed negative prompting of the LLM while generating the Graph context, such that the LLM outputs ‘No record’ in such cases.

- When building the graph with an LLM, extracting all entities and relations from the entire document in a single pass will usually miss several of them due to attention drop, even with detailed prompt tuning. This is because LLMs lose recall when documents exceed a certain length. To mitigate this, it is best to adopt a chunking-based entity extraction strategy as follows:

- First, extract the Report ID once.

- Then, split the document into chunks.

- Extract entities chunk by chunk and, because we are creating a star graph, attach the extracted entities to the Report ID.

This is another reason why a star graph is a good starting point for building a graph.
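The chunk-based extraction strategy can be sketched as follows. This is a minimal sketch under stated assumptions: the entity extractor is passed in as a callable (in practice an LLM call with your extraction prompt; the stand-in used below is hypothetical), chunking is by word count, and `REPORT_ID_RE` is a hypothetical pattern modelled on the example IDs in this article.

```python
import re
from typing import Callable, Iterable

# Hypothetical ID pattern modelled on the examples in this article (e.g. SYN-REP-1234).
REPORT_ID_RE = re.compile(r"\bSYN-REP-\d{4}\b")

Extractor = Callable[[str], Iterable[tuple[str, str, str]]]  # chunk -> (label, name, relation)

def extract_star_graph(pages: list[str], chunk_size: int, extract_entities: Extractor):
    # 1. Extract the Report ID once, from the first page only.
    match = REPORT_ID_RE.search(pages[0])
    if match is None:
        raise ValueError("No Report ID found on the first page")
    report_id = match.group(0)

    # 2. Split the whole document into fixed-size word chunks.
    words = " ".join(pages).split()
    chunks = [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]

    # 3. Extract entities chunk by chunk and attach each one to the Report ID.
    #    A set removes duplicates when the same entity appears in several chunks.
    edges = set()
    for chunk in chunks:
        for label, name, relation in extract_entities(chunk):
            edges.add((report_id, relation, label, name))
    return report_id, sorted(edges)
```

Because every edge points at the same Report ID, each chunk can be processed independently (even in parallel) without the extractor needing to see the rest of the document.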
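Returning to the first limitation above: besides negative prompting, one complementary guard (a suggestion, not part of the pipeline described in this article) is a similarity floor on the semantic fallback, so that weak matches produce ‘No record’ instead of a misleading Graph context. A minimal sketch, assuming unit-normalised node embeddings; `guarded_semantic_match` is a hypothetical helper and the 0.85 threshold is a placeholder that would need tuning on your own queries.

```python
NO_RECORD = "No record"

def guarded_semantic_match(query_vec, node_index, min_similarity=0.85):
    """Return graph node names similar to the query, or NO_RECORD if none clears the bar.

    `node_index` holds (node_name, unit_vector) pairs and `query_vec` is a unit vector,
    so the dot product equals cosine similarity. `min_similarity` is a placeholder to tune.
    """
    scored = sorted(
        ((sum(a * b for a, b in zip(query_vec, vec)), name) for name, vec in node_index),
        reverse=True,
    )
    hits = [name for score, name in scored if score >= min_similarity]
    return hits or NO_RECORD
```

A deterministic floor like this is easy to audit, while the prompt-level instruction still handles cases where a match clears the threshold but is irrelevant in context.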

- Deduplication and normalization: It is important to deduplicate names before inserting them into the graph, so that common entity linkages across multiple Report clusters are created correctly. For instance, Officer Johnson and Inspector Johnson should be normalized to Johnson before insertion.

- Even more important is normalization of amounts if you wish to run queries like “How many reports of fraud are there for amounts between 100,000 and 1 Million?”. For such a query, the LLM will correctly generate a Cypher condition like (amount > 100000 AND amount < 1000000). However, the amounts extracted from the document into the graph cluster are typically strings like ‘5 Million’, if that is how they appear in the document. Therefore, they must be normalized to numerical values before insertion.

- The nodes should have the document name as a property, so that grounding information (the source document) can be included in the result.

- Graph databases, such as Neo4j, provide an elegant, low-code way to construct, embed and retrieve information from a graph. But there are instances where the behavior is odd and inexplicable. For instance, during retrieval for some types of query where multiple report clusters are expected in the result, the LLM generates a perfectly well-formed Cypher query. When run in the Neo4j Browser, this query correctly fetches multiple report clusters; however, when run in the pipeline, it fetches only one.
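The name and amount normalisation points above can be sketched as plain functions applied before insertion. A minimal sketch, assuming the title list and unit multipliers shown (both hypothetical and corpus-specific), and that the input to `normalize_amount` is the raw amount string the LLM extracted, since the parser simply takes the first number it finds. Note that `str.title()` will mangle names such as ‘McDonald’, and that stripping titles can merge genuinely different people who share a name, so the rules are worth keeping conservative.

```python
import re
from decimal import Decimal
from typing import Optional

# Hypothetical titles/ranks to strip before using a person's name as a graph key.
TITLES = ["officer", "inspector", "constable", "sergeant", "mrs", "mr", "ms", "dr"]
LEADING_TITLES_RE = re.compile(r"^(?:(?:" + "|".join(TITLES) + r")\.?\s+)+", re.IGNORECASE)

# Hypothetical unit words and multipliers; lakh and crore are common in Indian reports.
MULTIPLIERS = {
    "thousand": 1_000, "k": 1_000, "lakh": 100_000,
    "million": 1_000_000, "m": 1_000_000, "mn": 1_000_000,
    "crore": 10_000_000, "billion": 1_000_000_000, "bn": 1_000_000_000,
}
AMOUNT_RE = re.compile(r"([\d,]*\.?\d+)\s*([a-z]+)?", re.IGNORECASE)

def normalize_person_name(raw: str) -> str:
    """'Inspector  JOHNSON' -> 'Johnson': collapse spaces, drop leading titles, fix case."""
    name = " ".join(raw.split())
    name = LEADING_TITLES_RE.sub("", name)
    return name.title()

def normalize_amount(text: str) -> Optional[float]:
    """'5 Million' -> 5000000.0; returns None when the string contains no number."""
    match = AMOUNT_RE.search(text)
    if match is None:
        return None
    value = Decimal(match.group(1).replace(",", ""))   # Decimal avoids float error while scaling
    unit = (match.group(2) or "").lower().rstrip("s")  # 'Millions' -> 'million'; unknown -> x1
    return float(value * MULTIPLIERS.get(unit, 1))
```

With amounts stored as numbers, the range condition generated for the fraud query compares numbers rather than strings, and normalised names let `MERGE` link the same person across Report clusters.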

Conclusion

Ultimately, a graph that represents each entity and all relations present in the document precisely and in detail, such that it is able to answer any and all queries of the user with equally great accuracy is quite likely a goal too expensive to build and maintain. Striking the right balance between complexity, time and cost will be a critical success factor in a GraphRAG project.

It should also be kept in mind that while RAG is for extracting insights from unstructured text, the complete profile of an entity is typically spread across structured (relational) databases too. For instance, a person’s address, phone number, and other details may be present in an enterprise database or even an ERP. Getting a full, detailed profile of an event may require using LLMs to query such databases through MCP agents and combining that information with RAG. But that’s a topic for another article.

What’s Next

While I focussed on the architecture and design aspects of GraphRAG in this article, I intend to address the technical implementation in the next one. It will include prompts, key code snippets and illustrations of the pipeline workings, results and limitations mentioned.

It is worthwhile to think of extending the GraphRAG pipeline to include multimodal information (images, tables, figures) for a complete user experience. Refer to my article on building a true multimodal RAG that returns images along with text.

Connect with me and share your comments at www.linkedin.com/in/partha-sarkar-lets-talk-AI
