Large language models (LLMs) are now more accessible than ever. Google’s Gemini API gives developers and creators a powerful, versatile tool. This guide explores practical applications you can build with the free Gemini API through several hands-on examples. You will learn key Gemini API prompting techniques, from simple queries to complex tasks, including zero-shot and few-shot prompting. You will also see how to use Gemini for tasks such as basic code generation, making your workflow more efficient.

How to Get Your Free Gemini API Key

Before we begin, you need to set up your environment. This process is simple and takes just a few minutes. You will need a Google AI Studio API key to start.
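One common way to keep the key out of your code is to read it from an environment variable. The sketch below is a minimal illustration, not part of the Gemini SDK: the helper `load_api_key` and the variable name `GEMINI_API_KEY` are assumptions made for this example.

```python
import os


def load_api_key(env=None, var_name="GEMINI_API_KEY"):
    """Return the API key stored in an environment variable.

    `var_name` is an assumed name for this sketch; use whatever name
    you exported the key under.
    """
    env = os.environ if env is None else env
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable first.")
    return key
```

The returned string can then be passed as the `api_key` argument when you create the client.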

First, install the necessary Python library. This package allows you to communicate with the Gemini API easily.

!pip install -U -q "google-genai>=1.0.0"

Next, configure your API key. You can get a free key from Google AI Studio. Store it securely and use the following code to initialize the client. This setup is the foundation for all the examples that follow.

from google import genai

# Make sure to secure your API key,
# for example by using userdata in Colab:
# from google.colab import userdata
# GOOGLE_API_KEY = userdata.get('GOOGLE_API_KEY')

client = genai.Client(api_key="YOUR_API_KEY")

# Swap in any model ID that is available to your key
MODEL_ID = "gemini-1.5-flash"

Part 1: Foundational Prompting Techniques

Let’s start with two fundamental Gemini API prompting techniques. These methods form the basis for more advanced interactions.

Technique 1: Zero-Shot Prompting for Quick Answers

Zero-shot prompting is the simplest way to interact with an LLM. You ask a question directly without providing any examples. This method works well for straightforward tasks where the model’s existing knowledge is sufficient. For effective zero-shot prompting, clarity is key: state the task and the allowed answers explicitly.

Let’s use it to classify the sentiment of a customer review.

Prompt:

prompt = """
Classify the sentiment of the following review as positive, negative, or neutral:
Review: "I go to this restaurant every week, I love it so much."
"""

response = client.models.generate_content(
    model=MODEL_ID,
    contents=prompt,
)

print(response.text)

This simple approach gets the job done quickly. It is one of the most common things you can do with the free Gemini API.
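Because a zero-shot reply is free-form text, it can help to map it back onto the labels you asked for before using it downstream. The following is a minimal sketch under that assumption; `normalize_sentiment` is a hypothetical helper written for this example and does not call the API.

```python
ALLOWED_LABELS = ("positive", "negative", "neutral")


def normalize_sentiment(reply):
    """Map a free-form model reply to one allowed label, or None."""
    text = reply.strip().lower()
    matches = [label for label in ALLOWED_LABELS if label in text]
    # Accept the reply only when exactly one label is mentioned.
    return matches[0] if len(matches) == 1 else None
```

Returning `None` for ambiguous replies lets the caller decide whether to retry or flag the item for review.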

Technique 2: Few-Shot Prompting for Custom Formats

Sometimes, you need the output in a specific format. Few-shot prompting guides the model by providing a few examples of input and expected output. This technique helps the model understand your requirements precisely.

Here, we’ll extract cities and their countries into a JSON format.

Prompt:

from google.genai import types
from IPython.display import Markdown, display

prompt = """
Extract cities from the text and include the country they are in.

USER: I visited Mexico City and Poznan last year
MODEL: {"Mexico City": "Mexico", "Poznan": "Poland"}

USER: She wanted to visit Lviv, Monaco and Maputo
MODEL: {"Lviv": "Ukraine", "Monaco": "Monaco", "Maputo": "Mozambique"}

USER: I am currently in Austin, but I will be moving to Lisbon soon
MODEL:
"""

# We also specify that the response should be JSON
config = types.GenerateContentConfig(response_mime_type="application/json")

response = client.models.generate_content(
    model=MODEL_ID,
    contents=prompt,
    config=config,
)

display(Markdown(f"```json\n{response.text}\n```"))

By providing examples, you teach the model the exact structure you want. This is a powerful step up from basic zero-shot prompting.
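When the examples live in your code rather than in a hand-written string, the few-shot prompt can be assembled programmatically and the JSON reply checked before use. This is a rough sketch: `build_few_shot_prompt` and `parse_city_map` are hypothetical helpers for illustration, and the `USER:`/`MODEL:` layout simply mirrors the prompt above.

```python
import json


def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, worked examples, and an open final turn."""
    lines = [instruction, ""]
    for user_text, expected in examples:
        lines.append(f"USER: {user_text}")
        lines.append(f"MODEL: {json.dumps(expected)}")
        lines.append("")
    lines.append(f"USER: {query}")
    lines.append("MODEL:")
    return "\n".join(lines)


def parse_city_map(reply):
    """Parse the reply as a JSON object mapping city to country."""
    data = json.loads(reply)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    return data


examples = [
    ("I visited Mexico City and Poznan last year",
     {"Mexico City": "Mexico", "Poznan": "Poland"}),
]
prompt = build_few_shot_prompt(
    "Extract cities from the text and include the country they are in.",
    examples,
    "I am currently in Austin, but I will be moving to Lisbon soon",
)
```

Serializing the examples with `json.dumps` keeps them valid JSON, so the pattern the model sees matches the format you later parse.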

Part 2: Guiding the Model’s Behavior and Knowledge

You can control the model’s persona and provide it with specific knowledge. These Gemini API prompting techniques make your interactions more targeted.

Technique 3: Role Prompting to Define a Persona

You can assign a role to the model to influence its tone and style. This makes the response feel more authentic and tailored to a specific context.

Let’s ask for museum recommendations from the perspective of a German tour guide.

Prompt:

from google.genai import types
from IPython.display import Markdown, display

system_instruction = """
You are a German tour guide. Your task is to give recommendations
to people visiting your country.
"""

prompt = "Could you give me some recommendations on art museums in Berlin and Cologne?"

# The system instruction is passed through the request config
response = client.models.generate_content(
    model=MODEL_ID,
    contents=prompt,
    config=types.GenerateContentConfig(system_instruction=system_instruction),
)

display(Markdown(response.text))

The model adopts the persona, making the response more engaging and helpful.

Technique 4: Adding Context to Answer Niche Questions

LLMs don’t know everything. You can provide specific information in the prompt to help the model answer questions about new or private data. This is a core concept behind Retrieval-Augmented Generation (RAG).

Here, we give the model a table of Olympic athletes to answer a specific query.

Prompt:

from IPython.display import Markdown, display

prompt = """
QUERY: Provide a list of athletes that competed in the Olympics exactly 9 times.
CONTEXT:
Table title: Olympic athletes and number of times they've competed
Ian Millar, 10
Hubert Raudaschl, 9
Afanasijs Kuzmins, 9
Nino Salukvadze, 9
Piero d'Inzeo, 8
"""

response = client.models.generate_content(
    model=MODEL_ID,
    contents=prompt,
)

display(Markdown(response.text))

The model uses only the provided context to give an accurate answer.
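If the context comes from structured data, you can build the prompt from that data and also compute a reference answer to compare against the model's reply. The sketch below assumes the table is held as a list of `(name, count)` tuples; both helper functions are hypothetical and written only for this illustration.

```python
def build_context_prompt(query, table_title, rows):
    """Place the question first, then the supporting table as plain text."""
    table = "\n".join(f"{name}, {count}" for name, count in rows)
    return f"QUERY: {query}\nCONTEXT:\nTable title: {table_title}\n{table}\n"


def athletes_with_appearances(rows, target):
    """Deterministic reference answer to compare against the model's reply."""
    return [name for name, count in rows if count == target]


rows = [
    ("Ian Millar", 10),
    ("Hubert Raudaschl", 9),
    ("Afanasijs Kuzmins", 9),
    ("Nino Salukvadze", 9),
    ("Piero d'Inzeo", 8),
]
prompt = build_context_prompt(
    "Provide a list of athletes that competed in the Olympics exactly 9 times.",
    "Olympic athletes and number of times they've competed",
    rows,
)
expected = athletes_with_appearances(rows, 9)
```

Here `expected` holds the three athletes listed with 9 appearances, which gives you a simple check on whether the model stayed within the supplied context.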

Technique 5: Providing Base Cases for Clear Boundaries

It is important to define how a model should behave when it cannot fulfill a request. Providing base cases or default responses prevents unexpected or off-topic answers.

Let’s create a vacation assistant with a limited set of responsibilities.

Prompt:

from google.genai import types
from IPython.display import Markdown, display

system_instruction = """
You are an assistant that helps tourists plan their vacation. Your responsibilities are:
1. Helping book the hotel.
2. Recommending restaurants.
3. Warning about potential dangers.

If another request is asked, return "I cannot help with this request."
"""

# Reuse the same rules for every request
rules_config = types.GenerateContentConfig(system_instruction=system_instruction)

# On-topic request
response_on_topic = client.models.generate_content(
    model=MODEL_ID,
    contents="What should I look out for on the beaches in San Diego?",
    config=rules_config,
)
print("--- ON-TOPIC REQUEST ---")
display(Markdown(response_on_topic.text))

# Off-topic request
response_off_topic = client.models.generate_content(
    model=MODEL_ID,
    contents="What bowling places do you recommend in Moscow?",
    config=rules_config,
)
print("\n--- OFF-TOPIC REQUEST ---")
display(Markdown(response_off_topic.text))
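A fixed default response is also easy to detect in code, so an application can route declined requests elsewhere. The following is a minimal sketch; `is_fallback` is a hypothetical helper for this example, and it assumes the model returns the default sentence with at most minor differences in whitespace, quoting, or the final period.

```python
FALLBACK = "I cannot help with this request."


def _normalize(text):
    """Collapse whitespace, drop surrounding quotes and a trailing period."""
    collapsed = " ".join(text.split())
    return collapsed.strip("\"'").rstrip(".").lower()


def is_fallback(reply, fallback=FALLBACK):
    """True when the reply is the configured default response."""
    return _normalize(reply) == _normalize(fallback)
```

An exact-match check like this is deliberately strict: a reply that adds extra explanation is treated as a normal answer rather than a refusal.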

Part 3: Unlocking Advanced Reasoning

For complex problems, you need to guide the model’s thinking process. These reasoning techniques improve accuracy for multi-step tasks.

Technique 6: Basic Reasoning for Step-by-Step Solutions

You can instruct the model to break down a problem and explain its steps. This is useful for mathematical or logical problems where the process is as important as the answer.

Here, we ask the model to solve for the area of a triangle and show its work.

Prompt:

from google.genai import types
from IPython.display import Markdown, display

system_instruction = """
You are a teacher solving mathematical problems. Your task:
1. Summarize given conditions.
2. Identify the problem.
3. Provide a clear, step-by-step solution.
4. Provide an explanation for each step.
"""

math_problem = "Given a triangle with base b=6 and height h=8, calculate its area."

response = client.models.generate_content(
    model=MODEL_ID,
    contents=math_problem,
    config=types.GenerateContentConfig(system_instruction=system_instruction),
)

display(Markdown(response.text))

Output:

This structured output is clear and easy to follow.

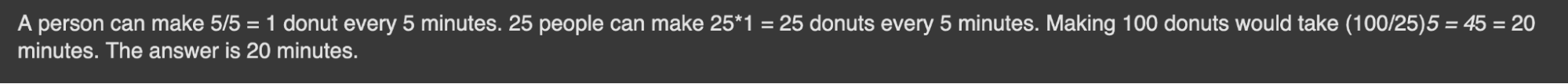

Technique 7: Chain-of-Thought for Complex Problems

Chain-of-thought (CoT) prompting encourages the model to think step by step. Research from Google shows that this significantly improves performance on complex reasoning tasks. Instead of just giving the final answer, the model explains its reasoning path.

Let’s solve a logic puzzle using CoT.

Prompt:

from IPython.display import Markdown, display

prompt = """
Question: 11 factories can make 22 cars per hour. How much time would it take 22 factories to make 88 cars?
Answer: A factory can make 22/11=2 cars per hour. 22 factories can make 22*2=44 cars per hour. Making 88 cars would take 88/44=2 hours. The answer is 2 hours.

Question: 5 people can create 5 donuts every 5 minutes. How much time would it take 25 people to make 100 donuts?
Answer:
"""

response = client.models.generate_content(
    model=MODEL_ID,
    contents=prompt,
)

display(Markdown(response.text))

Output:

The model follows the pattern, breaking the problem down into logical steps.

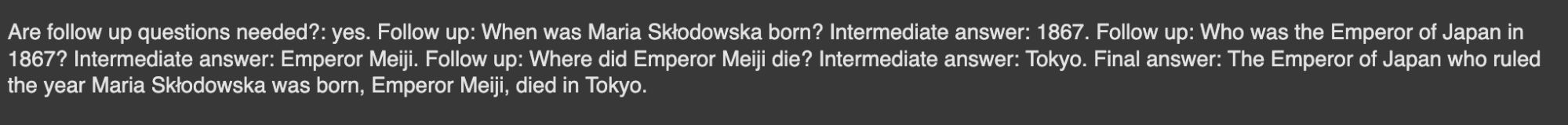
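For puzzles like these, the reasoning chain can be checked independently with a few lines of arithmetic. The sketch below follows the same steps as the worked example in the prompt (rate per worker, then total rate, then time); `time_needed` is a hypothetical helper written for this illustration.

```python
from fractions import Fraction


def time_needed(base_workers, base_items, base_time, workers, items):
    """Time for `workers` to make `items`, given a reference production rate."""
    # Rate of a single worker: items per unit of time.
    rate_per_worker = Fraction(base_items, base_workers * base_time)
    # The total rate scales linearly with the number of workers.
    total_rate = rate_per_worker * workers
    return Fraction(items) / total_rate


# 11 factories make 22 cars per hour; 22 factories making 88 cars
factories_hours = time_needed(11, 22, 1, 22, 88)

# 5 people make 5 donuts every 5 minutes; 25 people making 100 donuts
donut_minutes = time_needed(5, 5, 5, 25, 100)
```

The first call reproduces the 2 hours from the example answer, and the second gives 20 minutes for the donut question, which is the value the model's step-by-step answer should reach.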

Technique 8: Self-Ask Prompting to Deconstruct Questions

Self-ask prompting is similar to CoT. The model breaks down a main question into smaller, follow-up questions. It answers each sub-question before arriving at the final answer.

Let’s use this to solve a multi-part historical question.

Prompt:

from IPython.display import Markdown, display

prompt = """
Question: Who was the president of the united states when Mozart died?
Are follow up questions needed?: yes.
Follow up: When did Mozart die?
Intermediate answer: 1791.
Follow up: Who was the president of the united states in 1791?
Intermediate answer: George Washington.
Final answer: When Mozart died George Washington was the president of the USA.

Question: Where did the Emperor of Japan, who ruled the year Maria Skłodowska was born, die?
"""

response = client.models.generate_content(
    model=MODEL_ID,
    contents=prompt,
)

display(Markdown(response.text))

This structured thinking process helps ensure accuracy for complex queries.
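Since the self-ask format ends with a labeled line, the final answer can be pulled out of the reply without the intermediate steps. This is a minimal sketch that assumes the model keeps the plain `Final answer:` label from the example; a reply that formats the label differently (for instance in bold) would need a looser match. `extract_final_answer` is a hypothetical helper.

```python
def extract_final_answer(reply):
    """Return the text after the last 'Final answer:' marker, or None."""
    marker = "final answer:"
    for line in reversed(reply.splitlines()):
        stripped = line.strip()
        if stripped.lower().startswith(marker):
            return stripped[len(marker):].strip()
    return None


# The worked example from the prompt, used here as sample input
sample = """Are follow up questions needed?: yes.
Follow up: When did Mozart die?
Intermediate answer: 1791.
Follow up: Who was the president of the united states in 1791?
Intermediate answer: George Washington.
Final answer: When Mozart died George Washington was the president of the USA."""

answer = extract_final_answer(sample)
```

Keeping the intermediate lines available, rather than discarding them, is still useful for auditing how the model reached its answer.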

Part 4: Real-World Applications with Gemini

Now let’s see how these techniques apply to common real-world tasks. These examples show the wide variety of things you can do with the free Gemini API.

Technique 9: Classifying Text for Moderation and Analysis

You can use Gemini to automatically classify text. This is useful for tasks like content moderation, where you need to identify spam or abusive comments. Few-shot prompting helps the model learn your specific categories.

Prompt:

from IPython.display import Markdown, display

classification_template = """
Topic: Where can I buy a cheap phone?
Comment: You have just won an IPhone 15 Pro Max!!! Click the link!!!
Class: Spam

Topic: How long do you boil eggs?
Comment: Are you stupid?
Class: Offensive

Topic: {topic}
Comment: {comment}
Class:
"""

spam_topic = "I am looking for a vet in our neighbourhood."
spam_comment = "You can win 1000$ by just following me!"

# Fill the template with the comment we want to classify
spam_prompt = classification_template.format(topic=spam_topic, comment=spam_comment)

response = client.models.generate_content(
    model=MODEL_ID,
    contents=spam_prompt,
)

display(Markdown(response.text))

Output:

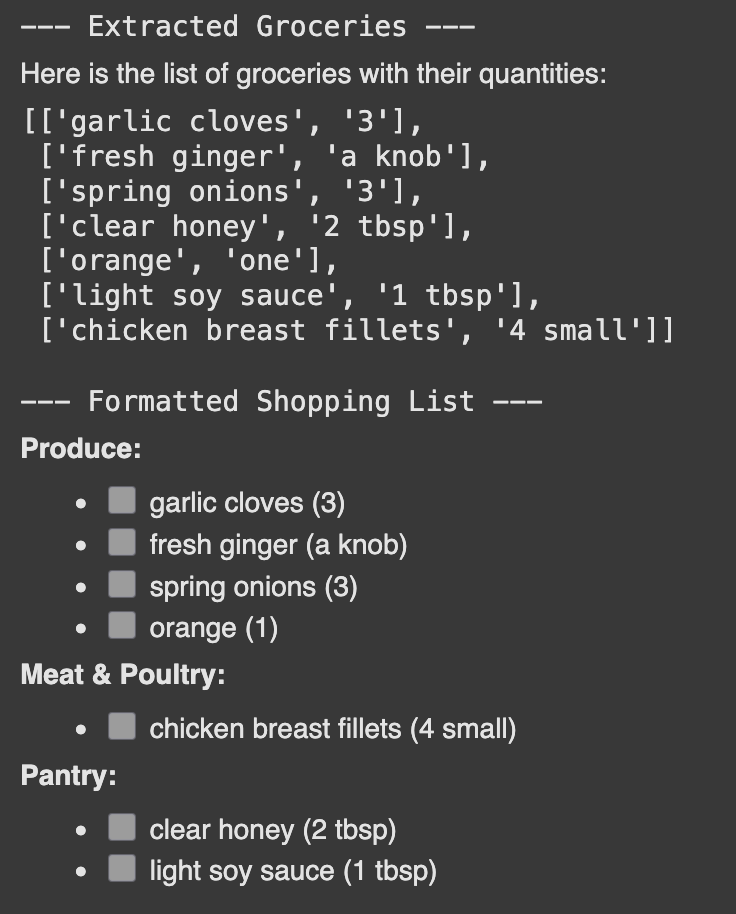

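Technique 10: Extracting Information from Unstructured Text

Gemini can extract specific pieces of information from unstructured text. This helps you convert plain text, like a recipe, into a structured format, like a shopping list. The example below chains two calls: the first extracts the groceries, and the second formats them.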

Prompt:

from google.genai import types
from IPython.display import Markdown, display

recipe = """
Grind 3 garlic cloves, a knob of fresh ginger, and 3 spring onions to a paste.
Add 2 tbsp of clear honey, juice from one orange, and 1 tbsp of light soy sauce.
Pour the mixture over 4 small chicken breast fillets.
"""

# Step 1: Extract the list of groceries
extraction_prompt = f"""
Your task is to extract a list of all the groceries with their quantities based on the provided recipe.
Make sure that groceries are in the order of appearance.
Recipe:{recipe}
"""

extraction_response = client.models.generate_content(
    model=MODEL_ID,
    contents=extraction_prompt,
)
grocery_list = extraction_response.text

print("--- Extracted Groceries ---")
display(Markdown(grocery_list))

# Step 2: Format the extracted list into a shopping list
formatting_system_instruction = "Organize groceries into categories for easier shopping. List each item with a checkbox []."

formatting_prompt = f"""
LIST: {grocery_list}
OUTPUT:
"""

formatting_response = client.models.generate_content(
    model=MODEL_ID,
    contents=formatting_prompt,
    config=types.GenerateContentConfig(system_instruction=formatting_system_instruction),
)

print("\n--- Formatted Shopping List ---")
display(Markdown(formatting_response.text))

Output:

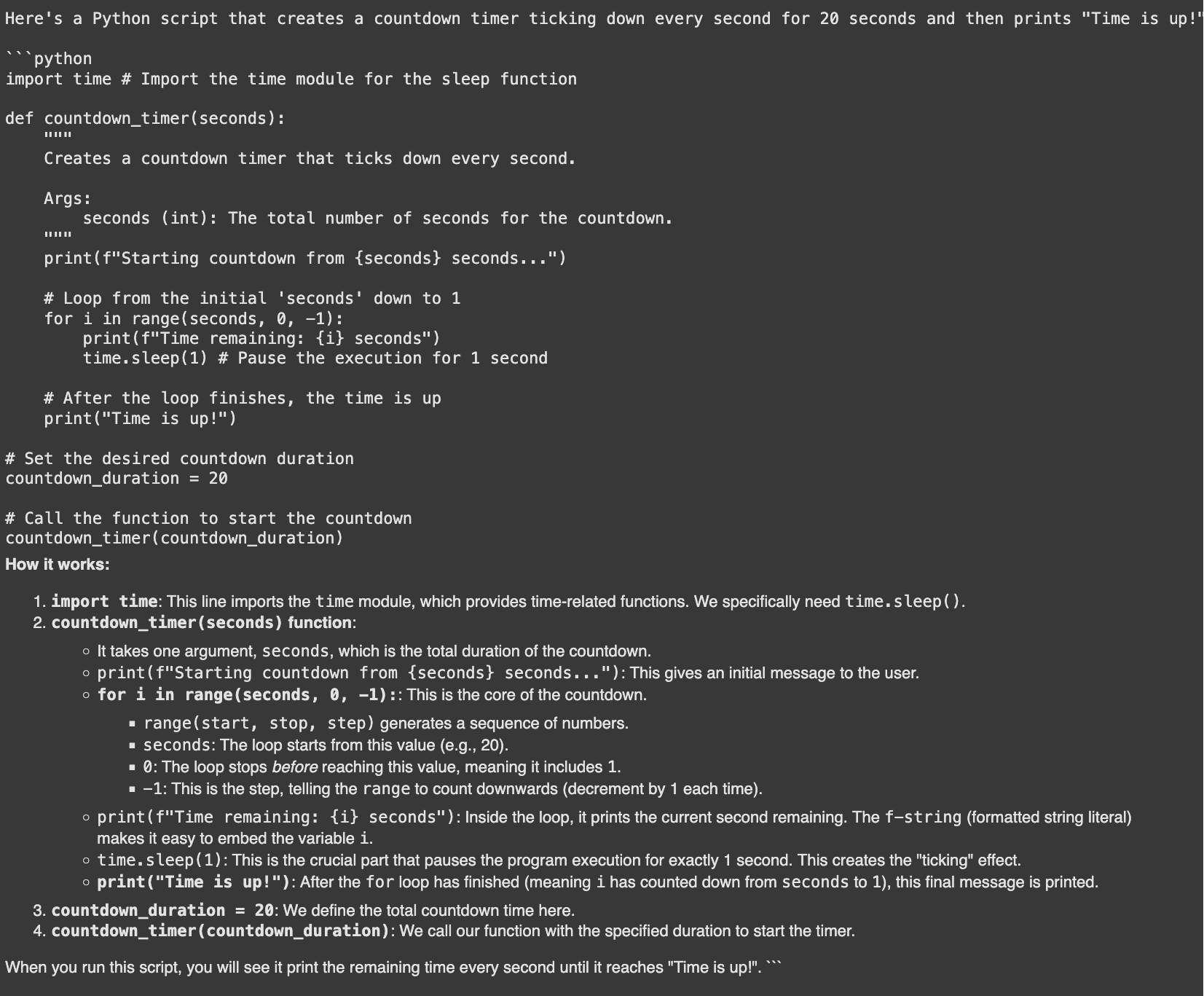
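To use the formatted shopping list in a program, the checkbox lines can be parsed back into Python data. The sketch below is only an illustration: it assumes each item sits on its own line and starts with `[]`, `[ ]`, or `[x]`, optionally after a `-` or `*` bullet, which may not match every reply. `parse_checklist` is a hypothetical helper.

```python
import re

# Optional bullet, then a checkbox, then the item text
CHECKBOX_LINE = re.compile(r"^\s*(?:[-*]\s*)?\[( |x|X)?\]\s*(.+?)\s*$")


def parse_checklist(text):
    """Return (item, checked) pairs for lines that start with a checkbox."""
    items = []
    for line in text.splitlines():
        match = CHECKBOX_LINE.match(line)
        if match:
            checked = (match.group(1) or "").lower() == "x"
            items.append((match.group(2), checked))
    return items
```

Lines without a checkbox, such as category headings, are skipped, so the result contains only the items themselves.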

Technique 11: Basic Code Generation and Debugging

Another powerful application is basic code generation. Gemini can write code snippets, explain errors, and suggest fixes. A GitHub study of its Copilot tool found that developers completed a coding task up to 55% faster with AI assistance.

Let’s ask Gemini to generate a Python script for a countdown timer.

Prompt:

from IPython.display import Markdown, display

code_generation_prompt = """
Create a countdown timer in Python that ticks down every second and prints
"Time is up!" after 20 seconds.
"""

response = client.models.generate_content(
    model=MODEL_ID,
    contents=code_generation_prompt,
)

display(Markdown(f"```python\n{response.text}\n```"))

Output:

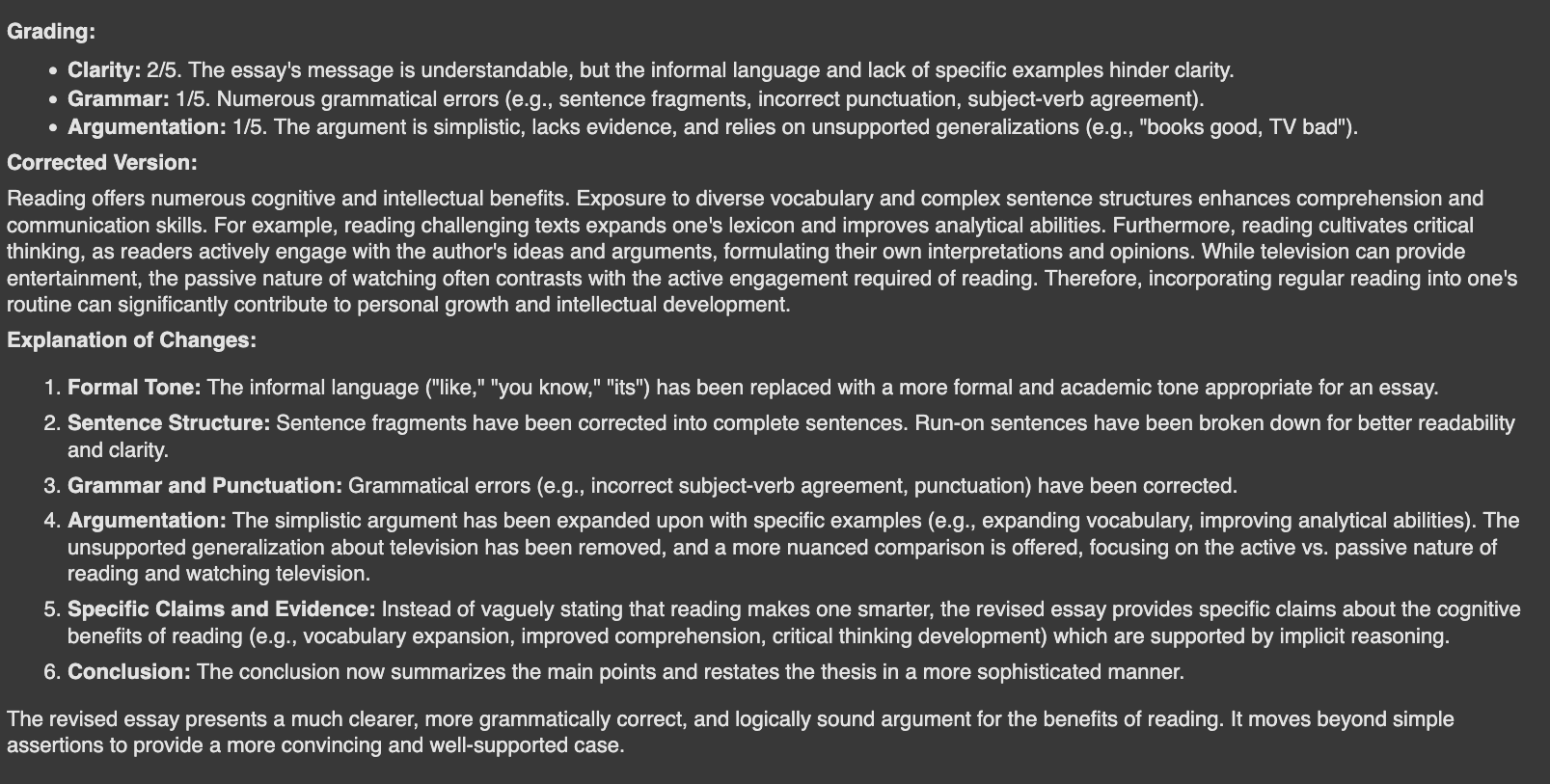
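If the reply already arrives wrapped in its own Markdown code fence, wrapping it in a second one produces nested fences, and saving it to a file would include the fence lines. A small helper can remove one surrounding fence first. This is a sketch under that assumption; `strip_code_fence` is a hypothetical function and handles only a single fence around the whole reply.

```python
FENCE = "`" * 3  # the three-backtick Markdown fence marker


def strip_code_fence(reply):
    """Remove one surrounding Markdown code fence, if the reply has one."""
    lines = reply.strip().splitlines()
    has_fence = (
        len(lines) >= 2
        and lines[0].startswith(FENCE)
        and lines[-1].strip() == FENCE
    )
    if has_fence:
        lines = lines[1:-1]
    return "\n".join(lines)
```

Always review generated code before running it; stripping the fence only changes the formatting, not the content.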

Technique 12: Evaluating Text for Quality Assurance

You can even use Gemini to evaluate text. This is useful for grading, providing feedback, or ensuring content quality. Here, we’ll have the model act as a teacher and grade a poorly written essay.

Prompt:

from google.genai import types
from IPython.display import Markdown, display

teacher_system_instruction = """
As a teacher, you are tasked with grading a student's essay.
1. Evaluate the essay on a scale of 1-5 for clarity, grammar, and argumentation.
2. Write a corrected version of the essay.
3. Explain the changes made.
"""

essay = "Reading is like, a really good thing. It’s beneficial, you know? Like, a lot. When you read, you learn new words and that's good. Its like a secret code to being smart. I read a book last week and I know it was making me smarter. Therefore, books good, TV bad. So everyone should read more."

response = client.models.generate_content(
    model=MODEL_ID,
    contents=essay,
    config=types.GenerateContentConfig(system_instruction=teacher_system_instruction),
)

display(Markdown(response.text))

Conclusion

The Gemini API is a flexible and powerful resource. We have explored a wide range of things you can do with the free Gemini API, from simple questions to advanced reasoning and code generation. By mastering these Gemini API prompting techniques, you can build smarter, more efficient applications. The key is to experiment. Use these examples as a starting point for your own projects and discover what you can create.

Frequently Asked Questions

Q1. What can you do with the free Gemini API?

A. You can perform text classification, information extraction, and summarization. It is also excellent for tasks like basic code generation and complex reasoning.

Q2. What is the difference between zero-shot and few-shot prompting?

A. Zero-shot prompting involves asking a question directly without examples. Few-shot prompting provides the model with a few examples to guide its response format and style.

Q3. Can Gemini generate code?

A. Yes, Gemini can generate code snippets, explain programming concepts, and debug errors. This helps accelerate the development process for many programmers.

Q4. Why assign a role to the model?

A. Assigning a role gives the model a specific persona and context. This results in responses that have a more appropriate tone, style, and domain knowledge.

Q5. What is chain-of-thought prompting?

A. It is a technique where the model breaks down a problem into smaller, sequential steps. This helps improve accuracy on complex tasks that require reasoning.
