It’s fair to say that September has been the month of Gemini Nano Banana. Everyone has been playing around with the model, posting quirky edits and fun selfies on social media. Chances are you tried it too, or at least scrolled past a dozen of those celebrity mashups and 3D figurine posts in your feed.

But while Nano Banana has dominated the casual side of image generation, Qwen has been quietly leveling up the professional editing game. Just last month, the team released Qwen-Image-Edit, and now they’re back with an upgraded version, Qwen-Image-Edit-2509, which focuses on consistency, flexibility, and control. Compared to the August release, the new update makes editing more powerful for creators, developers, and researchers, while staying accessible to anyone who wants to experiment.

If you want to know more about the previous model, read our detailed article on Qwen-Image-Edit!

What’s New in Qwen-Image-Edit-2509?

Compared to last month’s release, this version introduces four major improvements:

Multi-image Editing Support

Qwen-Image-Edit-2509 now allows you to edit across multiple images simultaneously. The model has been trained using image concatenation, enabling seamless edits across combinations like:

- person + person

- person + product

- person + scene

It works best with 1–3 input images, and also integrates well with ControlNet maps such as keypoints for pose changes.
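To make the idea of image concatenation a little more concrete, here is a minimal sketch in plain Python. It is only an illustration: the article's sources do not describe how Qwen actually joins images during training, so the side-by-side layout, the `concat_horizontal` helper, the grayscale pixel grids, and the bottom padding are all my assumptions. The one detail taken from the release notes is the 1–3 image limit.

```python
from typing import List

Pixel = int                # grayscale value 0-255 (a simplification)
Image = List[List[Pixel]]  # rows of pixels

MAX_INPUTS = 3  # the update is reported to work best with 1-3 input images


def concat_horizontal(images: List[Image], pad: Pixel = 0) -> Image:
    """Stitch non-empty images left to right, padding shorter ones at the bottom."""
    if not 1 <= len(images) <= MAX_INPUTS:
        raise ValueError(f"expected 1-{MAX_INPUTS} images, got {len(images)}")
    height = max(len(img) for img in images)
    canvas: Image = []
    for y in range(height):
        row: List[Pixel] = []
        for img in images:
            width = len(img[0])
            # Use the real row if this image is tall enough, else a padded row.
            row.extend(img[y] if y < len(img) else [pad] * width)
        canvas.append(row)
    return canvas


# Hypothetical toy inputs standing in for a person photo and a product photo.
person = [[1, 1], [1, 1], [1, 1]]   # 3 rows x 2 columns
product = [[9, 9, 9], [9, 9, 9]]    # 2 rows x 3 columns
combined = concat_horizontal([person, product])
for row in combined:
    print(row)
```

The point is simply that several inputs end up on one shared canvas, which is why a single prompt can refer to "image A" and "image B" together.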

Enhanced Single-image Consistency

When editing a single image, the September update focuses heavily on consistency:

- Person Editing: Faces retain their identity across different poses and portrait styles.

- Product Editing: Logos and objects maintain their look, making product posters easier to generate.

- Text Editing: Not only can you change the words, but you can also adjust fonts, colors, and materials with precision.

Native ControlNet Support

The update adds smooth integration with ControlNet inputs like depth maps, edge maps, and keypoint maps, expanding creative control for technical and artistic use cases.
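If you have never seen a control map, an edge map is the easiest one to picture: an image where only the outlines are lit up. The sketch below builds one from a tiny grayscale grid using a simple neighbor-difference threshold. This is a simplified stand-in I wrote for illustration, not the preprocessing Qwen uses; real pipelines typically rely on a proper edge detector such as Canny. The `edge_map` function and its `threshold` parameter are hypothetical names.

```python
from typing import List

Gray = List[List[int]]  # rows of grayscale values, 0-255


def edge_map(image: Gray, threshold: int = 50) -> Gray:
    """Mark pixels whose jump from the left or upper neighbor exceeds threshold."""
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Border pixels have no left/upper neighbor, so their jump is 0.
            dx = abs(image[y][x] - image[y][x - 1]) if x > 0 else 0
            dy = abs(image[y][x] - image[y - 1][x]) if y > 0 else 0
            if max(dx, dy) > threshold:
                edges[y][x] = 255
    return edges


# Toy image: dark left half, bright right half.
image = [
    [0, 0, 200, 200],
    [0, 0, 200, 200],
    [0, 0, 200, 200],
]
edges = edge_map(image)
for row in edges:
    print(row)
```

Only the column where dark meets bright is marked, which is the kind of structural hint a ControlNet-style input hands to the model.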

Improved Text + Image Integration

Qwen-Image-Edit-2509 can now blend text editing seamlessly with image manipulation. For example, you can design a poster where fonts, styles, and visuals interact consistently.

Hands-On Prompts to Try with Qwen-Image-Edit-2509

I ran every task below on both Qwen-Image-Edit-2509 and Nano Banana, using the same prompt and inputs, to compare the outputs and see how Qwen performs on each one.

Task 1: Person + Person

Prompt: “Use image A and image B. Merge them into one photo where both people sit on a park bench. Preserve face identity and natural lighting.”

The new multi-image feature of Qwen-Image-Edit still struggles to keep facial features intact. As you can see in the comparison below, Nano Banana did a better job of retaining the faces of both people. Qwen also altered the bag from the original image, while Nano Banana kept it unchanged.

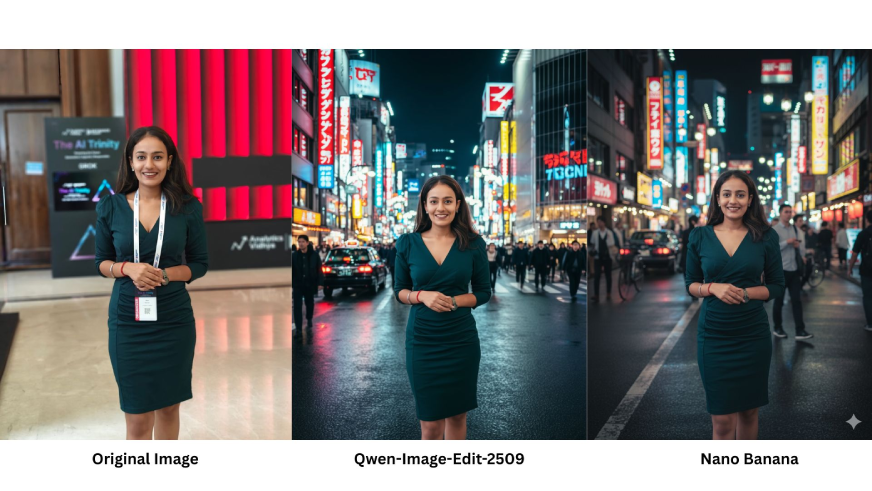

Task 2: Person + Scene

Prompt: “Place this portrait into a busy Tokyo street at night with neon signs. Keep the face identity and correct skin tone.”

Qwen did a great job of retaining the facial expression and overall image quality, and the lighting and overall blend look good. However, Nano Banana went one step further and adjusted the lighting on the person to match the neon background, which makes the result look more realistic.

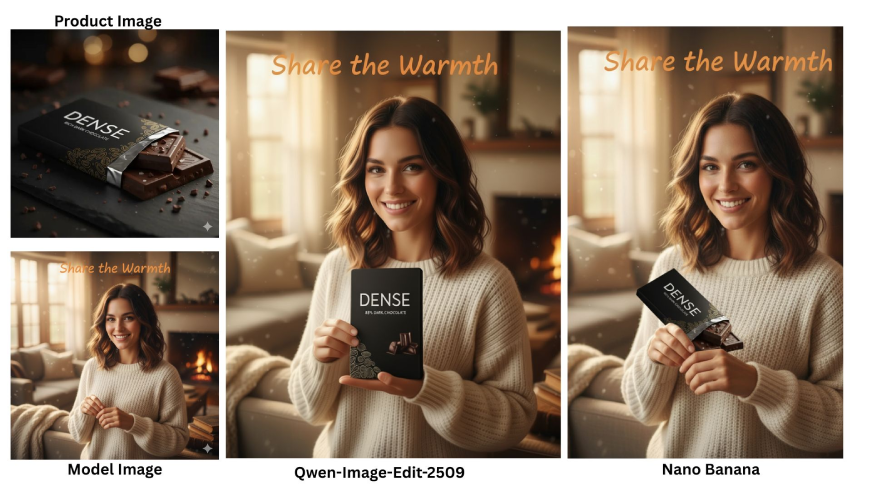

Task 3: Person + Product Ad

Prompt: “Use this portrait and this product image. Make it look like an ad where the person holds the product. Keep product label readable.”

For this task, I gave both models the same two images (the person and the product) and the same prompt. The output from Qwen looks better: Nano Banana simply placed the two photos side by side without really combining them.

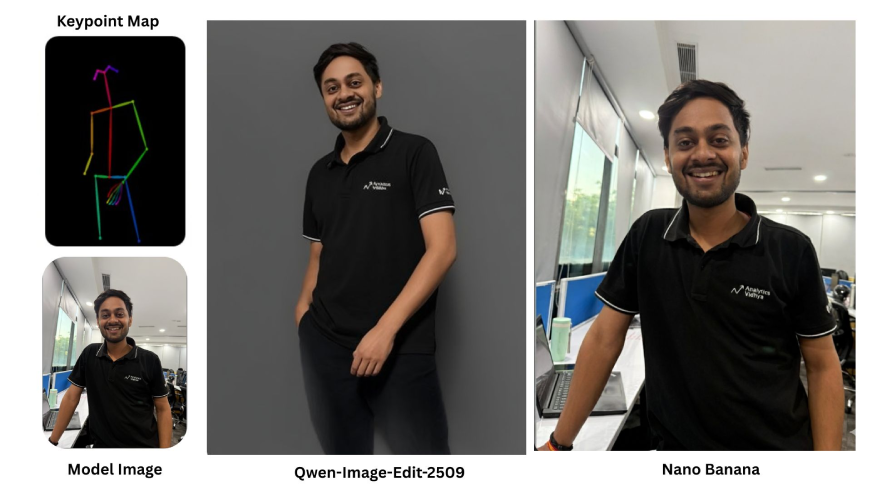

Task 4: Pose Control with Keypoint Map

The following images show how a keypoint pose map taken from one image is used to transfer a specific posture to a person in a different image while preserving their facial identity. The examples also show how pose transfer can be combined with other complex edits, such as changing the background and manipulating objects.

I have used this new feature of Qwen in the following prompt:

Prompt: “Use this portrait and this keypoint pose map. Repose the person to match the map while keeping facial identity.”

Qwen-Image-Edit has explicit support for keypoint-guided editing. That’s why the result matched my keypoint overlay so closely: the model reads the keypoint map and conditions the generation on it. If you want to play with poses, angles, posture, or interactions with landmarks (like the Eiffel Tower, Qutub Minar, Taj Mahal, or Akshardham), you should stick with Qwen-Image-Edit or another model that advertises “pose control” or “keypoint conditioning”.

Nano Banana, by contrast, does not appear to support keypoint-map conditioning. In my test it simply returned the input image unchanged. It can handle style, texture, and background edits, but it did not parse or enforce the pose map.
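For readers wondering what a keypoint pose map actually contains, here is a rough sketch: a handful of named joints plus the limbs that connect them, drawn onto an otherwise empty canvas. Everything in it is hypothetical and chosen for readability, including the five-joint skeleton, the coordinates, and the `render_pose_map` helper. Real pose formats use many more joints, and this is not the format Qwen expects, just the general shape of the idea.

```python
from typing import Dict, List, Tuple

Point = Tuple[int, int]  # (x, y) in pixel coordinates

# Hypothetical five-joint skeleton, for illustration only.
KEYPOINTS: Dict[str, Point] = {
    "head": (4, 0),
    "neck": (4, 2),
    "hip": (4, 5),
    "left_hand": (1, 3),
    "right_hand": (7, 3),
}
LIMBS: List[Tuple[str, str]] = [
    ("head", "neck"),
    ("neck", "hip"),
    ("neck", "left_hand"),
    ("neck", "right_hand"),
]


def draw_line(grid: List[List[int]], a: Point, b: Point) -> None:
    """Mark the cells on a straight line from a to b using linear interpolation."""
    (x0, y0), (x1, y1) = a, b
    steps = max(abs(x1 - x0), abs(y1 - y0), 1)
    for i in range(steps + 1):
        x = round(x0 + (x1 - x0) * i / steps)
        y = round(y0 + (y1 - y0) * i / steps)
        grid[y][x] = 1


def render_pose_map(keypoints: Dict[str, Point], limbs: List[Tuple[str, str]],
                    width: int, height: int) -> List[List[int]]:
    """Return a grid with 0 = empty, 1 = limb, 2 = joint."""
    grid = [[0] * width for _ in range(height)]
    for start, end in limbs:
        draw_line(grid, keypoints[start], keypoints[end])
    for x, y in keypoints.values():
        grid[y][x] = 2  # joints are drawn last so they stay visible
    return grid


pose_map = render_pose_map(KEYPOINTS, LIMBS, width=9, height=6)
for row in pose_map:
    print("".join(".-o"[cell] for cell in row))
```

A model with keypoint conditioning takes a map like this as a second input and arranges the person to match it, which is the behavior described in this task.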

Task 5: Adding Text to Image

Prompt: “The girl in the uploaded image is holding a chalk board with the text “Join my GenAI Masterclass to Become an Expert in 2025” written on it”

Between the two responses, Qwen’s output is clearly better. In the Qwen version, the text sits naturally on the board, aligned properly with the perspective, and looks like it actually belongs on a chalkboard. The font style is consistent, clean, and highly readable, which makes the message come across more clearly. The text blends seamlessly with the board surface, so the final result feels authentic and realistic.

On the other hand, Nano Banana’s response looks more like a digital overlay. The text alignment doesn’t fully match the angle of the board, and the font looks less like chalk writing and more like standard digital text. As a result, the text feels pasted on top of the board rather than integrated into it.

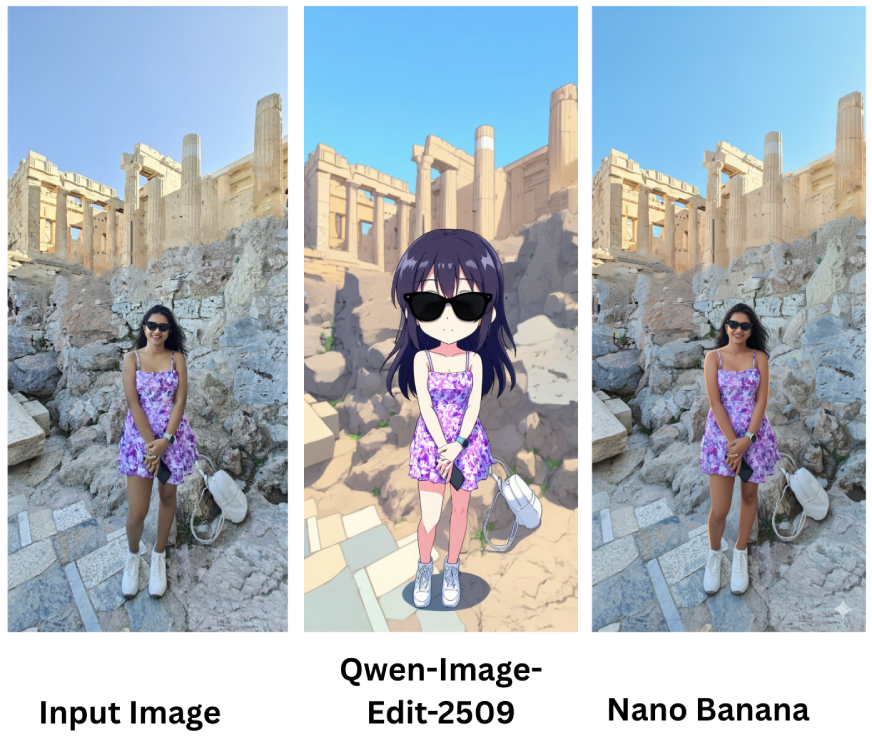

Task 6: Cartoon or Anime Variant

Prompt: “Turn this picture into an anime style character while preserving the same face identity and expression.”

Between the two results, Qwen-Image-Edit-2509’s version is clearly better. It fully transforms the person into an anime-style character while retaining the same outfit, pose, and expression, which makes it faithful to the prompt. Nano Banana’s version looks almost identical to the input photo, with very little anime stylization. It doesn’t really fulfill the “anime character” requirement and feels more like a light retouch than a transformation.

Task 7: Product Poster

Prompt: “Make a clean marketable poster for this shoe. Use a plain background. Add the product name at the bottom in bold type saying – SoulShoe”

Between the two, Qwen’s poster is better because it goes beyond a plain showcase and actually markets the product. The glowing effect makes the shoes stand out, the tagline “Where Comfort Meets Style” adds emotional appeal, and the “Limited Edition” badge creates urgency. Together, these make it look like a polished ad campaign. Nano Banana’s output, though clean and professional, feels more like a simple catalog image than a marketable poster.

My Verdict

Nano Banana is fun, accessible, and instantly shareable, which makes it perfect for memes, selfies, and social trends. Qwen, on the other hand, is structured, versatile, and production-ready, making it the better choice for creators, designers, and professionals who want reliability in outputs.
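For anyone who wants the score at a glance, the per-task outcomes described above can be tallied in a few lines. The winners below are my own subjective calls from the seven tasks in this article, not benchmark results, and the dictionary layout is just a convenient way to record them.

```python
from collections import Counter

# Winner per task, as judged in the write-ups above (subjective calls).
results = {
    "Task 1: Person + Person": "Nano Banana",
    "Task 2: Person + Scene": "Nano Banana",
    "Task 3: Person + Product Ad": "Qwen-Image-Edit-2509",
    "Task 4: Pose Control with Keypoint Map": "Qwen-Image-Edit-2509",
    "Task 5: Adding Text to Image": "Qwen-Image-Edit-2509",
    "Task 6: Cartoon or Anime Variant": "Qwen-Image-Edit-2509",
    "Task 7: Product Poster": "Qwen-Image-Edit-2509",
}

tally = Counter(results.values())
for model, wins in tally.most_common():
    print(f"{model}: {wins} of {len(results)} tasks")
```

Nano Banana’s two wins both come from the tasks that depend most on preserving and relighting real faces, which is worth keeping in mind if that is your main use case.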

Conclusion

AI image models are improving month by month. August gave us the first Qwen-Image-Edit. September has given us this new version with multi-image support and better consistency. Next month, who knows what will come? If you love experimenting, open Qwen Chat and try the prompts I shared. Start with something simple, like placing yourself in a new background, then move on to multi-image edits and posters.

I will keep testing and sharing what works. If you try any of these prompts, let me know what results you got. Sometimes the fun is in the surprises.
