In the past year, searches for “AI video maker” have skyrocketed by over 200%. That is hardly surprising, as creators everywhere are looking for faster, smarter ways to turn ideas into visuals. After all, the whole point of such AI tools is to give everyone creative freedom, whether or not they have a video-making background. Into this wave comes Luma AI Ray3, the newest video-generation model from Luma Labs.

Positioned as a “visual thought partner,” Ray3 is built to help creators ideate, storyboard, and refine their videos within a single interface. At least, that is the company's stated goal in its launch announcement. Powered by Ray3 for video and Photon for images, the system is meant to take video creation from experimentation to execution, all in minutes.

I tried my hand at the new Ray3. Here, I share what it offers, my experience with it, and how you can try it out for yourself. Read on!

What is Luma AI Ray3?

At its core, Luma AI Ray3 is the latest upgrade to Luma Labs’ video-generation model, designed to push past the limits of Ray2. Unlike earlier iterations that focused mostly on rendering short clips, Ray3 introduces a unified creative layer: you can ideate, experiment, and direct visual outputs without leaving the interface.

The system combines two engines under one roof – Ray3 for video and Photon for images. This pairing means you can move fluidly between still images and motion, treating them as parts of the same creative process.

The most interesting feature Ray3 brings, though, is reference-driven consistency. The new model lets you lock styles or characters across multiple shots, something many other AI video makers still struggle with.

Key Features of Luma AI Ray3

Reference-driven generation is only one of Ray3's new features. Here is a closer look at all of them.

1. Boards for Structured Creativity

Ray3 introduces three kinds of boards: Artboard, Storyboard, and Moodboard. These let creators organise raw ideas into structured visuals. Instead of jumping straight into video, you can shape the look and feel before committing to generation.

2. Modify with Natural Language

Need quick changes? Simply type instructions like “make it anime” and the model edits your image or video accordingly. This bridges the gap between professional editing tools and everyday creative language.

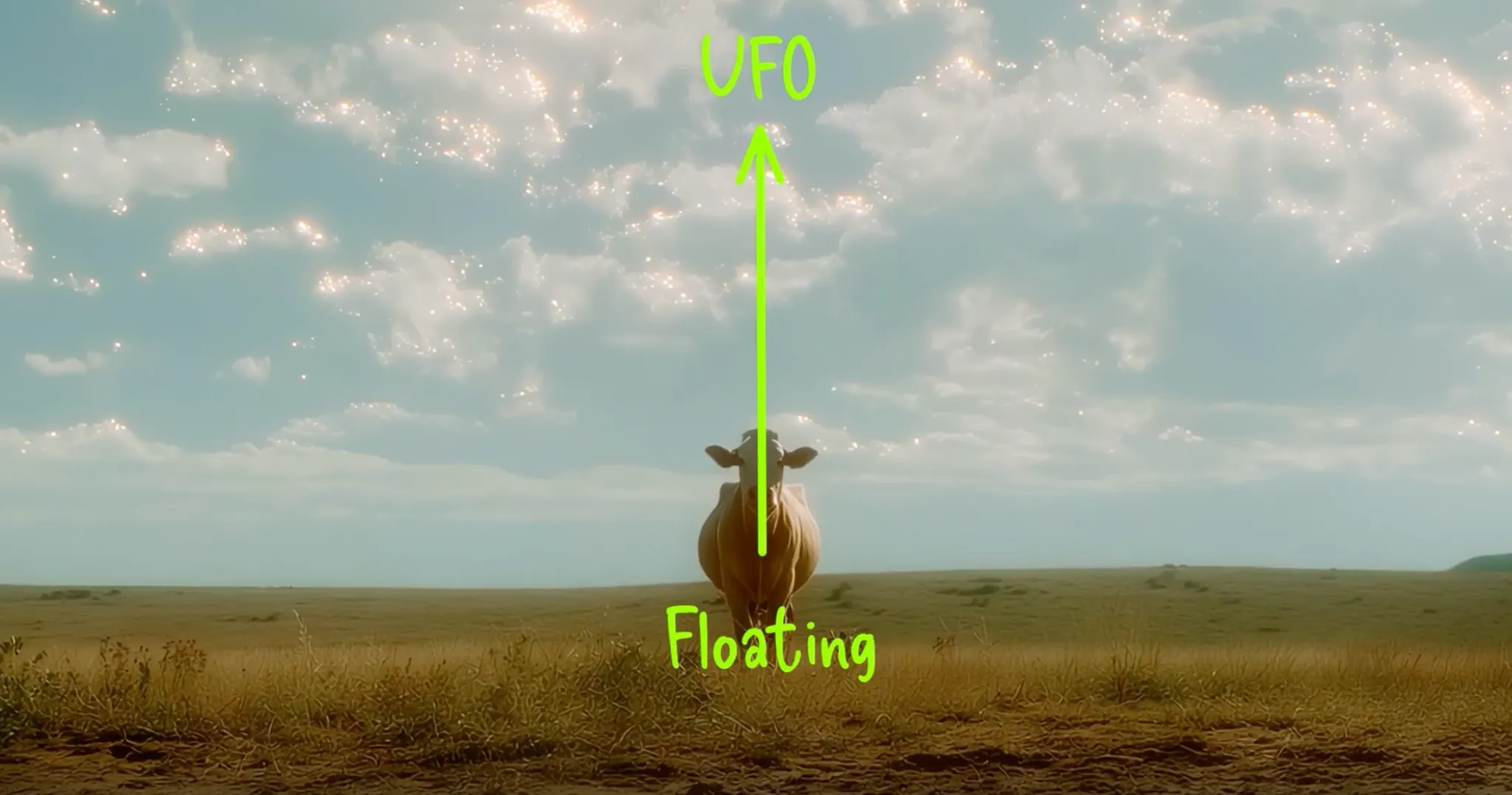

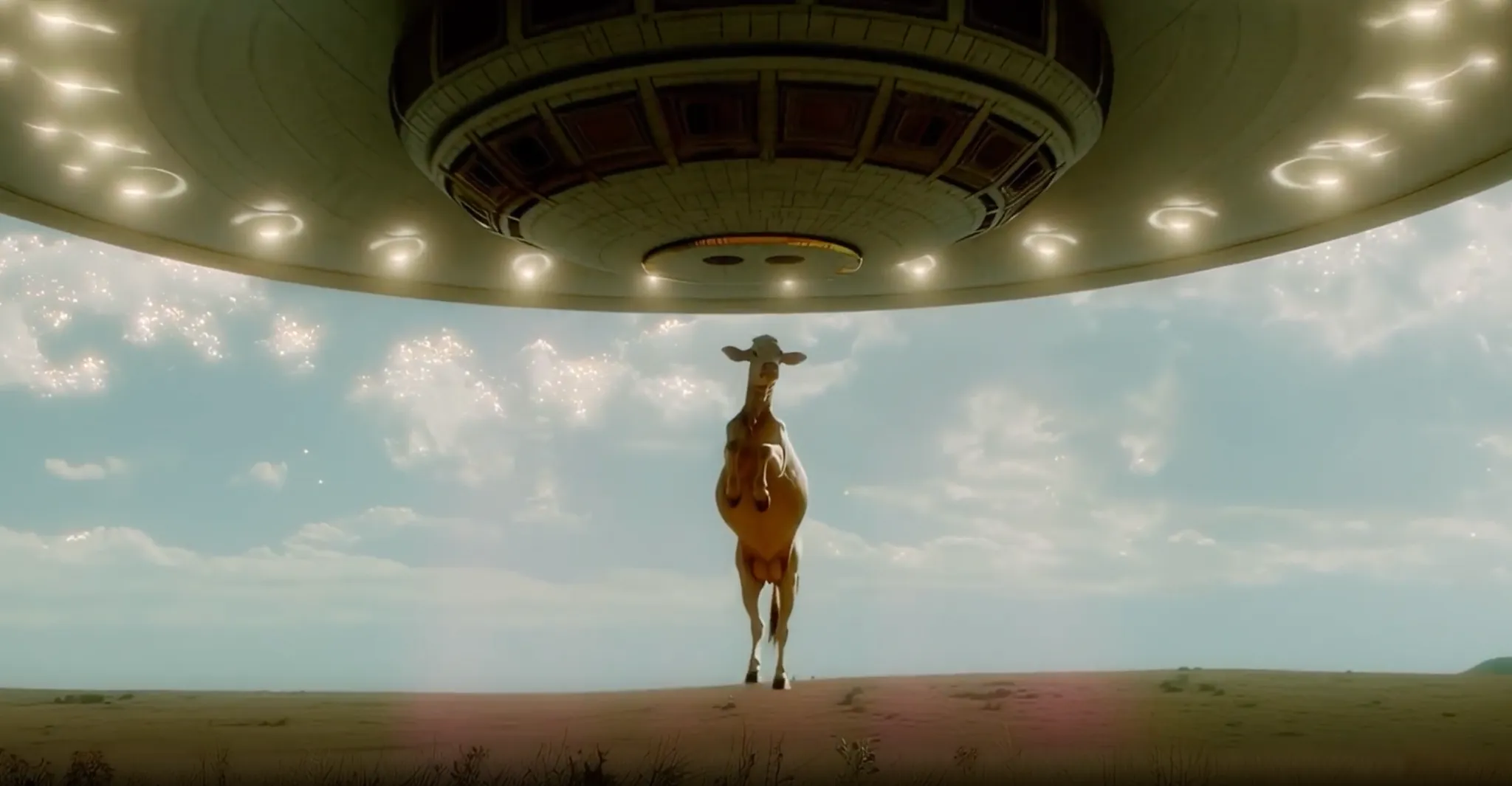

3. Modify with Visual Cues

In one of the most novel features I have personally seen in an AI video maker, Ray3 now lets you modify a video by adding visual cues to it. The model interprets these cues and changes your video accordingly.

4. Reference for Style and Character Consistency

One of the most powerful additions is reference-based generation. By uploading a visual reference, you can have Ray3 maintain consistent styles, environments, or even recurring characters across multiple outputs. Many AI video makers still lack this ability.

5. Keyframe Control

Directors often think in terms of keyframes, and Ray3 embraces that. By defining a start and end frame, you can let the AI fill in the in-between motion, adding a layer of control and predictability to generative video.

6. Brainstorm and Creative Query

Beyond video generation, Ray3 doubles as an idea partner. Its query system can expand rough prompts into detailed creative directions, helping you past creative blocks.

7. Share & Remix

Every project comes with a built-in “behind the scenes” layer. Other creators can see your process, remix your outputs, and build collaboratively, making Ray3 as much a community tool as a production engine.

8. HDR Generation

Luma Labs claims Ray3 is the world’s first model to deliver studio-grade HDR video through native high dynamic range color generation. The tool can generate HDR videos from text prompts, SDR images, and SDR videos. What’s more, it can export them as 16-bit EXR files for integration into professional workflows.

How Does It Work?

Most AI video makers generate clips in isolation, but Luma AI Ray3 combines multiple models and controls into one pipeline. At its core are two engines working together:

- Ray3 (video model): Generates motion sequences, interprets prompts, and handles transitions between frames.

- Photon (image model): Produces high-quality stills, references, and starting points that can flow directly into video creation.

Here’s how the process unfolds when you use Ray3:

- Prompting or Referencing: You begin with either a text prompt (“make it anime”) or a visual reference (an image, a style, or a character).

- Scene Generation: Photon generates the stills, while Ray3 animates them into smooth motion, applying lighting, textures, and camera effects.

- Keyframe Direction: If you provide a start and end frame, Ray3 fills in the in-betweens, simulating how a director would plan shots.

- Edits and Modifications: You can iterate quickly using natural language, from style swaps to environmental changes, without touching traditional editing software.

- Collaboration Layer: Your outputs and processes can be shared, remixed, or extended by other creators on the platform.

How to Access Luma AI Ray3

Time needed: 5 minutes

To get started with Luma AI Ray3, head over to the Dream Machine platform, where Ray3 is currently available alongside Photon, the company’s image model. You’ll need to create an account, after which you can explore Ray3’s workspace directly in your browser. Simply follow these steps:

- Visit Luma Labs' Dream Machine platform.

- Create an account or sign in using an existing one.

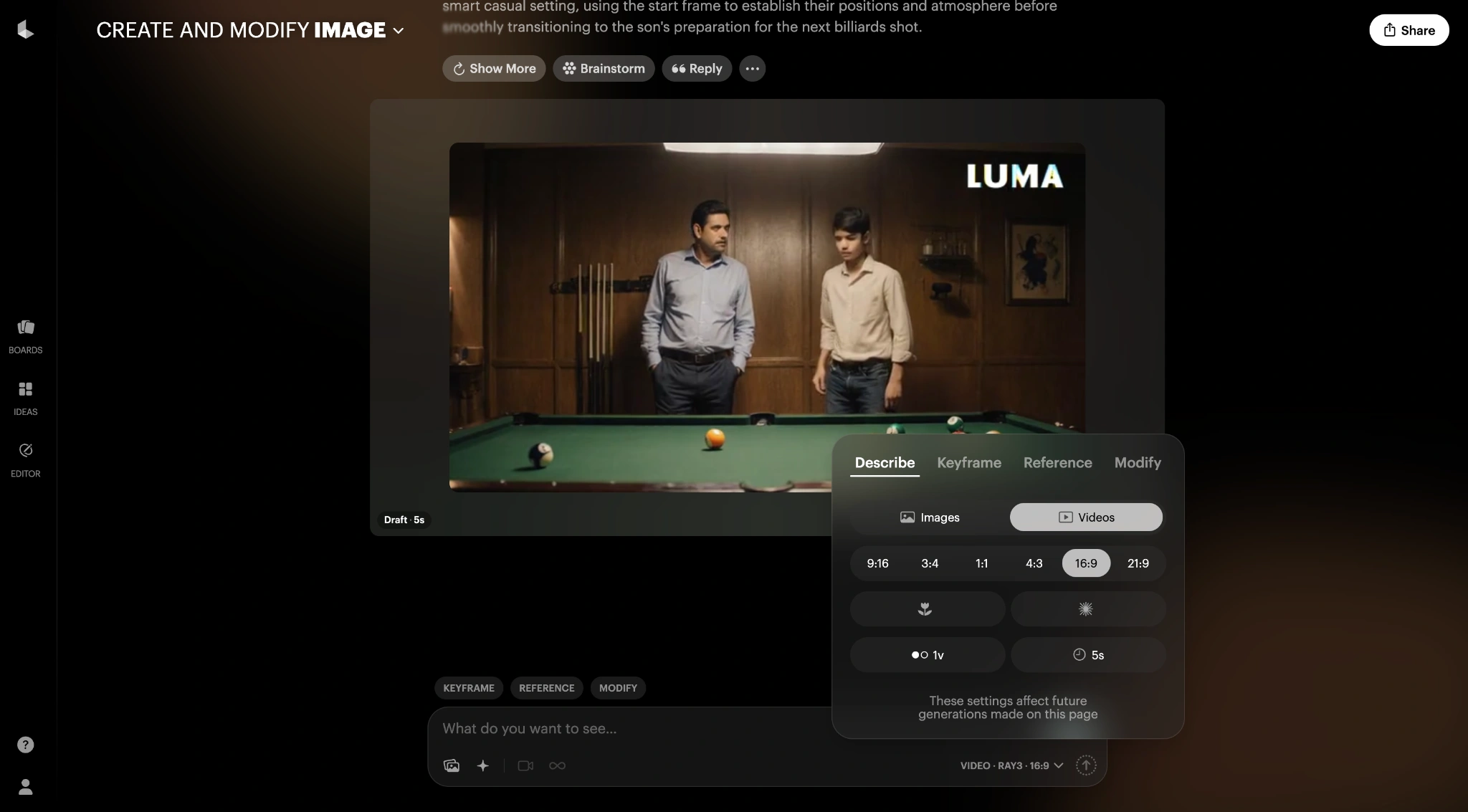

- You will see three options on the screen: Create and Modify using an image, Keyframe with Ray3, and Modify a video.

- To make a new video, click Create and Modify. This opens a storyboard.

- Here, enter your prompt for the video and, optionally, add a reference image for Ray3 to build the video around.

- At the bottom right of the prompt window, select the Ray3 model and adjust the video settings as required.

- Once your prompt is ready, press Enter to start generation.

- Your 5-second video will be generated in around 2 to 3 minutes. You can then download or share it, or modify it further using simple natural-language prompts.

At the moment, Dream Machine is available on both the web and iOS. Ray3, however, currently appears to be available only in trial mode on both.

Ray3 AI Video Maker: Hands-on

I tried Ray3 on an original video with a mystical theme, using the following prompt:

Generate a sweeping cinematic sequence of a lone wanderer – a tall, cloaked figure with glowing amber eyes – crossing an endless ocean of clouds on a floating obsidian bridge. Each step sends faint ripples of light through the bridge, as if it’s alive. The camera glides outward to reveal colossal celestial beings carved of living starlight, suspended in the sky like ancient guardians.

Their silhouettes stretch across the heavens, crowned with halos of orbiting moons. As the traveler moves forward, the beings slowly turn their heads, illuminating the path with cascades of golden cosmic fire.

The traveler stops at the edge of the bridge, cloak billowing, as the scene erupts in a symphony of auroras, shooting stars, and resonant cosmic hums. The mood is epic, surreal, and awe-inspiring – a visual wonder that blends fantasy, sci-fi, and dreamlike myth.

Here is the output that the AI tool generated:

As you can see, the video quality is exceptional. Ray3 rendered the entirely new scenario described in the prompt with considerable nuance and accuracy. It even attempted the finer details, such as the ripples of light at the figure's feet as he walks across the bridge and his glowing amber eyes.

That said, the video has its inconsistencies. Some objects in the distance change shape at random throughout the video, and the celestial beings move in seemingly random ways. Even the hooded figure walks a little strangely, and one of his arms disappears from view partway through.

Ray3 AI Video Maker: My Experience

I tried several other videos too, some with simple text prompts and some with reference images. While Ray3 captures the general intent of a video quite well, it often fails on nuances and finer details. A video of a fighter jet flying over Noida, for instance, turned out very strange: the entire shot was shaky, and the jet changed shape midway through. The same happened with a video of an Indian father-son duo playing billiards (pool), where the balls on the table appeared out of thin air and then vanished just as abruptly.

I looked into how Luma Labs recommends using Ray3. According to the company, the model works best with “short, natural language, simple prompts.” While Ray3 tries to follow the given prompt as accurately as possible, it is most comfortable with short, simple prompts that are highly specific. This also means the model is better suited to familiar objects and environments. Give it something truly unique, and it tends to fumble in the dark.

Where it shines is simple animation of a reference image. Upload the image you want to animate, and Ray3 returns a high-quality video based on it, even without a prompt. Upload an image of a dog, for instance, and it will animate the dog shaking its head or wagging its tail. If you want changes, describe them in follow-up prompts, and Ray3 will handle those too. Pretty straightforward, and very handy.

Conclusion

While Ray3 is impressive, I feel it still has a long way to go before it becomes an ideal AI video maker. The new features it offers are, no doubt, extremely handy. Animating an image, adding keyframes, or modifying a video using natural language or visual cues are game-changers in many ways, as is its ability to generate HDR videos in the paid version.

But it will be a while before it can be called a perfect AI video generation tool.
