Introduction

Retrieval-Augmented Generation (RAG) solutions are everywhere. Over the past few years, we have seen them grow rapidly as organizations use RAG or hybrid-RAG solutions in customer service, healthcare, intelligence, and more. But how do we evaluate these solutions? And what methods can we use to determine the strengths and weaknesses of our RAG models?

This article introduces RAG by building our own chatbot over open-source research data using LangChain and more. We will also use DeepEval to evaluate both the retriever and the generator of our RAG pipeline. Lastly, we will discuss methods for human evaluation of RAG solutions.

Retrieval-Augmented Generation

With the emergence of LLMs, many criticisms were raised when these “base” pre-trained models gave incorrect answers despite being trained on massive datasets. This helped popularize Retrieval-Augmented Generation (RAG), which combines search and generation: it retrieves context-specific information and passes it to the model before the model generates a response.

RAG has become very popular over the last few years due to its ability to reduce hallucinations and improve factuality. RAG solutions are flexible, easy to update, and much cheaper than fine-tuning LLMs. We now come across RAG solutions daily. For example, many organizations have used RAG to build internal chatbots that help employees navigate their knowledge base, and external chatbots that support customer service and other business functions.

Building a RAG Pipeline

For our RAG solution, we will use abstracts from open-source research related to artificial intelligence. We can use this data to generate more “technical” answers when asking questions related to artificial intelligence, machine learning, etc.

The data used comes from the OpenAlex API (https://openalex.org/). This is a dataset/catalogue of open-source research from around the world. The data is freely accessible under a No Rights Reserved license (CC0 license).

Data Ingestion

First, we need to load our data using the OpenAlex API. Below is code to search for works by publication year and key terms. We run a search for AI/ML using key terms like “artificial intelligence”, “deep learning”, “natural language processing”, etc.

import pandas as pd
import requests

def import_data(pages, start_year, end_year, search_terms):
    """
    Use the OpenAlex API to conduct a search on works and return a dataframe of the matching works.

    Inputs:
    - pages: int, number of pages (200 works each) to loop through
    - start_year and end_year: int, years to set as a range for filtering works
    - search_terms: str, keywords to search for (must be formatted according to OpenAlex standards)
    """
    #collect one dataframe per page of results
    pages_of_results = []

    for page in range(1, pages + 1):
        #use parameters to conduct request and format to a dataframe
        response = requests.get(f'https://api.openalex.org/works?page={page}&per-page=200&filter=publication_year:{start_year}-{end_year},type:article&search={search_terms}')
        response.raise_for_status()
        pages_of_results.append(pd.DataFrame(response.json()['results']))

    #combine the pages; ignore_index gives a clean 0..n-1 index for the positional lookups used later
    search_results = pd.concat(pages_of_results, ignore_index=True)

    #subset to relevant features
    search_results = search_results[["id", "title", "display_name", "publication_year", "publication_date",
                                     "type", "countries_distinct_count", "institutions_distinct_count",
                                     "has_fulltext", "cited_by_count", "keywords", "referenced_works_count", "abstract_inverted_index"]]

    return search_results

#search for AI-related research
ai_search = import_data(30, 2018, 2025, "'artificial intelligence' OR 'deep learn' OR 'neural net' OR 'natural language processing' OR 'machine learn' OR 'large language models' OR 'small language models'")

When querying the OpenAlex database, the abstracts are returned as an inverted index. Below is a function to undo the inverted index and return the original text of the abstract.
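The inverted-index format is easiest to see on a tiny example. Here is a made-up index in the {word: [positions]} shape that OpenAlex returns (the sentence is hypothetical, not a real abstract), reversed with a compact position-to-word dictionary. The rebuild_text name is our own; this is an alternative sketch of the same idea, and the function used in this pipeline follows.

```python
def rebuild_text(inverted_index):
    """Rebuild text from an inverted index that maps each word to its positions."""
    if not inverted_index:
        return ""
    # Map every position back to the word that occupies it
    position_to_word = {
        pos: word
        for word, positions in inverted_index.items()
        for pos in positions
    }
    # Emit the words in position order
    return " ".join(position_to_word[pos] for pos in sorted(position_to_word))

# Hypothetical toy index, not real OpenAlex output
toy_index = {"deep": [0], "learning": [1, 5], "models": [2], "need": [3], "more": [4], "data": [6]}
print(rebuild_text(toy_index))  # deep learning models need more learning data
```

A word that appears several times ("learning") simply lists several positions, which is why sorting by position is enough to restore the original order.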

def undo_inverted_index(inverted_index):
    """
    'Undo' an inverted index: take a {word: [positions]} mapping and
    return the original string. Works without an abstract return an empty string.
    """
    #some works have no abstract, in which case there is no index to undo
    if not isinstance(inverted_index, dict):
        return ""

    #loop through the index and collect [word, position] pairs
    word_index = []
    for k, v in inverted_index.items():
        for index in v:
            word_index.append([k, index])

    #sort by the position
    word_index = sorted(word_index, key=lambda x: x[1])

    #keep only the words and join them back into a single string
    words_unindexed = ' '.join(pair[0] for pair in word_index)

    return words_unindexed

#create 'original_abstract' feature
ai_search['original_abstract'] = list(map(undo_inverted_index, ai_search['abstract_inverted_index']))

#drop works without an abstract and reset the index so positional lookups stay valid
ai_search = ai_search[ai_search['original_abstract'] != ''].reset_index(drop=True)

Create a Vector Database

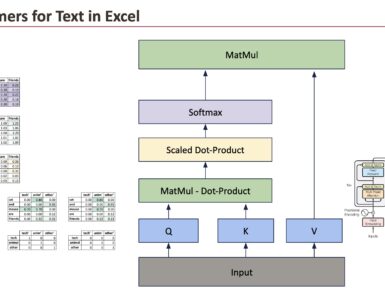

Next, we need to generate embeddings to represent the abstracts and store them in a vector database. It is a best practice to use vector databases here, as they are designed for low-latency queries and can scale to billions of data points. They also use specialized indexing and nearest-neighbor algorithms to quickly retrieve data based on semantic similarity, which makes them a core building block of LLM applications such as RAG.
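Under the hood, the FAISS index built later in this section (IndexFlatL2) performs exact, brute-force nearest-neighbor search: it compares the query against every stored vector by Euclidean distance and, like this sketch, reports squared distances. The NumPy sketch below only illustrates that idea; the knn_l2 helper and the random vectors standing in for abstract embeddings are hypothetical, and FAISS's approximate index types work differently.

```python
import numpy as np

def knn_l2(corpus, query, k):
    """Return indices and squared L2 distances of the k corpus rows nearest to query."""
    # Squared Euclidean distance from the query to every stored vector
    dists = np.sum((corpus - query) ** 2, axis=1)
    # Ascending order: the smallest distance is the most similar vector
    order = np.argsort(dists)[:k]
    return order, dists[order]

rng = np.random.default_rng(0)
corpus = rng.normal(size=(1000, 8)).astype("float32")  # hypothetical "document embeddings"
query = corpus[42] + 0.01                               # a query very close to document 42
ids, dists = knn_l2(corpus, query, k=3)
print(ids[0])  # 42
```

Exhaustive search is exact and simple but scales linearly with the number of vectors, which is fine for a few thousand abstracts like ours.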

First, we import the necessary libraries from LangChain and load our embedding model from Hugging Face. While we could probably get better results with larger embedding models, I decided to use a smaller model to emphasize speed in this pipeline.

You can find and compare embedding models based on their size, performance, intended use, etc. by using the MTEB leaderboard from Hugging Face (https://huggingface.co/spaces/mteb/leaderboard).

import faiss
from langchain_community.docstore.in_memory import InMemoryDocstore
from langchain_community.vectorstores import FAISS
from langchain_huggingface.embeddings import HuggingFaceEmbeddings
from langchain_core.documents import Document

#load embedding model
embeddings = HuggingFaceEmbeddings(model_name="thenlper/gte-small")

Next, we create our vector database using FAISS, through LangChain’s FAISS wrapper. We start by creating a flat L2 index sized to the embedding dimension, wrap it in a LangChain vector store, and format the abstracts as documents, storing their metadata (title and year). We then create a list of IDs, add the documents and IDs to the database, and save the database locally.

#create a flat (exact) L2 index sized to the embedding dimension
index = faiss.IndexFlatL2(len(embeddings.embed_query("hello world")))

#wrap the index in LangChain's FAISS vector store
vector_store = FAISS(
    embedding_function=embeddings,
    index=index,
    docstore=InMemoryDocstore(),
    index_to_docstore_id={})

#format abstracts as documents, keeping title and year as metadata
documents = [Document(page_content=ai_search['original_abstract'][i],
                      metadata={"title": ai_search['title'][i], "year": ai_search['publication_year'][i]})
             for i in range(len(ai_search))]

#create list of ids as strings
n = len(ai_search)
ids = [str(x) for x in range(1, n + 1)]

#add documents to vector store
vector_store.add_documents(documents=documents, ids=ids)

#save the vector store
vector_store.save_local("Data/faiss_index")

With LangChain’s vector stores, we can query our documents directly. Let’s quickly test this by searching for “computer vision”. We can see below that the first document returned, “Face Detection and Recognition Using OPENCV”, is closely related to computer vision.

#test that vector database is working

vector_store.similarity_search("computer vision", k=3)

[Document(id='783', metadata={'title': 'FACE DETECTION AND RECOGNITION USING OPENCV', 'year': 2020}, page_content='Computer Vision is one of the most fascinating and challenging tasks in the field of Artificial Intelligence.Computer Vision serves as a link between computer software and the visuals we see around us.It enables...

Create RAG Pipeline

Now let’s develop our RAG pipeline. A major component of a RAG solution is the generative model used to produce the responses. For this, we will use an OpenAI model through LangChain’s OpenAI integration.

So that we can compare the responses before and after implementing the RAG pipeline, let’s first ask the “base” model: “What are the most recent advancements in computer vision?”.

import os
from langchain_openai import OpenAI
from langchain.chains import RetrievalQA
from langchain_core.prompts import PromptTemplate

#set API key (read from the environment, with a placeholder fallback)
OPENAI_API_KEY = os.getenv("OPENAI_API_KEY", "API KEY")

#load llm
llm = OpenAI(openai_api_key=OPENAI_API_KEY)

#test llm response
llm.invoke("What are the most recent advancements in computer vision?")

‘\n\n1. Deep Learning: Deep learning, a subset of machine learning, has shown significant progress in computer vision tasks such as object detection, recognition, and image classification. It uses neural networks with multiple hidden layers to learn and extract features from images, leading to more accurate and efficient results.\n\n2. Generative Adversarial Networks (GANs): GANs are a type of deep learning algorithm that generates new images by learning from a large dataset. They have been used in tasks such as image synthesis, super-resolution, and image-to-image translation, and have shown impressive results in creating realistic images.\n\n3. Convolutional Neural Networks (CNNs): CNNs are a type of deep learning algorithm that has revolutionized the field of computer vision. They are highly effective in extracting features from images and have been used in various tasks such as image classification, object detection, and segmentation.\n\n4. Transfer Learning: Transfer learning allows a pre-trained model to be used on a different task or dataset without starting from scratch. It has shown promising results in computer vision tasks, especially for tasks with limited training data.\n\n5. Image Segmentation: With advancements in deep learning, image segmentation has become more accurate and efficient. It involves dividing an image into different regions or segments to identify objects’

From the response above, we can see a general summary of computer vision, a high-level description of how it works, and different types of models and applications. While it is a good summary, it does not directly answer our question. A great opportunity for RAG!

Next, we will build the components for our RAG pipeline. First we need a retriever to grab the top k documents related to our query. We then build a prompt instructing our model how to respond to questions. Lastly, we combine them with the base generative model to create our pipeline.
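The “stuff” chain type used below is the simplest way to combine these pieces: the retrieved documents are joined into a single string and substituted into the prompt’s {context} slot alongside the question, and the filled prompt is sent to the model in one call. A plain-Python sketch of that step follows; the stuff_prompt helper and the example abstracts are hypothetical, and LangChain’s own implementation differs in details such as how each document is formatted and separated.

```python
def stuff_prompt(template, docs, question, separator="\n\n"):
    """Join the retrieved documents into one context string and fill the template."""
    context = separator.join(docs)
    return template.format(context=context, question=question)

template = (
    "Relevant information:\n{context}\n\n"
    "Provide a concise answer to the following question using relevant "
    "information provided above:\n{question}"
)
# Hypothetical retrieved abstracts
docs = [
    "Abstract A: vision-language models combine image and text inputs.",
    "Abstract B: diffusion models generate realistic images.",
]
prompt_text = stuff_prompt(template, docs, "What are recent advances in computer vision?")
print(prompt_text)
```

Because every retrieved document goes into a single prompt, the number of retrieved documents (k) is bounded by the model’s context window.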

Let’s quickly retest our query of “What are the most recent advancements in computer vision?”.

#create a retriever that returns the top 3 most similar abstracts
retriever = vector_store.as_retriever(search_kwargs={"k": 3})

#create a prompt template
template = """<|user|>
Relevant information:
{context}

Provide a concise answer to the following question using relevant information provided above:
{question}

If the information above does not answer the question, say that you do not know. Keep answers to 3 sentences or shorter.<|end|>
<|assistant|>"""

#define prompt template
prompt = PromptTemplate(
    template=template,
    input_variables=["context", "question"])

#create RAG pipeline: retrieve the top-k documents and "stuff" them into the prompt
rag = RetrievalQA.from_chain_type(llm=llm, chain_type="stuff", retriever=retriever,
                                  return_source_documents=True,
                                  chain_type_kwargs={"prompt": prompt}, verbose=True)

#test rag response
rag.invoke("What are the most recent advancements in computer vision?")

The most recent advancements in computer vision include the emergence of large language models equipped with vision capabilities, such as OpenAI’s GPT-4V, Google’s Bard AI, and Microsoft’s Bing AI. These models are able to analyze images and have the ability to access real-time information, making them directly embedded in many applications. Further advancements are expected as AI continues to rapidly evolve.

This response does a much better job of answering our question. It directly addresses the most recent advancements by calling out specific capabilities, models, and how they contribute to advancing computer vision. This is a promising result for our technical chatbot.

But this is not a proper evaluation. Next, we will test our RAG solution further on a number of metrics to help us determine whether it is ready for production.

LLM-as-a-Judge

To begin evaluating our solution, we will use another generative model to judge how well our RAG solution meets certain criteria. While LLM-as-a-Judge methods have caveats and need to be used carefully, they offer a lot of flexibility and efficiency. They can also give detailed insights during the evaluation process, as you will see below.

Our RAG solution consists of two main components: the retriever and the generator. We will evaluate these components separately. Our findings could prompt us to tune hyperparameters, replace the embedding model, or use a different generative model.

Retriever Evaluation

First, we will evaluate our retriever, the component that fetches the relevant content. We will judge it on three metrics:

- Contextual Precision: A higher score indicates a greater ability of the retrieval system to rank relevant nodes above irrelevant ones. It first uses an LLM to determine whether each node is relevant to the input, before calculating the weighted cumulative precision.

- Contextual Recall: A higher score indicates a greater ability of the retrieval system to capture all of the relevant information. It measures how much of the expected output can be attributed to the retrieved nodes.

- Contextual Relevancy: Evaluates the overall relevance of the retrieved information for the given input.
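According to the DeepEval documentation, each of these scores is computed from the judge LLM’s yes/no verdicts: contextual precision is the weighted cumulative precision described above, contextual recall is the share of expected-output statements that can be attributed to the retrieval context, and contextual relevancy is the share of retrieved statements that are relevant to the input. The sketch below reproduces only that arithmetic; the helper names and verdict lists are hypothetical, whereas DeepEval obtains the verdicts by prompting the judge model.

```python
def contextual_precision(relevance):
    """Weighted cumulative precision over ranked 0/1 relevance verdicts (rank 1 first)."""
    total_relevant = sum(relevance)
    if total_relevant == 0:
        return 0.0
    score, relevant_so_far = 0.0, 0
    for k, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            relevant_so_far += 1
            score += relevant_so_far / k  # precision@k, counted only at relevant ranks
    return score / total_relevant

def ratio(verdicts):
    """Fraction of 'yes' verdicts; the same arithmetic serves recall and relevancy."""
    return sum(verdicts) / len(verdicts) if verdicts else 0.0

# Hypothetical verdicts for three retrieved nodes
print(contextual_precision([1, 1, 0]))  # 1.0: relevant nodes ranked first
print(contextual_precision([0, 1, 1]))  # 0.5833...: irrelevant node ranked first
recall = ratio([0, 0, 0, 0])      # expected-output statements attributable to the context
relevancy = ratio([1, 1, 0, 1])   # retrieved statements relevant to the input
```

The weighting is what makes precision sensitive to order: the same three nodes score 1.0 or about 0.58 depending only on where the irrelevant node is ranked.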

First, we import the libraries and initialize the metrics.

import os
from deepeval import evaluate
from deepeval.test_case import LLMTestCase, LLMTestCaseParams
from deepeval.metrics import (
    ContextualPrecisionMetric,
    ContextualRecallMetric,
    ContextualRelevancyMetric)

#set your OpenAI API key (DeepEval uses an OpenAI model as the judge by default)
os.environ["OPENAI_API_KEY"] = "API KEY"

#initialize metrics
contextual_precision = ContextualPrecisionMetric()
contextual_recall = ContextualRecallMetric()
contextual_relevancy = ContextualRelevancyMetric()

Next, we need to build a test case: a query paired with the output we expect for it, along with our RAG solution’s actual output and the contexts it retrieved. These testing datasets can be difficult to build, and should have input from domain experts who understand the questions that may be asked and what the answers should be.

For this example, we will create just one test case with a mock expected output. This will not give us a reliable measure of performance, but it illustrates how the metrics work.

#define user query
input = 'What are the most recent advancements in computer vision?'

#run the RAG pipeline once so the output and the retrieved contexts come from the same call
response = rag.invoke(input)

#RAG output
actual_output = response['result']

#contexts used by the retriever
retrieved_contexts = [doc.page_content for doc in response['source_documents']]

#expected output (example)
expected_output = 'Recent advancements in computer vision include Vision-Language Models (VLMs) that merge vision and language, Neural Radiance Fields (NeRFs) for 3D scene generation, and powerful Diffusion Models and Generative AI for creating realistic visuals. Other key areas are Edge AI for real-time processing, enhanced 3D vision techniques like NeRFs and Visual SLAM, advanced self-supervised learning methods, deepfake detection systems, and increased focus on Ethical AI and Explainable AI (XAI) to ensure fairness and transparency.'

With the components above, we can now build our test case and compute our three metrics.

#create test case
test_case = LLMTestCase(
    input=input,
    actual_output=actual_output,
    retrieval_context=retrieved_contexts,
    expected_output=expected_output)

#compute contextual precision and print results
contextual_precision.measure(test_case)
print("Score: ", contextual_precision.score)
print("Reason: ", contextual_precision.reason)

#compute contextual recall and print results
contextual_recall.measure(test_case)
print("Score: ", contextual_recall.score)
print("Reason: ", contextual_recall.reason)

#compute contextual relevancy and print results
contextual_relevancy.measure(test_case)
print("Score: ", contextual_relevancy.score)
print("Reason: ", contextual_relevancy.reason)

Score: 1.0
Reason: The score is 1.00 because the relevant nodes are ranked at the top: the first node discusses ‘recent progress on computer vision algorithms’ and ‘prominent achievements,’ and the second node covers the ‘evolution of computer vision’ and foundational advancements. The irrelevant node, which only describes the OpenCV toolkit and lacks discussion of recent advancements, is correctly ranked last. This perfect ordering ensures the highest contextual precision.

Score: 0.0
Reason: The score is 0.00 because none of the sentences in the expected output can be traced back to any node(s) in the retrieval context; there is no overlap or relevant information present.

Score: 0.5555555555555556
Reason: The score is 0.56 because, while there are several statements that discuss recent progress and deep learning advancements in computer vision (e.g., ‘The prominent achievements in computer vision tasks such as image classification, object detection and image segmentation brought by deep learning techniques are highlighted.’), much of the context is general background or unrelated details (e.g., ‘The explanation of the term ‘convolutional’ as a mathematical operation is not directly relevant to the advancements in computer vision.’).

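As seen above, one of the benefits of using an LLM as a judge is that we get detailed feedback on why our scores are what they are. For example, we get a score of 0.56 for contextual relevancy because the LLM deemed some of the retrieved information unnecessary (slightly going down a rabbit hole about CNNs). Likewise, the contextual recall of 0.0 reflects our mock expected output: none of its statements can be traced back to the retrieved abstracts.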

We can also use the ‘evaluate’ function from DeepEval to better automate this process. This is useful when testing your RAG on several test cases.

#run all metrics with 'evaluate' function
evaluate(test_cases=[test_case],
         metrics=[contextual_precision, contextual_recall, contextual_relevancy])

Generation Evaluation

Next, we evaluate our generator, which generates the responses based on the context given by the retriever. Here, we will compute 2 metrics:

- Answer Relevancy: Similar to contextual relevancy, evaluates whether the prompt template in your generator is able to instruct your LLM to give relevant outputs based on the context.

- Faithfulness: Evaluates whether the LLM used in your generator can output information that does not hallucinate or contradict any factual information presented in the retrieval context.
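The DeepEval documentation describes both generator metrics as ratios over judge verdicts: answer relevancy is the share of statements in the actual output that are relevant to the input, and faithfulness is the share of claims in the actual output that do not contradict the retrieval context. The sketch below shows only that arithmetic; the Verdict class, the verdict_score helper, and the labelled statements are hypothetical, since in DeepEval the judge LLM extracts the statements or claims and labels them.

```python
from dataclasses import dataclass

@dataclass
class Verdict:
    text: str  # a statement or claim extracted from the actual output
    ok: bool   # judge label: relevant to the input / not contradicted by the context

def verdict_score(verdicts):
    """Fraction of verdicts labelled ok."""
    if not verdicts:
        return 1.0  # assumption of this sketch: nothing to judge counts as a pass
    return sum(v.ok for v in verdicts) / len(verdicts)

# Hypothetical judge output for answer relevancy (statements vs. the input)
relevancy_verdicts = [
    Verdict("Vision-capable language models are a recent advancement.", True),
    Verdict("These models can analyze images.", True),
]
# Hypothetical judge output for faithfulness (claims vs. the retrieval context)
faithfulness_verdicts = [
    Verdict("GPT-4V can analyze images.", True),
    Verdict("Bard AI has vision capabilities.", True),
    Verdict("These models were released in 2015.", False),
]
print(verdict_score(relevancy_verdicts))     # 1.0
print(verdict_score(faithfulness_verdicts))  # 0.666...
```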

As before, let’s initialize the metrics, then compute each one on our test case.

from deepeval.metrics import AnswerRelevancyMetric, FaithfulnessMetric

#initialize metrics
answer_relevancy = AnswerRelevancyMetric()
faithfulness = FaithfulnessMetric()

#compute answer relevancy and print results
answer_relevancy.measure(test_case)
print("Score: ", answer_relevancy.score)
print("Reason: ", answer_relevancy.reason)

#compute faithfulness and print results
faithfulness.measure(test_case)
print("Score: ", faithfulness.score)
print("Reason: ", faithfulness.reason)

Score: 1.0
Reason: The score is 1.00 because the answer was fully relevant and addressed the question directly without any irrelevant information. Great job staying focused and informative!

Score: 1.0
Reason: Great job! There are no contradictions, so the actual output is fully faithful to the retrieval context.

As seen above, our generator performs very well (on this test case). The answer stays relevant, and the output does not contradict the retrieved context. Again, we can use the ‘evaluate’ function to evaluate several test cases.

#run all metrics with 'evaluate' function
evaluate(test_cases=[test_case],
         metrics=[answer_relevancy, faithfulness])

A caveat of these metrics is that they are generic and only target a few aspects of the generated output, such as relevancy. But we can also create tailored metrics to determine how well our RAG solution performs in the areas that matter to us specifically.

For example, we can pose questions like “How does my RAG handle dark humor?”, or “Is the output written at a kid-friendly level?”. For our example, let’s determine how well our RAG provides technically written responses.

from deepeval.metrics import GEval
from deepeval.test_case import LLMTestCaseParams

#create evaluation for technical language; only the actual output is judged
tech_eval = GEval(
    name="Technical Language",
    criteria="Determine how technically written the actual output is",
    evaluation_params=[LLMTestCaseParams.ACTUAL_OUTPUT])

#run evaluation
tech_eval.measure(test_case)
print("Score: ", tech_eval.score)
print("Reason: ", tech_eval.reason)

Score: 0.6437823499114202
Reason: The response uses appropriate technical terminology such as ‘deep learning’, ‘image classification’, ‘object detection’, ‘image segmentation’, ‘GPUs’, and ‘FPGAs’. The explanations are clear but somewhat general, lacking specific examples or recent breakthroughs. The technical detail is moderate, mentioning both algorithmic and hardware aspects, but does not delve into particular models or methods. The writing is mostly formal and adheres to technical conventions, but the depth and specificity could be improved.

In the output above, we can see that our RAG produces moderately technical answers. It uses appropriate technical terminology with clear explanations, but lacks specific examples. This is likely due to using abstracts as our data source, since abstracts are written at a fairly high level.
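A non-round score such as 0.64 fits the scoring rule described in the original G-Eval paper: rather than taking the judge’s single answer, each candidate score is weighted by the probability the judge model assigns to it, and the weighted scores are summed. DeepEval’s GEval documentation describes a similar probability-based normalization, but its exact scale and details may differ. A minimal sketch of the idea, assuming a 0-10 scale normalized to 0-1; the helper name and the probabilities are made up.

```python
def probability_weighted_score(score_probs, max_score=10):
    """Expected score under the judge's distribution over candidate scores, scaled to 0-1."""
    total_p = sum(score_probs.values())
    # Renormalize in case the probabilities do not sum exactly to 1
    expected = sum(score * p for score, p in score_probs.items()) / total_p
    return expected / max_score

# Hypothetical probabilities the judge assigns to each candidate score
score_probs = {5: 0.1, 6: 0.4, 7: 0.4, 8: 0.1}
print(round(probability_weighted_score(score_probs), 2))  # 0.65
```

Weighting by probability turns a coarse integer rating into a finer-grained score that reflects how confident the judge was between neighboring ratings.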

Human Evaluation

While LLM-as-a-judge methods give us a lot of useful information, they are generic and should be caveated, as they do not fully assess real-world applicability. Humans, however, can assess this better, as no one knows the data better than the domain experts within the organization.

Human evaluation typically reviews outputs for correctness, justification quality, and fluency. Evaluators must determine whether the output is accurate, logically connects the retrieved evidence to its conclusion, reads naturally, and is useful. It is important to keep in mind the data, users, and purpose of your RAG solution to properly address these domain-specific requirements.
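One lightweight way to organize this is a per-response rubric that domain experts fill in, averaged per criterion. The sketch below is illustrative only: the criteria mirror the ones named above, while the HumanRating class, the summarize helper, the 1-5 scale, and the ratings themselves are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class HumanRating:
    question: str
    correctness: int    # 1-5: is the answer accurate?
    justification: int  # 1-5: does it connect retrieved evidence to the conclusion?
    fluency: int        # 1-5: does it read naturally?
    usefulness: int     # 1-5: would it help the intended user?

CRITERIA = ("correctness", "justification", "fluency", "usefulness")

def summarize(ratings):
    """Average each criterion across all rated responses."""
    return {c: mean(getattr(r, c) for r in ratings) for c in CRITERIA}

# Hypothetical ratings from a domain expert
ratings = [
    HumanRating("What are the most recent advancements in computer vision?", 4, 3, 5, 4),
    HumanRating("What is transfer learning?", 5, 4, 5, 5),
]
print(summarize(ratings))
```

Per-criterion averages make it easy to see which aspect (here, justification) most needs work, something a single overall score would hide.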

Conclusion

In this article, we built a RAG pipeline over open-source research using FAISS, LangChain, and more. We also explored how to evaluate RAG solutions, evaluating both our retriever and our generator. Libraries like DeepEval let us define test cases and score them with LLM-as-a-judge metrics for relevancy, faithfulness, and more. Lastly, we discussed how important human evaluation is when determining the real-world applicability of your RAG solution.

I hope you have enjoyed my article! Please feel free to comment, ask questions, or request other topics.

Connect with me on LinkedIn: https://www.linkedin.com/in/alexdavis2020/
