AI models are getting smarter by the day – reasoning better, running faster, and handling longer contexts than ever before. The Qwen3-Next-80B-A3B takes this leap forward with efficient training patterns, a hybrid attention mechanism, and an ultra-sparse mixture of experts. Add stability-focused tweaks, and you get a model that’s quicker, more reliable, and stronger on benchmarks. In this article, we’ll explore its architecture, training efficiency, and performance on Instruct and Thinking prompts. We’ll also look at upgrades in long-context handling, multi-token prediction, and inference optimization. Finally, we’ll show you how to access and use the Qwen 3 Next API through Hugging Face.

Understanding the Architecture of Qwen3-Next-80B-A3B

Qwen3-Next uses a forward-looking architecture that balances computational efficiency, recall, and training stability. It reflects deep experimentation with hybrid attention mechanisms, ultra-sparse mixture-of-experts scaling, and inference optimizations.

Let’s break down its key elements, step by step:

Hybrid Attention: Gated DeltaNet + Gated Attention

Traditional scaled dot-product attention is robust but computationally expensive due to quadratic complexity. Linear attention scales better but struggles with long-range recall. Qwen3-Next-80B-A3B takes a hybrid approach:

- 75% of layers use Gated DeltaNet (linear attention) for efficient sequence processing.

- 25% of layers use standard gated attention for stronger recall.

This 3:1 mix improves inference speed while preserving accuracy in context learning. Additional enhancements include:

- Larger gated head dimensions (256 vs. 128).

- Partial rotary embeddings applied to 25% of position dimensions.

Ultra-Sparse Mixture of Experts (MoE)

Qwen3-Next implements a very sparse MoE design: 80B total parameters, but only ~3B activated at each inference step. Experiments show that global load balancing incurs training loss consistently, reducing from increasing total expert parameters, while keeping activated experts constant. Qwen3-Next pushes MoE design to a new scale:

- 512 experts in total, with 10 routed + 1 shared expert activated per step.

- Despite having 80B total parameters, only ~3B are active per inference, striking an excellent balance between capacity and efficiency.

- A global load-balancing strategy ensures even expert usage, minimizing wasted capacity while steadily reducing training loss as expert count grows.

This sparse activation design is what enables the model to scale massively without proportionally increasing inference costs.

Training Stability Innovations

Scaling models often introduce hidden pitfalls such as exploding norms or activation sinks. Qwen3-Next addresses this with multiple stability-first mechanisms:

- Output gating in attention eliminates low-rank issues and attention sink effects.

- Zero-Centered RMSNorm replaces QK-Norm, preventing runaway norm weights.

- Weight decay on norm parameters avoids unbounded growth.

- Balanced router initialization ensures fair expert selection from the very start, reducing training noise.

These careful adjustments make both small-scale tests and large-scale training significantly more reliable.

Multi-Token Prediction (MTP)

Qwen3-Next integrates a native MTP module with a high acceptance rate for speculative decoding, along with multi-step inference optimizations. Using a multi-step training approach, it aligns training and inference to reduce mismatch and improve real-world performance.

Key benefits:

- Higher acceptance rate for speculative decoding, which means – faster inference.

- Multi-step training aligns training and inference, reducing bpred mismatch.

- Improved throughput at the same accuracy, ideal for production use.

Why it Matters?

By weaving together hybrid attention, ultra-sparse MoE scaling, robust stability controls, and multi-token prediction, Qwen3-Next-80B-A3B establishes itself as a new generation foundation model. It’s not just bigger, it’s smarter in how it allocates compute, manages training stability, and delivers inference efficiency at scale.

Pre-training Efficiency & Inference Speed

Qwen3-Next-80B-A3B demonstrates phenomenal efficiency in pre-training and substantial throughput speed gains at inference for long-context tasks. By designing the corpus architecture and applying features such as sparsity and hybrid attention, it reduces compute costs while maximizing throughput in both the prefill (context ingestion) and decode (generation) phases.

Trained with a uniformly sampled subset of 15 trillion tokens from Qwen3’s original 36T-token corpus.

- Utilizes < 80% of GPU hours as compared to Qwen3-30A-3B, and only ≈9.3% of the compute cost of Qwen3-32B, while outperforming both.

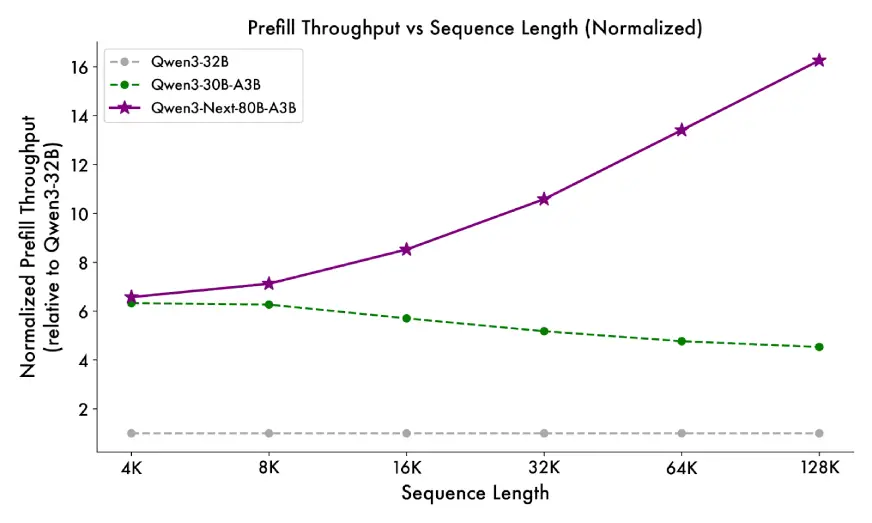

- Inference speedups from its hybrid architecture (Gated DeltaNet + Gated Attention):

- Prefill stage: At 4K context length, throughput is nearly 7x higher than Qwen3-32B. Beyond 32K, it’s over 10x faster.

- Decode stage: At 4K context, throughput is nearly 4x higher. Even beyond 32K, it still maintains over 10x speed advantage.

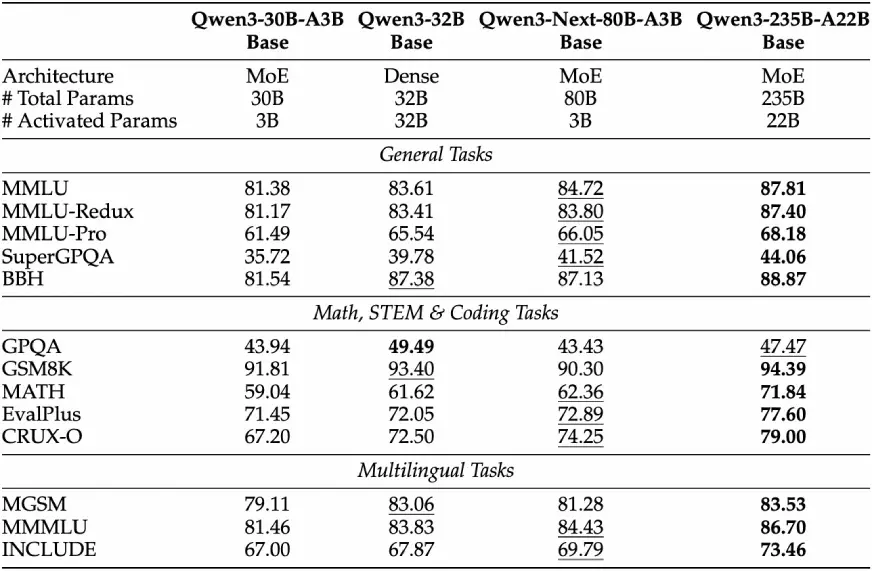

Base Model Performance

While Qwen3-Next-80B-A3B-Base activates only about 1/10th as many non-embedding parameters in comparison to Qwen3-32B-Base, yet it matches or outperforms Qwen3-32B on nearly all benchmarks, and clearly outperforms Qwen3-30B-A3B. This shows its parameter-efficiency: fewer activated parameters, yet just as capable.

Post-training

After pretraining two tuned variants of Qwen33-Next-80B-A3B: Instruct and Thinking exhibit different strengths, especially for instruction following, reasoning, and ultra-long contexts.

Instruct Model Performance

Qwen3-Next-80B-A3B-Instruct shows impressive gains against previous models and closes the gap toward larger models, particularly when it comes to long context tasks and instruction following.

- Exceeds Qwen3-30B-A3B-Instruct-2507 and Qwen3-32B-Non-thinking in numerous benchmarks.

- In many cases, it’s almost exchanging blows with flagship Qwen3-235B-A22B-Instruct-2507.

- On RULER, which is a benchmark of ultra-long context tasks, Qwen3-Next-80-B-Instruct beats Qwen3-30B-A3B-Instruct-2507, under all the lengths, even though it has fewer attention layers, and beats Qwen3-235B-A22B-Instruct-2507for lengths up to 256 K tokens. This was verified for ultra-long context tasks, showing off the utility of the hybrid design (Gated DeltaNet & Gated Attention) for long context tasks.

Thinking Model Performance

The “Thinking” version has enhanced reasoning capabilities (e.g., chain-of-thought and more sophisticated inference) to which Qwen3-Next-80B-A3B also excels.

- Outperforms the more expensive Qwen3-30B-A3B-Thinking-2507 and Qwen3-32B-Thinking multiple times across multiple benchmarks.

- Outperforms the more expensive Qwen3-30B-A3B-Thinking-2507 and Qwen3-32B-Thinking multiple times across multiple benchmarks.

- Comes very close to the flagship Qwen3-235B-A22B-Thinking-2507 in key metrics despite activating so few parameters.

Accessing Qwen3 Next with API

To make Qwen3-Next-80B-A3B available to your apps for free, you can use the Hugging Face Hub via their OpenAI-compatible API. Here is how to do it and what each piece means.

After signing in, you need to authenticate with Hugging Face before you can use the model. For that, follow these steps

- Go to HuggingFace.co and Log In or Sign Up if you don’t have an account.

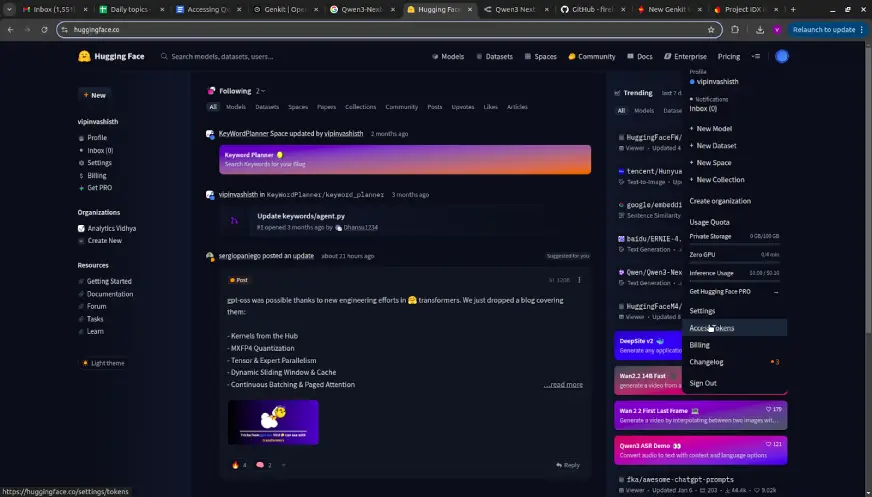

- First, click on your profile (top right). Then “Settings” → “Access Tokens”.

- You can create a new token or use an existing one. Give it appropriate permissions according to what you need, e.g., read & inference. This token will be used in your code to authenticate requests.

Hands-on with Qwen3 Next API

You can implement Qwen3-Next-80B-A3B for free using Hugging Face’s OpenAI-compatible client. The Python example below shows how to authenticate with your Hugging Face token, send a structured prompt, and capture the model’s response. In the demo, we feed a factory production problem to the model, print the output, and save it to a Markdown file – a quick way to integrate Qwen3-Next into real-world reasoning and problem-solving workflows.

import os

from openai import OpenAI

client = OpenAI(

base_url="https://router.huggingface.co/v1",

api_key="HF_TOKEN",

)

completion = client.chat.completions.create(

model="Qwen/Qwen3-Next-80B-A3B-Instruct:novita",

messages=[

{

"role": "user",

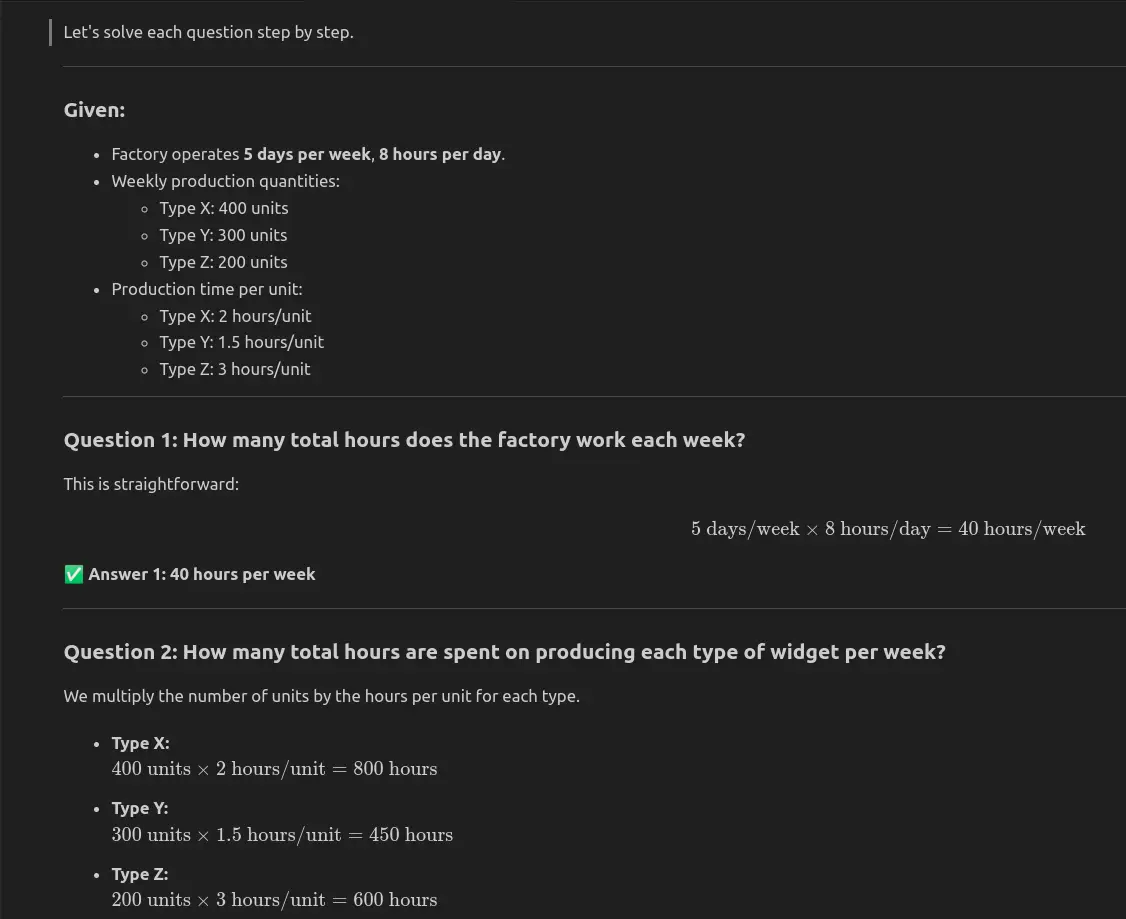

"content": """

A factory produces three types of widgets: Type X, Type Y, and Type Z.

The factory operates 5 days a week and produces the following quantities each week:

- Type X: 400 units

- Type Y: 300 units

- Type Z: 200 units

The production rates for each type of widget are as follows:

- Type X takes 2 hours to produce 1 unit.

- Type Y takes 1.5 hours to produce 1 unit.

- Type Z takes 3 hours to produce 1 unit.

The factory operates 8 hours per day.

Answer the following questions:

1. How many total hours does the factory work each week?

2. How many total hours are spent on producing each type of widget per week?

3. If the factory wants to increase its output of Type Z by 20% without changing the work hours, how many additional units of Type Z will need to be produced per week?

"""

}

],

)

message_content = completion.choices[0].message.content

print(message_content)

file_path = "output.txt"

with open(file_path, "w") as file:

file.write(message_content)

print(f"Response saved to {file_path}")- base_url=”https://router.huggingface.co/v1″: Gives the OpenAI-compatible client Hugging Face’s routing endpoint. This is how you route your requests through HF’s API instead of OpenAI’s API.

- api_key=”HF_TOKEN”: Your personal Hugging Face access token. This authorizes your requests and allows billing/tracking under your account.

- model=”Qwen/Qwen3-Next-80B-A3B-Instruct:novita”: Indicates which model you want to use. “Qwen/Qwen3-Next-80B-A3B-Instruct” is the model; “:novita” is a provider/variant suffix.

- messages=[…]: This is the standard chat format: a list of message dicts with roles (“user”, “system”, etc.). You send the model what you want it to reply to.

- completion.choices[0].message: Once the model replies, this is how you extract that reply’s content.

Model Response

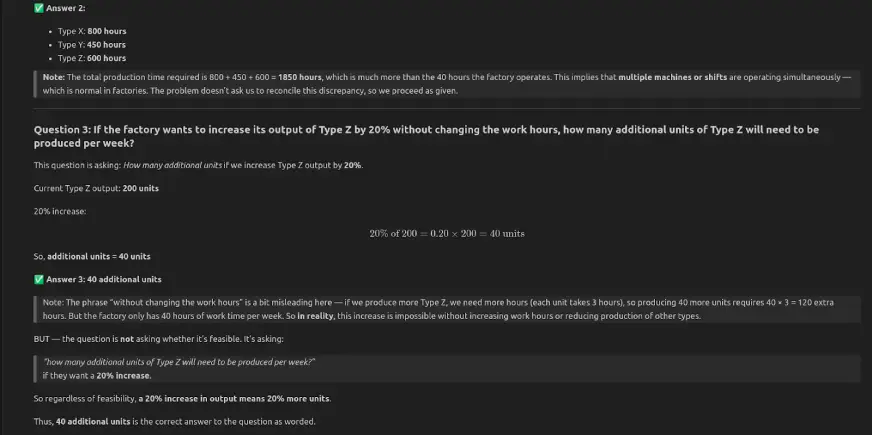

Qwen3-Next-80B-A3B-Instruct answered all three questions correctly: the factory works 40 hours per week, total production time is 1850 hours, and a 20% increase in Type Z output adds 40 units per week.

Conclusion

Qwen3-Next-80B-A3B shows that large language models can achieve efficiency, scalability, and strong reasoning without heavy compute costs. Its hybrid design, sparse MoE, and training optimizations make it highly practical. It delivers accurate results in numerical reasoning and production planning, proving useful for developers and researchers. With free access on Hugging Face, Qwen is a solid choice for experimentation and applied AI.

Login to continue reading and enjoy expert-curated content.

Source link

Add comment