Enterprises are moving past simple chatbots into complex, business-critical AI systems. Different teams are experimenting at once, which sounds exciting but quickly turns chaotic. Costs rise, systems fragment, and reliability drops when there’s no shared control layer. The OpenAI outage in August 2025 made this painfully clear: copilots froze, chatbots failed, and productivity tanked across industries.

The question is no longer whether companies can use AI but whether they can trust it to run their business. Scaling AI safely means having a way to manage, govern, and monitor it across models, vendors, and internal tools. Traditional infrastructure wasn’t built for this, so two new layers have emerged to fill the gap: the AI Gateway and the Model Context Protocol (MCP). Together, they turn scattered AI experiments into something reliable, compliant, and ready for real enterprise use.

The Enterprise AI Backbone: Establishing Control with the AI Gateway

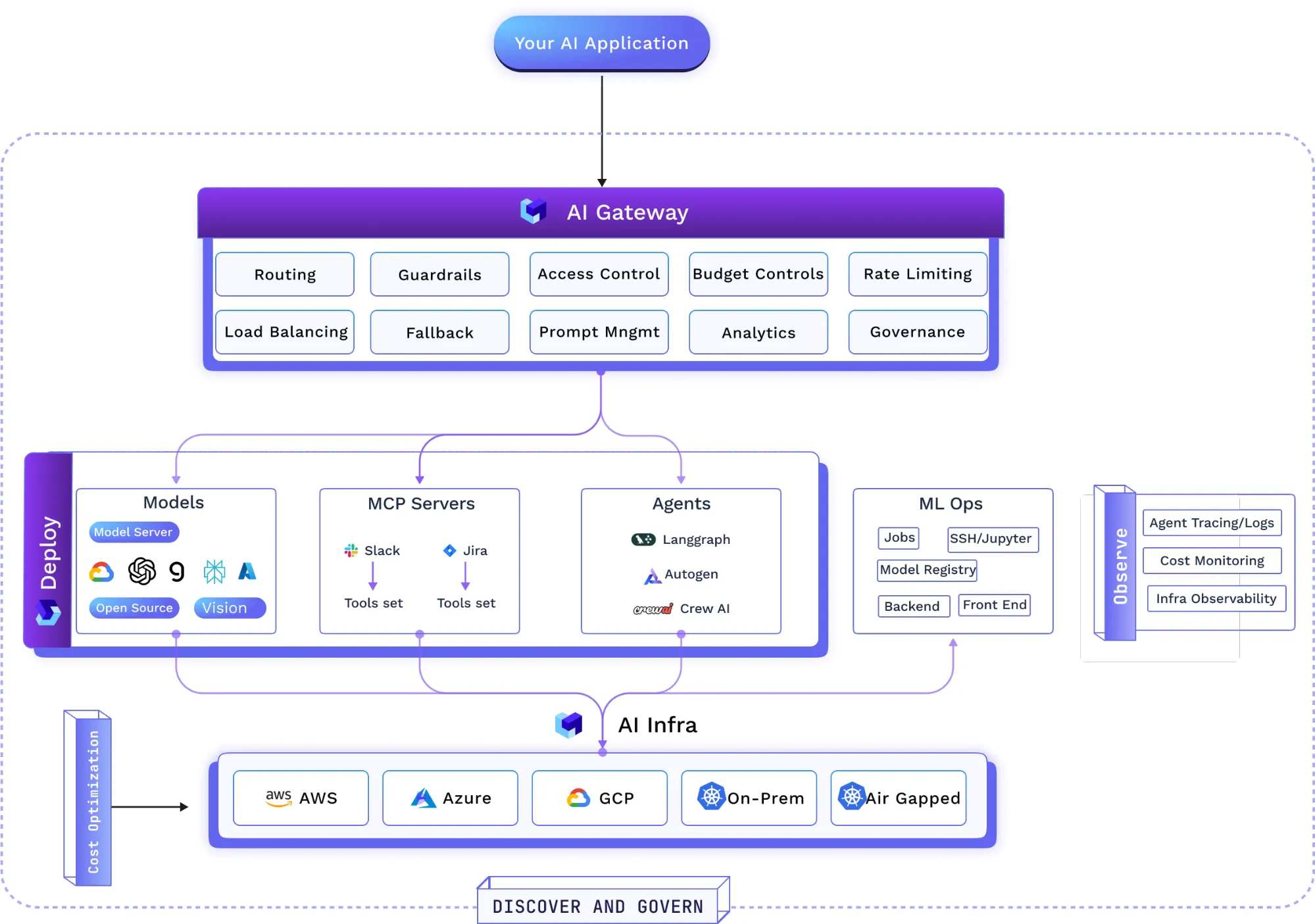

An AI Gateway is more than a simple proxy. It acts as a high-performance middleware layer (the ingress, policy, and telemetry layer) for all generative AI traffic. Positioned between applications and the ecosystem of LLM providers (including third-party APIs and self-hosted models), it functions as a unified control plane to address the most pressing challenges in AI adoption.

Unified Access and Vendor Independence

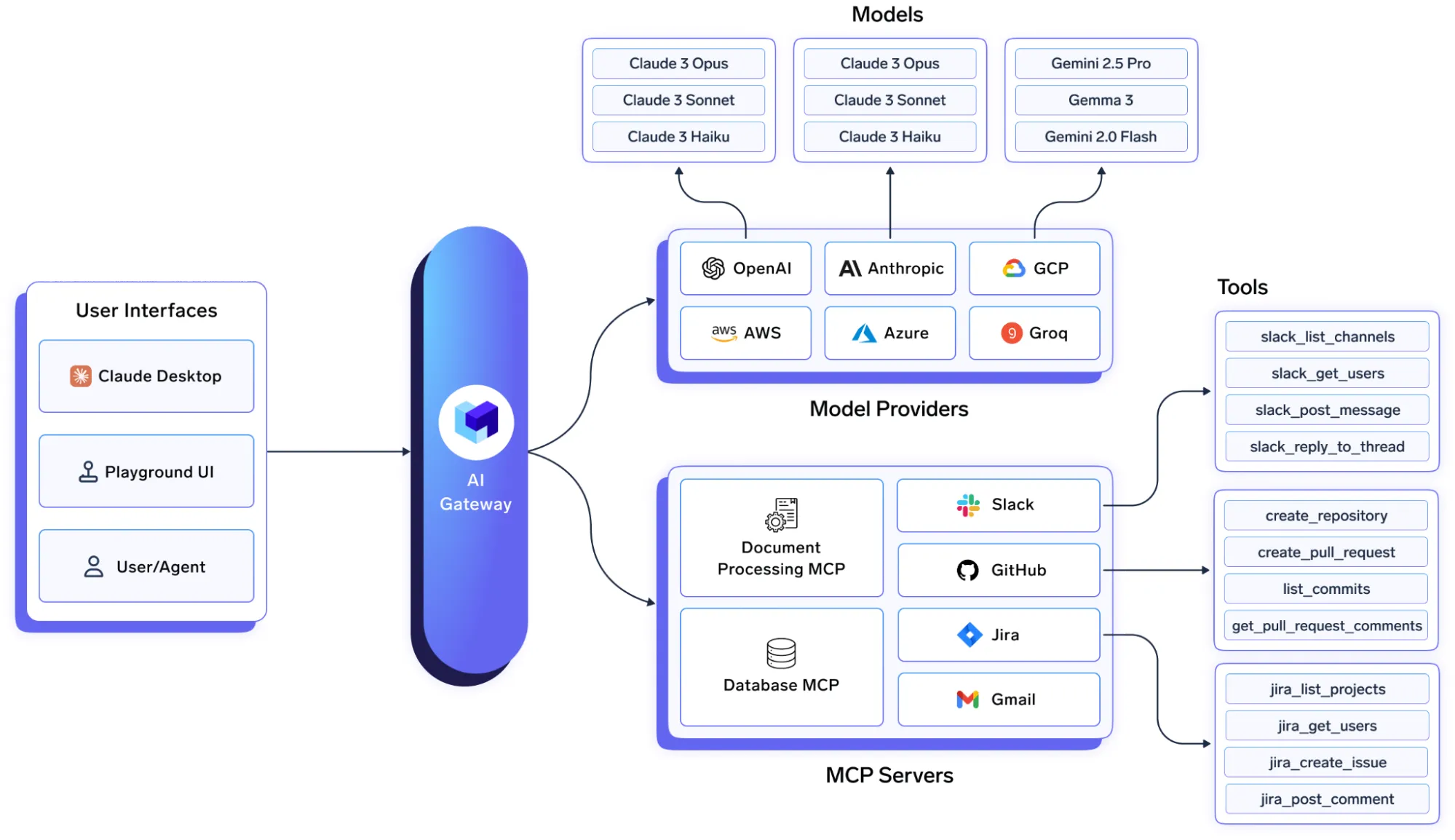

Managing complexity is a significant challenge in a world with multiple models. An AI Gateway provides a single, unified API endpoint for accessing many LLMs, both self-hosted open-source models (e.g., LLaMA, Falcon) and commercial models and providers (e.g., OpenAI, Claude, Gemini, Groq, Mistral). Through one interface, the gateway can support different model types: chat, completion, embedding, and reranking.

A practical design choice is compatibility with OpenAI-style APIs. This reduces the integration burden and allows teams to reuse existing client libraries. By translating common requests into provider-specific formats, the gateway serves as a protocol adapter. The choice of an LLM becomes a runtime configuration rather than a hard-coded decision. Teams can test a new, cheaper, or better-performing model by changing a setting in the gateway, without modifying application code. This accelerates experimentation and optimization while reducing lock-in risk.
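The protocol-adapter idea can be sketched in a few lines. This is a minimal illustration, not any particular gateway's implementation: the alias table `MODEL_ROUTES`, the provider labels `passthrough` and `split_system`, and the model names are all hypothetical. The point it shows is that the application only names an alias, while the gateway resolves the alias and reshapes the OpenAI-style body for the target provider.

```python
from typing import Any, Callable

Request = dict[str, Any]

# Hypothetical alias table: applications ask for an alias; operators change
# the target here at runtime without touching application code.
MODEL_ROUTES: dict[str, dict[str, str]] = {
    "default-chat": {"provider": "passthrough", "model": "example-model-a"},
    "cheap-chat": {"provider": "split_system", "model": "example-model-b"},
}


def to_passthrough(model: str, request: Request) -> Request:
    """Provider already accepts OpenAI-style bodies: only swap the model name."""
    return {**request, "model": model}


def to_split_system(model: str, request: Request) -> Request:
    """Hypothetical provider that expects the system prompt as a top-level field."""
    messages = request["messages"]
    system = "\n".join(m["content"] for m in messages if m["role"] == "system")
    payload: Request = {
        "model": model,
        "messages": [m for m in messages if m["role"] != "system"],
        "max_tokens": request.get("max_tokens", 256),
    }
    if system:
        payload["system"] = system
    return payload


ADAPTERS: dict[str, Callable[[str, Request], Request]] = {
    "passthrough": to_passthrough,
    "split_system": to_split_system,
}


def translate(request: Request) -> tuple[str, Request]:
    """Resolve the alias, then convert the body to the provider's format."""
    route = MODEL_ROUTES[request["model"]]
    adapter = ADAPTERS[route["provider"]]
    return route["provider"], adapter(route["model"], request)
```

Pointing `default-chat` at a different entry in `MODEL_ROUTES` is the "runtime configuration" change described above; callers keep sending the same request.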

Governance and Compliance

As AI becomes part of business processes, governance and compliance are essential. An AI Gateway centralizes API key management, offering developer-scoped tokens for development and tightly scoped, revocable tokens for production. It enforces Role-Based Access Control (RBAC) and integrates with enterprise Single Sign-On (SSO) to define access for specific users, teams, or services to certain models.
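As a rough sketch of how scoped, revocable tokens and RBAC might combine at the gateway, consider the check below. The role names, model names, and the `GatewayToken` type are hypothetical; a real deployment would typically derive roles from the SSO identity provider rather than a hard-coded table.

```python
from dataclasses import dataclass

# Hypothetical role-to-model grants, assumed to be defined once at the gateway.
ROLE_MODELS: dict[str, set[str]] = {
    "analyst": {"small-chat"},
    "ml-engineer": {"small-chat", "large-chat", "embeddings"},
}

# Token ids that have been revoked; checked on every request.
REVOKED_TOKENS: set[str] = set()


@dataclass(frozen=True)
class GatewayToken:
    token_id: str
    subject: str              # user, team, or service the token was issued to
    roles: frozenset[str]


def is_allowed(token: GatewayToken, model: str) -> bool:
    """Allow the call only if the token is live and some role grants the model."""
    if token.token_id in REVOKED_TOKENS:
        return False
    return any(model in ROLE_MODELS.get(role, set()) for role in token.roles)


def revoke(token: GatewayToken) -> None:
    """Revocation takes effect on the next request, with no client change."""
    REVOKED_TOKENS.add(token.token_id)
```

Because the provider API keys never leave the gateway, revoking a gateway token cuts off access without rotating the underlying provider credentials.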

Policies can be defined once at the gateway level and enforced on every request, e.g., filtering Personally Identifiable Information (PII) or blocking unsafe content. The gateway should capture tamper-evident records of requests and responses to support auditability for standards like SOC 2, HIPAA, and GDPR. For organizations with data residency needs, the gateway can be deployed in a virtual private cloud (VPC), on-premise, or in air-gapped environments so that sensitive data stays within organizational control.
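To make the policy and audit ideas concrete, here is a small sketch under stated assumptions. The two regular expressions are deliberately simplistic stand-ins for a real PII detector, and the hash chain is one common way to make a log tamper-evident (an assumption here, not a requirement of any of the standards named above): each entry's hash covers the previous entry's hash, so editing an old record invalidates everything after it.

```python
import hashlib
import json
import re

# Simplistic illustrative patterns; real PII detection needs far more coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

GENESIS = "0" * 64


def redact(text: str) -> str:
    """Replace matched PII with placeholders before the prompt leaves the gateway."""
    text = EMAIL.sub("[EMAIL]", text)
    return US_SSN.sub("[SSN]", text)


class AuditLog:
    """Append-only log in which each entry is chained to the previous hash."""

    def __init__(self) -> None:
        self.entries: list[dict[str, str]] = []

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = json.dumps(record, sort_keys=True)
        digest = hashlib.sha256((prev + body).encode("utf-8")).hexdigest()
        self.entries.append({"prev": prev, "body": body, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            expected = hashlib.sha256((prev + entry["body"]).encode("utf-8")).hexdigest()
            if entry["prev"] != prev or entry["hash"] != expected:
                return False
            prev = entry["hash"]
        return True
```

In practice the latest hash would also be anchored somewhere the gateway operators cannot rewrite, otherwise the whole chain could be regenerated after an edit.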

Cost Management and Optimization

Without proper oversight, AI-related expenses can grow quickly. An AI Gateway provides tools for proactive financial management, including real-time tracking of token usage and spend by user, team, model, provider, or geography. Pricing can be sourced from provider rate cards to avoid manual tracking.

This visibility enables internal chargeback or showback models, making AI a measurable resource. Administrators can set budget limits and quotas based on costs or token counts to prevent overruns. Routing features can reduce costs by directing queries to cost-effective models for specific tasks and by applying techniques such as dynamic model selection, caching, and request batching where feasible.
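A budget check of the kind described above might look like the following sketch. The rate card figures are made-up illustrative numbers, not real provider prices, and the `SpendTracker` class is hypothetical.

```python
from collections import defaultdict

# Hypothetical rate card: USD per 1,000 tokens as (input, output).
# The numbers are illustrative only, not real provider pricing.
RATE_CARD: dict[str, tuple[float, float]] = {
    "small-chat": (0.0005, 0.0015),
    "large-chat": (0.01, 0.03),
}


class BudgetExceeded(Exception):
    pass


class SpendTracker:
    """Tracks spend per team and rejects requests that would exceed a budget."""

    def __init__(self, budgets_usd: dict[str, float]) -> None:
        self.budgets = budgets_usd
        self.spend: defaultdict[str, float] = defaultdict(float)

    @staticmethod
    def cost(model: str, input_tokens: int, output_tokens: int) -> float:
        in_rate, out_rate = RATE_CARD[model]
        return input_tokens / 1000 * in_rate + output_tokens / 1000 * out_rate

    def record(self, team: str, model: str, input_tokens: int, output_tokens: int) -> float:
        cost = self.cost(model, input_tokens, output_tokens)
        if self.spend[team] + cost > self.budgets.get(team, 0.0):
            raise BudgetExceeded(f"{team} would exceed its budget")
        self.spend[team] += cost
        return cost
```

Since output token counts are only known after the call, a real gateway would likely estimate before the request and reconcile afterwards; this sketch records the final counts in one step to keep the accounting visible. The per-team totals in `spend` are what a showback or chargeback report would read.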

Reliability and Performance: What a High-Performance AI Gateway Looks Like

For AI to be essential, it must be dependable and responsive. Many AI applications, such as real-time chat assistants and Retrieval-Augmented Generation (RAG) systems, are sensitive to latency. A well-designed AI Gateway should target single-digit millisecond overhead in the hot path.

Architectural practices that enable this include:

- In-memory auth and rate limiting in the request path, avoiding external network calls.

- Asynchronous logging and metrics via a durable queue to keep the hot path minimal.

- Horizontal scaling with CPU-bound processing to maintain consistent performance as demand increases.

- Traffic controls such as latency-based routing to the fastest available model, weighted load balancing, and automatic failover when a provider degrades.

These design choices allow enterprises to place the gateway directly in the production inference path without undue performance trade-offs.
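Two of the practices listed above, in-memory rate limiting and latency-based routing with failover, can be sketched as follows. This is a simplified single-process illustration: the provider callables and latency figures are hypothetical, and a production gateway would also need thread safety, health probes, and retry budgets.

```python
import time
from typing import Callable


class TokenBucket:
    """In-memory rate limiter: the check needs no network call on the hot path."""

    def __init__(self, rate_per_sec: float, capacity: int,
                 now: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)
        self.now = now
        self.last = now()

    def allow(self) -> bool:
        current = self.now()
        # Refill in proportion to elapsed time, capped at the bucket size.
        self.tokens = min(self.capacity, self.tokens + (current - self.last) * self.rate)
        self.last = current
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


def pick_provider(latency_ms: dict[str, float], healthy: set[str]) -> str:
    """Latency-based routing: choose the fastest provider still marked healthy."""
    candidates = {name: ms for name, ms in latency_ms.items() if name in healthy}
    if not candidates:
        raise RuntimeError("no healthy provider")
    return min(candidates, key=lambda name: candidates[name])


def call_with_failover(providers: dict[str, Callable[[str], str]],
                       latency_ms: dict[str, float],
                       request: str) -> tuple[str, str]:
    """Try the fastest provider first; on error, drop it and try the next."""
    healthy = set(providers)
    while healthy:
        name = pick_provider(latency_ms, healthy)
        try:
            return name, providers[name](request)
        except Exception:
            healthy.discard(name)
    raise RuntimeError("all providers failed")
```

The clock is injectable (`now=`) so the limiter can be tested deterministically without sleeping.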

[Figure: Reference architecture for an AI Gateway]

Unleashing Agents with the Model Context Protocol (MCP)

Progress in AI hinges on what LLMs can accomplish through tools. Moving from text generation to agentic AI, meaning systems that can reason, plan, and interact with external tools, requires a standard way to connect models to the systems they must use.

The Rise of Agentic AI and the Need for a Standard Protocol

Agentic AI systems comprise collaborating elements: a core reasoning model, a memory module, an orchestrator, and tools. To be useful within a business, these agents must reliably communicate with internal and external systems like Slack, GitHub, Jira, Confluence, Datadog, and proprietary databases and APIs.

Historically, connecting an LLM to a tool required custom code for each API, which was fragile and hard to scale. The Model Context Protocol (MCP), introduced by Anthropic, standardizes how AI agents discover and interact with tools. MCP acts as an abstraction layer, separating the AI’s “brain” (the LLM) from its “hands” (the tools). An agent that “speaks MCP” can discover and use any tool exposed via an MCP Server, speeding development and promoting a modular, maintainable architecture for multi-tool agentic systems.
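To show what "speaking MCP" looks like on the wire, the sketch below builds the two core tool messages. MCP is based on JSON-RPC 2.0, and the method names `tools/list` and `tools/call` come from the public specification; everything else here is an assumption for illustration. `ToyToolServer` is an in-process stand-in, not a real MCP server: it skips the transport, the initialization handshake, and capability negotiation, and the `search_tickets` tool is invented.

```python
from typing import Any, Callable, Optional


def jsonrpc_request(request_id: int, method: str,
                    params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request envelope."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


class ToyToolServer:
    """In-process stand-in for an MCP server, handling only the two tool methods."""

    def __init__(self) -> None:
        self._tools: dict[str, dict[str, Any]] = {}

    def register(self, name: str, description: str,
                 input_schema: dict[str, Any], fn: Callable[..., Any]) -> None:
        self._tools[name] = {"description": description,
                             "inputSchema": input_schema, "fn": fn}

    def handle(self, message: dict[str, Any]) -> dict[str, Any]:
        reply: dict[str, Any] = {"jsonrpc": "2.0", "id": message["id"]}
        if message["method"] == "tools/list":
            # Discovery: the agent learns tool names and argument schemas.
            reply["result"] = {"tools": [
                {"name": name, "description": t["description"],
                 "inputSchema": t["inputSchema"]}
                for name, t in self._tools.items()
            ]}
        elif message["method"] == "tools/call":
            params = message["params"]
            output = self._tools[params["name"]]["fn"](**params.get("arguments", {}))
            reply["result"] = {"content": [{"type": "text", "text": str(output)}]}
        else:
            reply["error"] = {"code": -32601, "message": "Method not found"}
        return reply
```

An agent first sends `jsonrpc_request(1, "tools/list")` to discover what is available, then `jsonrpc_request(2, "tools/call", {"name": ..., "arguments": {...}})` to act. The same two messages work against any server, which is what removes the per-API custom code.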

The Risks of Ungoverned MCP

Deploying MCP servers without governance in a corporate environment raises three concerns:

- Security: MCP servers operate with whatever permissions they are given. Handling credentials and managing access controls across tools can become insecure and hard to audit.

- Visibility: Direct connections provide limited insight into agent activity. Without centralized logs of tool usage and outcomes, auditability suffers.

- Operations: Managing, updating, and monitoring many MCP servers across environments (development, staging, production) is complex.

The risks of ungoverned MCP mirror those of unregulated LLM API access but can be greater. An unchecked agent with tool access could, for example, delete a production database, post sensitive information to a public channel, or execute financial transactions incorrectly. A governance layer for MCP is therefore essential for enterprise deployments.

[Figure: The modern Gen-AI stack]

The Gateway as a Control Point for Agentic AI

An AI Gateway with MCP awareness allows organizations to register, deploy, and manage internal MCP Servers through a centralized interface. The gateway can act as a secure proxy for MCP tool calls, enabling developers to connect to registered servers through a single SDK and endpoint without directly managing tool-specific credentials.
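A gateway-side proxy for tool calls could be sketched like this. The `McpGatewayProxy` class, the per-team grants, and the handler signature are hypothetical; the sketch only illustrates the two properties described above: callers never see tool credentials, and every call passes one authorization check.

```python
from typing import Any, Callable

# Handler signature assumed for this sketch: (tool, arguments, credential) -> result
ToolHandler = Callable[[str, dict[str, Any], str], Any]


class McpGatewayProxy:
    """Registry plus proxy: the gateway holds credentials, callers hold grants."""

    def __init__(self) -> None:
        self._servers: dict[str, tuple[ToolHandler, str]] = {}
        self._grants: dict[str, set[tuple[str, str]]] = {}

    def register_server(self, name: str, handler: ToolHandler, credential: str) -> None:
        # The credential is stored here and never returned to callers.
        self._servers[name] = (handler, credential)

    def grant(self, team: str, server: str, tool: str) -> None:
        self._grants.setdefault(team, set()).add((server, tool))

    def call_tool(self, team: str, server: str, tool: str,
                  arguments: dict[str, Any]) -> Any:
        if (server, tool) not in self._grants.get(team, set()):
            raise PermissionError(f"{team} may not call {server}/{tool}")
        handler, credential = self._servers[server]
        return handler(tool, arguments, credential)
```

Destructive tools, such as one that drops a database table, can simply be left out of every team's grants or routed through an approval step before `call_tool` forwards them.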

By integrating MCP support within the gateway, organizations get a unified control plane for model and tool calls. Agentic workflows involve a loop: the agent reasons by calling an LLM, then acts by calling a tool, then reasons again. With an integrated approach, the entire process can be captured in a single trace: the initial prompt, the LLM call, the model’s decision to use a tool, the tool call through the same gateway, the tool’s response, and the final output. This unified view simplifies policy enforcement, debugging, and compliance.
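The reason-act loop and its single trace can be illustrated with a small sketch. The decision format returned by `llm` (either `{"tool": ..., "args": ...}` or `{"final": ...}`) is an assumption made for this example, not a standard, and the `Trace` class is a toy stand-in for a real tracing system.

```python
import uuid
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Trace:
    """All spans of one agent run share a single trace id."""
    trace_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    spans: list[dict[str, Any]] = field(default_factory=list)

    def record(self, kind: str, name: str, detail: Any) -> None:
        self.spans.append({"trace_id": self.trace_id, "seq": len(self.spans),
                           "kind": kind, "name": name, "detail": detail})


def run_agent(prompt: str,
              llm: Callable[[str], dict[str, Any]],
              tools: dict[str, Callable[..., Any]],
              trace: Trace,
              max_steps: int = 5) -> str:
    """Reason (LLM call), act (tool call), repeat; every step lands in one trace."""
    trace.record("prompt", "user", prompt)
    context = prompt
    for _ in range(max_steps):
        decision = llm(context)          # hypothetical decision format, see lead-in
        trace.record("llm", "reason", decision)
        if "final" in decision:
            trace.record("output", "final", decision["final"])
            return decision["final"]
        result = tools[decision["tool"]](**decision["args"])
        trace.record("tool", decision["tool"], result)
        context = f"{context}\n[tool {decision['tool']} returned: {result}]"
    raise RuntimeError("step limit reached without a final answer")
```

When both `llm` and the entries in `tools` are calls through the same gateway, the spans above are exactly the records the gateway already sees, which is why one trace can cover the whole run.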

Conclusion

AI Gateways and MCP together provide a practical path to operating agentic AI safely at scale. They help teams treat advanced models and tools as managed components of the broader software stack—subject to consistent policy, observability, and performance requirements. With a centralized control layer for both models and tools, organizations can adopt AI in a way that is reliable, secure, and cost-aware.


Frequently Asked Questions

Q1. What is an AI Gateway?

A. An AI Gateway is a middleware layer that centralizes control of all AI traffic. It unifies access to multiple LLMs, enforces governance, manages costs, and ensures reliability across models and tools used in enterprise AI systems.

Q2. How does an AI Gateway support governance and compliance?

A. It enforces RBAC, integrates with SSO, manages API keys, and applies policies for data filtering and audit logging. This supports compliance with standards like SOC 2, HIPAA, and GDPR.

Q3. What role does MCP play in agentic AI?

A. MCP standardizes how AI agents discover and interact with tools, removing the need for custom integrations. It allows modular, scalable connections between LLMs and enterprise systems like Slack or Jira.
