As the field of artificial intelligence evolves, Large Language Model (LLM) datasets have become the foundation of much of its progress. Whether you’re fine-tuning GPT models, building domain-specific AI assistants, or conducting detailed research, dataset quality can make the difference between success and failure. In this article, we take a deep dive into one of GitHub’s most comprehensive collections of LLM datasets, a resource that can change how developers approach training and fine-tuning LLMs.

Why Data Quality Matters More Than Ever

The AI community has learned an important lesson: data is the new gold. Computational power and model architectures may grab the headlines, but training and fine-tuning datasets determine the real-world performance of your AI systems. Low-quality data leads to hallucinations, biased outputs, and erratic model behavior, which can derail an entire project.

The mlabonne/llm-datasets repository has become a go-to resource for developers searching for high-quality datasets for post-training LLMs. It is not a random collection of datasets but a carefully curated list that emphasizes three properties separating good datasets from great ones.

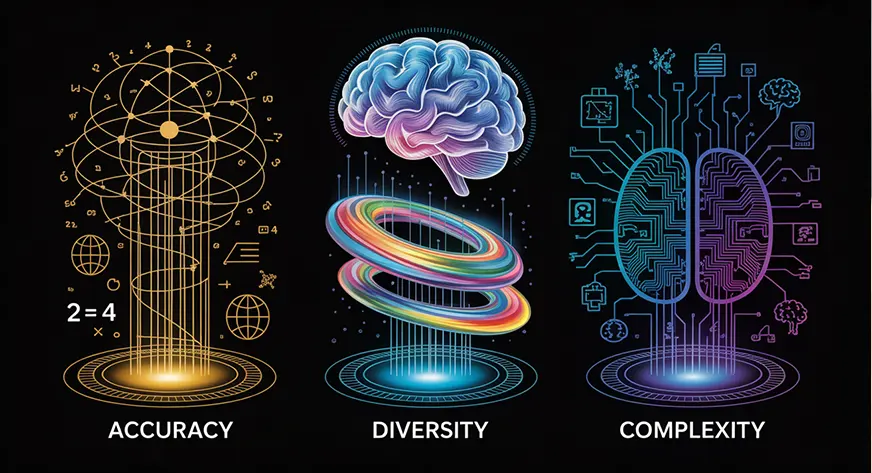

The Three Pillars of High-Quality LLM Datasets

Accuracy: The Foundation for Trustworthy AI

Each sample in a high-quality dataset must be factually accurate and relevant to its instruction. This calls for robust validation workflows, such as math solvers for numerical problems or unit tests for code datasets. No matter how sophisticated the model architecture is, training on inaccurate data produces misleading outputs.
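
To make the unit-testing idea concrete, here is a minimal Python sketch of a verification filter for a code dataset. It assumes each sample has hypothetical `solution` and `tests` fields holding Python source, with tests written as plain `assert` statements; real datasets use their own schemas. Because `exec()` runs untrusted code, a production pipeline should run it in an isolated process with a timeout.

```python
from typing import Any


def passes_tests(solution: str, tests: str) -> bool:
    """Execute a candidate solution, then its assert-based tests, in one fresh namespace.

    Returns True only if both run without raising. WARNING: exec() runs arbitrary
    code; a real pipeline should isolate it (separate process, timeout, no network).
    """
    namespace: dict[str, Any] = {}
    try:
        exec(solution, namespace)  # defines the function(s) under test
        exec(tests, namespace)     # a failing assert raises AssertionError
    except Exception:
        return False
    return True


def filter_verified(samples: list[dict[str, str]]) -> list[dict[str, str]]:
    """Keep samples whose 'solution' passes its 'tests' (hypothetical field names)."""
    return [s for s in samples if passes_tests(s["solution"], s["tests"])]


samples = [
    {"solution": "def add(a, b):\n    return a + b", "tests": "assert add(2, 3) == 5"},
    {"solution": "def add(a, b):\n    return a - b", "tests": "assert add(2, 3) == 5"},
]
verified = filter_verified(samples)
print(len(verified))  # 1
```

Only the first sample survives, because the second implementation fails its own test.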

Diversity: Covering the Range of Human Knowledge

A truly useful dataset covers a wide range of use cases so that your model rarely encounters out-of-distribution inputs. Diverse data improves generalization, allowing your AI systems to handle unexpected queries more gracefully. This matters especially for general-purpose language models, which are expected to perform well across many domains.
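
One way to keep an eye on diversity is to measure it. The sketch below is a minimal illustration, not a tool from the repository: it computes two simple proxies in Python, the normalized entropy of per-sample category labels (hypothetical tags you would have to assign yourself) and the distinct-n ratio, the fraction of unique word n-grams across prompts.

```python
import math
from collections import Counter


def normalized_entropy(labels: list[str]) -> float:
    """Shannon entropy of the label distribution, scaled to [0, 1].

    1.0 means samples are spread evenly across categories; values near 0
    mean a single category dominates.
    """
    counts = Counter(labels)
    if len(counts) <= 1:
        return 0.0
    total = len(labels)
    entropy = -sum((c / total) * math.log(c / total) for c in counts.values())
    return entropy / math.log(len(counts))  # divide by the maximum possible entropy


def distinct_n(texts: list[str], n: int = 2) -> float:
    """Fraction of word n-grams that are unique across all texts (distinct-n)."""
    ngrams = []
    for text in texts:
        words = text.lower().split()
        # zip over shifted copies of the word list yields consecutive n-grams
        ngrams.extend(zip(*(words[i:] for i in range(n))))
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0


labels = ["math", "code", "chat", "math"]  # hypothetical per-sample category tags
prompts = ["Solve for x in 2x = 4", "Write a Python function", "Solve for y in 3y = 9"]
print(round(normalized_entropy(labels), 3), round(distinct_n(prompts), 3))
```

Low scores on either metric suggest the dataset leans heavily on a few task types or phrasings.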

Complexity: Beyond Simple Question-Answer Pairings

Modern datasets go beyond simple question-answer pairs. They include complex reasoning tasks, such as samples built with chain-of-thought prompting, which require models to reason step by step and justify their answers. This complexity is essential for AI systems that must operate in nuanced, real-world situations.

Top LLM Datasets for Different Categories

General-purpose Powerhouses

The repository contains some remarkable general-purpose datasets that include balanced combinations of chat, code, and mathematical reasoning:

- Infinity-Instruct (7.45M samples): Released by BAAI in August 2024, this dataset applies evolution techniques to samples drawn from a collection of open-source datasets, producing more advanced training samples. It is widely regarded as a gold standard among evolved, high-quality instruction datasets.

Link: https://huggingface.co/datasets/BAAI/Infinity-Instruct

- WebInstructSub (2.39M samples): This dataset is built with a web-scale pipeline: it retrieves documents from Common Crawl, extracts question-answer pairs from them, and refines those pairs through further processing. Introduced in the MAmmoTH2 paper, it shows how web-scale data can be turned into high-quality training examples.

Link: https://huggingface.co/datasets/chargoddard/WebInstructSub-prometheus

- The-Tome (1.75M samples): Created by Arcee AI, it is a collection of datasets that were reranked and filtered to emphasize clear instruction following, a capability that matters greatly in production AI systems.

Link: https://huggingface.co/datasets/arcee-ai/The-Tome
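
Mixing general-purpose datasets usually means converting them to one conversation format first. Many instruction datasets on the Hugging Face Hub store conversations in the ShareGPT style (a list of turns with `from` and `value` keys), while chat templates expect `role` and `content`. The sketch below assumes that ShareGPT layout and the speaker tags listed in the hypothetical `ROLE_MAP`; check each dataset card for its actual schema before relying on it.

```python
# Assumed mapping from ShareGPT speaker tags to chat-template roles.
ROLE_MAP = {
    "system": "system",
    "human": "user",
    "user": "user",
    "gpt": "assistant",
    "assistant": "assistant",
}


def sharegpt_to_messages(conversation: list[dict[str, str]]) -> list[dict[str, str]]:
    """Convert [{'from': ..., 'value': ...}] turns into [{'role': ..., 'content': ...}]."""
    messages = []
    for turn in conversation:
        role = ROLE_MAP.get(turn["from"])
        if role is None:
            raise ValueError(f"unknown speaker tag: {turn['from']!r}")
        messages.append({"role": role, "content": turn["value"].strip()})
    return messages


example = [
    {"from": "human", "value": "What is 2 + 2?"},
    {"from": "gpt", "value": "2 + 2 = 4."},
]
print(sharegpt_to_messages(example))
# [{'role': 'user', 'content': 'What is 2 + 2?'}, {'role': 'assistant', 'content': '2 + 2 = 4.'}]
```

Raising on unknown tags, rather than silently dropping turns, makes schema mismatches between datasets visible early.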

Mathematical Reasoning: Solving the Logic Behind the Problem

Mathematical reasoning remains one of the most difficult areas for language models. The repository lists several targeted datasets that address this challenge:

- OpenMathInstruct-2 (14M samples): Released by Nvidia in September 2024, it uses Llama-3.1-405B-Instruct to create augmented samples from established benchmarks such as GSM8K and MATH. It is one of the most up-to-date math training datasets available.

Link: https://huggingface.co/datasets/nvidia/OpenMathInstruct-2

- NuminaMath-CoT (859k samples): It is best known for powering the winner of the first progress prize of the AI Math Olympiad. It emphasizes chain-of-thought reasoning, and a tool-integrated reasoning version is also available for use cases that need stronger problem-solving.

Link: https://huggingface.co/datasets/AI-MO/NuminaMath-CoT

- MetaMathQA (395k samples): It takes a novel approach, rewriting math questions from multiple perspectives to create varied training conditions and improve model robustness in the math domain.

Link: https://huggingface.co/datasets/meta-math/MetaMathQA
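
Math datasets like these are often checked by comparing a final answer against a reference. Solutions in the style of the MATH benchmark typically wrap the final answer in `\boxed{}`; the sketch below assumes that convention, extracts the last boxed answer, and compares answers exactly with `fractions.Fraction` when both sides are numeric. It is a deliberately simple stand-in for a real math verifier, which would also handle LaTeX expressions and symbolic equivalence.

```python
from fractions import Fraction
from typing import Optional


def extract_boxed(solution: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in a solution, handling nested braces."""
    marker = "\\boxed{"
    start = solution.rfind(marker)
    if start == -1:
        return None
    depth = 1
    content_start = start + len(marker)
    for i in range(content_start, len(solution)):
        if solution[i] == "{":
            depth += 1
        elif solution[i] == "}":
            depth -= 1
            if depth == 0:
                return solution[content_start:i]
    return None  # unbalanced braces: treat as unparseable


def _as_number(text: str) -> Optional[Fraction]:
    """Parse '1,000', '0.5' or '1/2' exactly; return None for non-numeric answers."""
    try:
        return Fraction(text.replace(",", "").strip())
    except (ValueError, ZeroDivisionError):
        return None


def answers_match(predicted: str, reference: str) -> bool:
    """Compare numerically when both sides are numbers, else by whitespace-free string."""
    p, r = _as_number(predicted), _as_number(reference)
    if p is not None and r is not None:
        return p == r
    return predicted.replace(" ", "") == reference.replace(" ", "")


solution = r"Half of 12 is 6, so the fraction is \boxed{\frac{1}{2}}."
print(extract_boxed(solution))  # \frac{1}{2}
print(answers_match("0.5", "1/2"))  # True
```

Using exact fractions avoids floating-point false negatives such as `0.1 + 0.2` versus `0.3`.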

Code Generation: Bridging AI and Software Development

Programming requires dedicated datasets that capture syntax, logic, and best practices across different programming languages:
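
Whatever code dataset you choose, a cheap first-pass check is whether each snippet even parses. The minimal sketch below uses Python's standard `ast` module to drop Python samples with syntax errors and to list the functions they define. It executes nothing, so it complements rather than replaces unit-test-based verification; the sample snippets are made up.

```python
import ast


def is_valid_python(code: str) -> bool:
    """Return True if the code parses as Python (syntax only; nothing is executed)."""
    try:
        ast.parse(code)
    except (SyntaxError, ValueError):  # ValueError: e.g. null bytes on older Pythons
        return False
    return True


def defined_functions(code: str) -> list[str]:
    """List the names of functions defined anywhere in a (valid) snippet."""
    tree = ast.parse(code)
    return [
        node.name
        for node in ast.walk(tree)
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    ]


snippets = ["def square(x):\n    return x * x", "def broken(:\n    pass"]
valid = [s for s in snippets if is_valid_python(s)]
print(len(valid), defined_functions(valid[0]))  # 1 ['square']
```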

Advanced Capabilities: Function Calling and Agent Behavior

Modern AI applications need models that can handle complex function calling and exhibit agent-like behavior.
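
Function-calling samples are only useful if the calls in them are well-formed. The sketch below validates a model's tool call against a hypothetical registry (`TOOLS`, with a made-up `get_weather` tool). It assumes each call is a JSON object with `name` and `arguments` keys, a common but not universal convention, and uses plain Python type checks as a simplified stand-in for full JSON Schema validation.

```python
import json
from typing import Any

# Hypothetical, simplified tool registry: required argument names and expected
# Python types (a stand-in for a full JSON Schema validator).
TOOLS: dict[str, dict[str, Any]] = {
    "get_weather": {"required": ["city"], "properties": {"city": str, "unit": str}},
}


def validate_tool_call(raw: str, tools: dict[str, dict[str, Any]]) -> tuple[bool, str]:
    """Check that a tool call is valid JSON naming a known tool with valid arguments."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, f"invalid JSON: {exc.msg}"
    if not isinstance(call, dict):
        return False, "tool call must be a JSON object"
    name = call.get("name")
    if not isinstance(name, str) or name not in tools:
        return False, f"unknown tool: {name!r}"
    args = call.get("arguments", {})
    if not isinstance(args, dict):
        return False, "arguments must be a JSON object"
    spec = tools[name]
    missing = [key for key in spec["required"] if key not in args]
    if missing:
        return False, f"missing arguments: {missing}"
    for key, value in args.items():
        expected = spec["properties"].get(key)
        if expected is None:
            return False, f"unexpected argument: {key!r}"
        if not isinstance(value, expected):
            return False, f"argument {key!r} should be {expected.__name__}"
    return True, "ok"


print(validate_tool_call('{"name": "get_weather", "arguments": {"city": "Paris"}}', TOOLS))
# (True, 'ok')
print(validate_tool_call('{"name": "get_weather", "arguments": {}}', TOOLS))
# (False, "missing arguments: ['city']")
```

Returning a reason string alongside the boolean makes it easy to log why samples were rejected.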

Real-World Conversation Data: Learning from Human Interaction

To create engaging AI assistants, it is critical to capture natural human communication patterns:

- WildChat-1M (1.04M samples): It contains real conversations between users and advanced language models such as GPT-3.5 and GPT-4, capturing authentic interactions and revealing actual usage patterns and expectations.

Link: https://huggingface.co/datasets/allenai/WildChat-1M

- Lmsys-chat-1m: It contains real-world conversations with 25 different language models, collected from over 210,000 unique IP addresses, making it one of the largest datasets of real-world conversations.

Link: https://huggingface.co/datasets/lmsys/lmsys-chat-1m
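
Multi-turn logs like these can be turned into supervised examples in several ways. One simple approach, sketched below under the assumption that conversations are already in `role`/`content` form, creates one example per assistant turn and uses every earlier message as its context. Many training libraries instead keep the whole conversation and mask the loss on non-assistant tokens; the flattening here is just an easy-to-inspect alternative, and the conversation is made up.

```python
def to_training_pairs(messages: list[dict[str, str]]) -> list[dict[str, object]]:
    """Create one example per non-empty assistant turn, using every earlier message as context."""
    pairs = []
    for i, message in enumerate(messages):
        if message["role"] == "assistant" and i > 0 and message["content"].strip():
            pairs.append({"context": messages[:i], "response": message["content"]})
    return pairs


conversation = [
    {"role": "user", "content": "Suggest a name for a cat."},
    {"role": "assistant", "content": "How about Miso?"},
    {"role": "user", "content": "Something longer?"},
    {"role": "assistant", "content": "Sir Whiskerton."},
]
pairs = to_training_pairs(conversation)
print(len(pairs), len(pairs[1]["context"]))  # 2 3
```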

Preference Alignment: Teaching AI to Match Human Values

Preference alignment datasets go beyond instruction following: they capture which responses humans prefer, helping ensure that AI systems behave in line with human values and preferences:
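
Preference data is typically stored as pairs: a prompt, a preferred (`chosen`) response, and a dispreferred (`rejected`) one. Direct Preference Optimization (DPO), one common way to train on such pairs, pushes the policy to raise the likelihood of the chosen response relative to a frozen reference model and to lower that of the rejected one. The sketch below computes the per-pair DPO loss from summed log-probabilities; the record and the numbers are made up, and in practice the log-probabilities come from scoring each response with the policy and reference models.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """Per-pair DPO loss: -log(sigmoid(beta * ((pc - rc) - (pr - rr))))."""
    # How much more the policy favors each response than the reference model does.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    z = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(z)) == log(1 + exp(-z)), written to avoid overflow for large |z|
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


# Hypothetical preference record; the log-probabilities below are invented.
record = {
    "prompt": "Explain recursion.",
    "chosen": "A function that calls itself on a smaller input until it reaches a base case.",
    "rejected": "Recursion is when code repeats.",
}
print(round(dpo_loss(-12.0, -15.0, -13.0, -14.0), 4))
```

When the policy and reference agree, the loss is log 2; it falls below that as the policy shifts probability toward the chosen response.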

Tools for Dataset Generation, Filtering, and Exploration

The GitHub repository not only lists LLM datasets but also includes a full set of tools for dataset generation, filtering, and exploration:

Data Generation Tools

- Curator: Simplifies synthetic data generation, with strong support for batching

- Distilabel: A complete toolset for generating both supervised fine-tuning (SFT) and direct preference optimization (DPO) data

- Augmentoolkit: Converts unstructured text into structured datasets using multiple model types

Quality Control and Filtering

- Argilla: A collaborative platform for manual dataset filtering and data annotation

- SemHash: Performs fuzzy deduplication using embeddings from a lightweight distilled model

- Judges: A library of LLM judges for automated quality checks
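
SemHash deduplicates with embeddings from a distilled model. To show the idea without any model download, the sketch below swaps in a much cruder representation: character-trigram count vectors compared by cosine similarity, with a greedy pass that drops any text too similar to one already kept. The 0.9 threshold is an arbitrary assumption, and the pairwise comparison is O(n²), so it only suits small datasets; dedicated tools use approximate nearest-neighbor search to scale.

```python
import math
from collections import Counter


def char_ngrams(text: str, n: int = 3) -> Counter:
    """Bag of character n-grams after lowercasing and collapsing whitespace."""
    text = " ".join(text.lower().split())
    if len(text) < n:
        return Counter([text]) if text else Counter()
    return Counter(text[i:i + n] for i in range(len(text) - n + 1))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[gram] for gram, count in a.items() if gram in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def near_dedup(texts: list[str], threshold: float = 0.9) -> list[str]:
    """Greedily keep a text unless it is too similar to one already kept (O(n^2))."""
    kept: list[str] = []
    kept_vectors: list[Counter] = []
    for text in texts:
        vector = char_ngrams(text)
        if any(cosine(vector, other) >= threshold for other in kept_vectors):
            continue
        kept.append(text)
        kept_vectors.append(vector)
    return kept


prompts = [
    "What is the capital of France?",
    "what is the capital of  France?",
    "Write a haiku about rain.",
]
print(near_dedup(prompts))  # ['What is the capital of France?', 'Write a haiku about rain.']
```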

Data Exploration and Analysis

- Lilac: A feature-rich tool for dataset exploration and quality assurance

- Nomic Atlas: A tool for interacting with instruction data to uncover insights

- Text-clustering: A framework for clustering textual data into meaningful groups

Best Practices for Dataset Selection and Implementation

When selecting datasets, keep these strategic perspectives in mind:

- Start with general-purpose datasets such as Infinity-Instruct or The-Tome, which give your model a strong foundation with broad coverage and reliable performance across many tasks.

- Layer on specialized datasets that fit your use case. For example, if your application requires mathematical reasoning, incorporate datasets such as NuminaMath-CoT. If your model focuses on code generation, consider datasets with tested code, such as Tested-143k-Python-Alpaca.

- If you are building user-facing applications, do not forget preference alignment data. Datasets such as Skywork-Reward-Preference help your AI systems behave in ways that align with user expectations and values.

- Use the quality assurance tools listed in the repository. Its emphasis on accuracy, diversity, and complexity is backed by tools that help you uphold these standards in your own datasets.
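
Layering general-purpose and specialized datasets comes down to choosing mixture weights. The sketch below builds a shuffled mix from in-memory lists using per-source quotas; the source names, weights, and sizes are hypothetical placeholders, and in practice you would load real datasets (for example, with the Hugging Face `datasets` library) before mixing.

```python
import random


def mix_datasets(
    sources: dict[str, list],
    weights: dict[str, float],
    total: int,
    seed: int = 0,
) -> list[tuple[str, object]]:
    """Build a shuffled training mix with roughly `total` samples split by `weights`.

    Each source contributes round(total * weight / sum(weights)) samples, drawn
    without replacement (capped at the source size). Samples are tagged with their
    source name so the mix can be audited later.
    """
    rng = random.Random(seed)  # fixed seed keeps the mix reproducible
    weight_sum = sum(weights.values())
    mixed: list[tuple[str, object]] = []
    for name, samples in sources.items():
        quota = min(round(total * weights[name] / weight_sum), len(samples))
        mixed.extend((name, s) for s in rng.sample(samples, quota))
    rng.shuffle(mixed)
    return mixed


general = [f"general-{i}" for i in range(100)]  # placeholder general-purpose samples
math_data = [f"math-{i}" for i in range(50)]  # placeholder math samples
mix = mix_datasets({"general": general, "math": math_data}, {"general": 0.8, "math": 0.2}, total=50)
print(len(mix), sum(1 for name, _ in mix if name == "math"))  # 50 10
```

Because of rounding and the per-source cap, the final size can differ slightly from `total`; tagging each sample with its source makes such gaps easy to spot.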

Conclusion

Ready to use these datasets in your project? Here is how to get started:

- Go to the repository at github.com/mlabonne/llm-datasets and see all the available resources

- Identify what you need based on your application (general purpose, math, coding, etc.)

- Pick datasets that meet your requirements and the quality standards of your use case

- Use the recommended tools to filter the datasets and assure quality

- Contribute back to the community by sharing improvements or new datasets

We live in remarkable times for AI. Progress is accelerating, but well-curated, high-quality datasets remain essential to success. The datasets in this GitHub repository provide a strong starting point for building LLMs that are capable, accurate, and human-centered.
